ICME Workshop on Hyper-Realistic Multimedia for Enhanced Quality of Experience (ICMEW)

July 18-22, 2022 | Taipei, Taiwan

Conference Website

Ekrem Çetinkaya (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

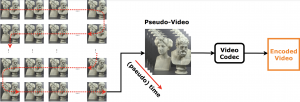

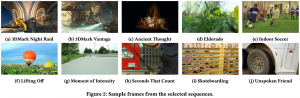

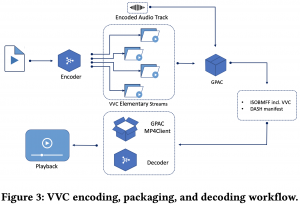

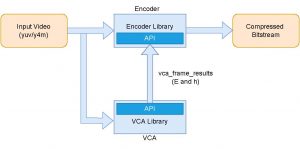

Abstract: Light field imaging captures both spatial and angular information, which enables post-capture actions such as refocusing and changing the view perspective. However, capturing richer information about the 3D scene results in a huge amount of data. To improve the compression efficiency of existing light field compression methods, we investigate the impact of light field super-resolution approaches (both spatial and angular super-resolution) on compression efficiency. To this end, we first downscale light field images over (i) spatial resolution, (ii) angular resolution, and (iii) spatial-angular resolution and encode them using Versatile Video Coding (VVC). We then apply a set of light field super-resolution deep neural networks to reconstruct the light field images at their full spatial-angular resolution and compare the resulting compression efficiency. Experimental results show that encoding the low angular resolution light field image and applying angular super-resolution yield bitrate savings of 51.16% and 53.41% at the same PSNR and SSIM, respectively, compared to encoding the light field image at high resolution.

Keywords: Light field, Compression, Super-resolution, VVC.
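
The three downscaling variants named in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the array layout `(u, v, height, width, channels)`, the function names, view subsampling for the angular case, and average pooling for the spatial case are all assumptions chosen for illustration. The abstract does not state which downscaling filter was used, and the VVC encoding and super-resolution steps are not shown.

```python
import numpy as np


def angular_downscale(lf, step=2):
    """Keep every `step`-th view along both angular axes (assumed subsampling)."""
    return lf[::step, ::step]


def spatial_downscale(lf, factor=2):
    """Average-pool every view by `factor` in height and width (assumed filter)."""
    u, v, h, w, c = lf.shape
    h2, w2 = h // factor, w // factor
    # Crop so that height and width are exact multiples of the factor.
    cropped = lf[:, :, :h2 * factor, :w2 * factor]
    blocks = cropped.reshape(u, v, h2, factor, w2, factor, c)
    return blocks.mean(axis=(3, 5))


# Hypothetical toy light field: 9x9 views of 32x48 RGB pixels.
lf = np.random.default_rng(0).random((9, 9, 32, 48, 3))

lf_angular = angular_downscale(lf)                   # (ii) angular only
lf_spatial = spatial_downscale(lf)                   # (i) spatial only
lf_both = spatial_downscale(angular_downscale(lf))   # (iii) spatial-angular

print(lf_angular.shape, lf_spatial.shape, lf_both.shape)
```

In this sketch each variant would then be encoded and passed to the matching super-resolution network to restore the original `(9, 9, 32, 48, 3)` shape before quality is measured.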

On April 6th, 2022, Natalia Mathá (former Sokolova) successfully defended her thesis on “Relevance Detection and Relevance-Based Video Compression in Cataract Surgery Videos” under the supervision of Assoc.-Prof. Klaus Schöffmann and Assoc.-Prof. Christian Timmerer. The defense was chaired by Univ.-Prof. Hermann Hellwagner and the examiners were Assoc.-Prof. Konstantin Schekotihin and Assoc.-Prof. Mathias Lux. Congratulations to Dr. Mathá for this great achievement!
