The following papers have been accepted at the Intel4EC Workshop 2025, which will be held on June 4, 2025, in Milan, Italy, in conjunction with the 39th IEEE International Parallel and Distributed Processing Symposium (IPDPS 2025).

Title: 6G Infrastructures for Edge AI: An Analytical Perspective

Authors: Kurt Horvath, Shpresa Tuda*, Blerta Idrizi*, Stojan Kitanov*, Fisnik Doko*, Dragi Kimovski (*Mother Teresa University Skopje, North Macedonia)

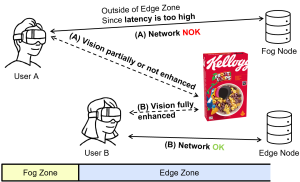

Abstract: The convergence of Artificial Intelligence (AI) and the Internet of Things has accelerated the development of distributed, network-sensitive applications, necessitating ultra-low latency, high throughput, and real-time processing capabilities. While 5G networks represent a significant technological milestone, their ability to support AI-driven edge applications remains constrained by performance gaps observed in real-world deployments. This paper addresses these limitations and highlights critical advancements needed to realize a robust and scalable 6G ecosystem optimized for AI applications. Furthermore, we conduct an empirical evaluation of 5G network infrastructure in central Europe, with latency measurements ranging from 61 ms to 110 ms across different close geographical areas. These values exceed the requirements of latency-critical AI applications by approximately 270%, revealing significant shortcomings in current deployments. Building on these findings, we propose a set of recommendations to bridge the gap between existing 5G performance and the requirements of next-generation AI applications.

Title: Blockchain consensus mechanisms for democratic voting environments

Authors: Thomas Auer, Kurt Horvath, Dragi Kimovski

Abstract: Democracy relies on robust voting systems to ensure transparency, fairness, and trust in electoral processes. Despite their foundational role, voting mechanisms – both manual and electronic – remain vulnerable to threats such as vote manipulation, data loss, and administrative interference. These vulnerabilities highlight the need for secure, scalable, and cost-efficient alternatives to safeguard electoral integrity. The proposed fully decentralized voting system leverages blockchain technology to overcome critical challenges in modern voting systems, including scalability, cost-efficiency, and transaction throughput. By eliminating the need for a centralized authority, the system ensures transparency, security, and real-time monitoring by integrating Distributed Ledger Technologies. This novel architecture reduces operational costs, enhances voter anonymity, and improves scalability, achieving significantly lower costs for 1,000 votes than traditional voting methods.

The system introduces a formalized decentralized voting model that adheres to constitutional requirements and practical standards, making it suitable for implementation in direct and representative democracies. Additionally, the design accommodates high transaction volumes without compromising performance, ensuring reliable operation even in large-scale elections. The results demonstrate that this system outperforms classical approaches regarding efficiency, security, and affordability, paving the way for broader adoption of blockchain-based voting solutions.
