Pattern Recognition Special Issue on Advances in Multimodal-Driven Video Understanding and Assessment

The rapid growth of video content across various domains has led to an increasing demand for more intelligent and efficient video understanding and assessment techniques. This Special Issue focuses on integrating multimodal information, such as audio, text, and sensor data, with video to enhance video processing, analysis, and interpretation. Multimodal-driven approaches are crucial for numerous real-world applications, including automated surveillance, content recommendation, and healthcare diagnostics.

This Special Issue invites cutting-edge research on topics such as video capture, compression, transmission, enhancement, and quality assessment, alongside advancements in deep learning, multimodal fusion, and real-time processing frameworks. By exploring innovative methodologies and emerging applications, we aim to provide a comprehensive perspective on the latest developments in this dynamic and evolving field.

Topics of interest include, but are not limited to:

- Multimodal-driven video capture techniques

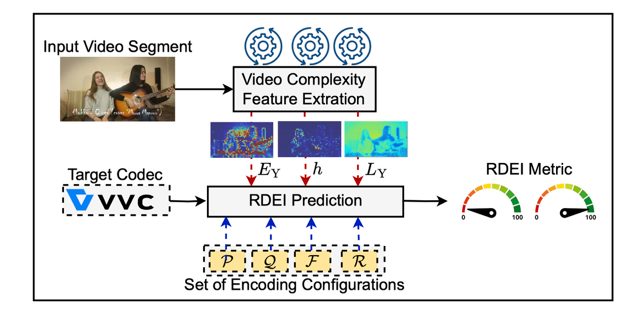

- Video compression and efficient transmission for or using multimodal data

- Deep learning-based video enhancement and super-resolution

- Multimodal action and activity recognition

- Audio-visual and text-video fusion methods

- Video quality assessment with multimodal cues

- Video captioning and summarization using multimodal data

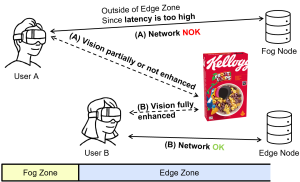

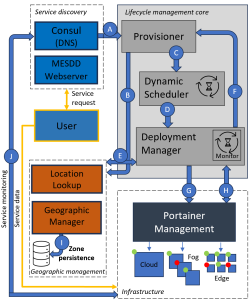

- Real-time multimodal video processing frameworks

- Explainability and interpretability in multimodal video models

- Applications in surveillance, healthcare, and autonomous systems

Guest editors:

Wei Zhou, PhD

Cardiff University, Cardiff, United Kingdom

Email: zhouw26@cardiff.ac.uk

Yakun Ju, PhD

University of Leicester, Leicester, United Kingdom

Email: yj174@leicester.ac.uk

Hadi Amirpour, PhD

University of Klagenfurt, Klagenfurt, Austria

Email: hadi.amirpour@aau.at

Bruce Lu, PhD

University of Western Australia, Perth, Australia

Email: bruce.lu@uwa.edu.au

Jun Liu, PhD

Lancaster University, Lancaster, United Kingdom

Email: j.liu81@lancaster.ac.uk

Important dates:

Submission Portal Open: April 4, 2025

Submission Deadline: October 30, 2025

Acceptance Deadline: May 30, 2026

Keywords:

Multimodal video analysis, video understanding, deep learning, video quality assessment, action recognition, real-time video processing, audio-visual learning, text-video processing