We are happy to announce that Dr. Hermann Hellwagner has received the appreciation award of Carinthia in the area of natural/technical sciences. Congratulations!

16th International Conference on Signal Image Technology & Internet based Systems – Dijon, France – October 19-21, 2022

Babak Taraghi (Alpen-Adria-Universität Klagenfurt, Austria), Selina Zoë Haack (Alpen-Adria-Universität Klagenfurt, Austria), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: HTTP Adaptive Streaming (HAS) is nowadays a popular solution for multimedia delivery. The novelty of HAS lies in the possibility of continuously adapting the streaming session to current network conditions, facilitated by Adaptive Bitrate (ABR) algorithms. Various popular streaming and Video on Demand services such as Netflix, Amazon Prime Video, and Twitch use this method. Given this broad consumer base, ABR algorithms are continuously improved to increase user satisfaction. The insights for these improvements are, among others, gathered within the research area of Quality of Experience (QoE). Within this field, various researchers have dedicated their work to identifying potential impairments and testing their impact on viewers’ QoE. Two frequently discussed visual impairments influencing QoE are stalling events and quality switches. So far, it has commonly been assumed that stalling events have the worst impact on QoE. This paper challenges this assumption by comparing stalling events with multiple quality switches and with high-amplitude quality switches. Two subjective studies were conducted. During the first subjective study, participants received a monetary incentive, while the second subjective study was carried out with volunteers. The statistical analysis demonstrated that stalling events do not result in the worst degradation of QoE. These findings suggest that a reevaluation of the effect of stalling events in QoE research is needed. Therefore, these findings may be used for further research and to improve current adaptation strategies in ABR algorithms.
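To make the terms in the abstract concrete, here is a minimal sketch of a throughput-based ABR rule and a counter for quality switches. It is an illustration only and not the method evaluated in the paper: the bitrate ladder, the safety factor, and the function names `select_bitrate` and `count_quality_switches` are all assumptions made for this example.

```python
from typing import Sequence


def select_bitrate(ladder_kbps: Sequence[int],
                   throughput_kbps: float,
                   safety: float = 0.8) -> int:
    """Pick the highest bitrate that fits within a fraction of the measured throughput.

    Falls back to the lowest rung when nothing fits, which is the situation
    in which a real player would be at risk of stalling.
    """
    if not ladder_kbps:
        raise ValueError("bitrate ladder must not be empty")
    budget = safety * throughput_kbps
    candidates = [b for b in ladder_kbps if b <= budget]
    return max(candidates) if candidates else min(ladder_kbps)


def count_quality_switches(selected_kbps: Sequence[int]) -> int:
    """Count how often the selected bitrate changes between consecutive segments."""
    return sum(1 for prev, cur in zip(selected_kbps, selected_kbps[1:]) if prev != cur)


# Hypothetical bitrate ladder and per-segment throughput samples (kbps).
LADDER = [300, 750, 1500, 3000, 6000]
throughputs = [4000, 3900, 900, 1000, 8000]

choices = [select_bitrate(LADDER, t) for t in throughputs]
print(choices)
print(count_quality_switches(choices))
```

In this toy trace the drop from 3000 kbps to 300 kbps is a high-amplitude switch, while the sequence as a whole contains multiple switches. These are the two kinds of impairment the study compares against stalling.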

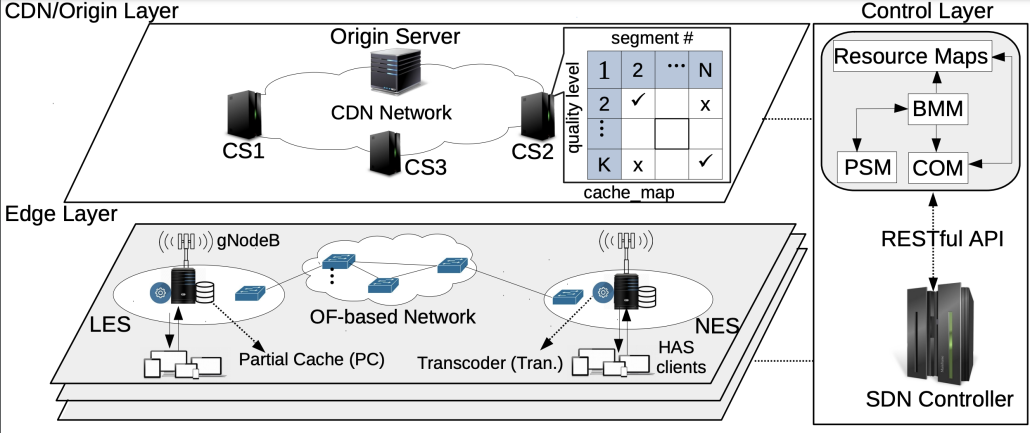

Authors: Reza Farahani (Alpen-Adria-Universität Klagenfurt, Austria), Mohammad Shojafar (University of Surrey, UK), Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt, Austria), Mohammad Ghanbari (University of Essex, UK), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: With the ever-increasing demands for high-definition and low-latency video streaming applications, network-assisted video streaming schemes have become a promising complementary solution in the HTTP Adaptive Streaming (HAS) context to improve users’ Quality of Experience (QoE) as well as network utilization. Edge computing is considered one of the leading networking paradigms for designing such systems by providing video processing and caching close to the end-users. Despite the wide usage of this technology, designing network-assisted HAS architectures that support low-latency and high-quality video streaming, including edge collaboration, is still a challenge. To address these issues, this article leverages the Software-Defined Networking (SDN), Network Function Virtualization (NFV), and edge computing paradigms to propose A collaboRative edge-Assisted framewoRk for HTTP Adaptive video sTreaming (ARARAT). Aiming at minimizing HAS clients’ serving time and network cost, while considering available resources and all possible serving actions, we design a multi-layer architecture and formulate the problem as a centralized optimization model executed by the SDN controller. However, to cope with the high time complexity of the centralized model, we introduce three heuristic approaches that produce near-optimal solutions through efficient collaboration between the SDN controller and edge servers. Finally, we implement the ARARAT framework, conduct our experiments on a large-scale cloud-based testbed including 250 HAS players, and compare its effectiveness with state-of-the-art systems within comprehensive scenarios. The experimental results illustrate that the proposed ARARAT methods (i) improve users’ QoE by at least 47%, (ii) decrease the streaming cost, including bandwidth and computational costs, by at least 47%, and (iii) enhance network utilization by at least 48%, compared to state-of-the-art approaches.

27th September 2022 | Rennes, France

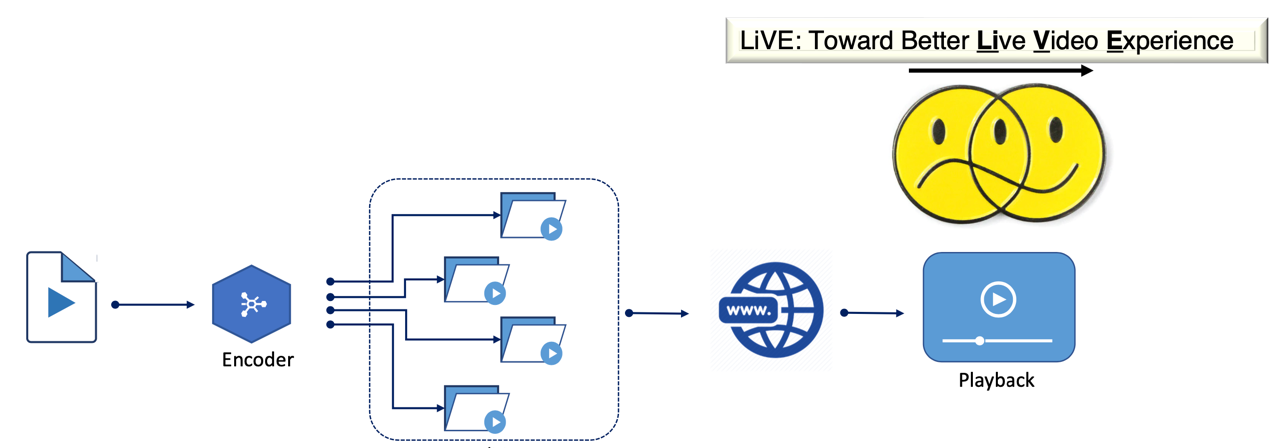

Abstract: In this presentation, we first introduce the principles of video streaming and the existing challenges. While live video streaming is expected to continue growing at an accelerated pace, one potential area for optimization that has remained relatively untapped, owing to the need to avoid added latency, is the use of content-aware encoding to improve the quality of live contribution streams. In this talk, we introduce revolutionary real-time content-aware video quality improvement methods for live applications that keep the added latency very low.

Hadi Amirpour is a postdoctoral researcher at the University of Klagenfurt. He received his B.Sc. degrees in Electrical and Biomedical Engineering, and he pursued his M.Sc. in Electrical Engineering. He earned his Ph.D. in computer science from the University of Klagenfurt in 2022. He was involved in the project EmergIMG, a Portuguese consortium on emerging imaging technologies, funded by the Portuguese funding agency and H2020. Currently, he is working on the ATHENA project in cooperation with its industry partner Bitmovin. His research interests are image processing and compression, video processing and compression, quality of assessment, emerging 3D imaging technology, and medical image analysis.

The “Game Studies and Engineering” master’s program has been offered at the University of Klagenfurt for five years. It’s much more about technical skills, a critical understanding of the influence of games on society “as well as the courage and creativity to use this knowledge and experience in your own innovative ways for yourself and for society,” says program director Felix Schniz.

Carinthian newspaper “Kleine Zeitung” interviewed current and former ITEC team members about how games will change in the future. Read the whole article here about former “Octopus project” colleagues Fabian and Daniela from “Dirty Paws Studio” and Sebastian’s “A Webbing Journey” (German only).

Title: CardioHPC: Serverless Approaches for Real-Time Heart Monitoring of Thousands of Patients

Authors: Marjan Gusev, Sashko Ristov, Andrei Amza, Armin Hohenegger, Radu Prodan, Dimitar Mileski, Pano Gushev, Goran Temelkov

17th Workshop on Workflows in Support of Large-Scale Science

Abstract: We analyze a heart monitoring center for patients wearing electrocardiogram sensors outside hospitals. This prevents serious heart damage and increases life expectancy and health-care efficiency. In this paper, we address the problem of providing a scalable infrastructure for the real-time processing scenario for at least 10000 patients simultaneously, and an efficient, fast processing architecture for the postponed scenario, in which patients upload data after the measurements have been completed. CardioHPC is a project to realize a simulation of these two scenarios using digital signal processing algorithms and artificial intelligence-based detection and classification software for automated reporting and alerting. We elaborate on the challenges we met in experimenting with different serverless implementations: 1) container-based on Google Cloud Run, and 2) Function-as-a-Service (FaaS) on AWS Lambda. Experimental results present the effect of overhead in the request and transfer time, and the speedup achieved, by analyzing the response time and throughput on both container-based and FaaS implementations as serverless workflows.

Title: SimLess: Simulate Serverless Workflows and Their Twins and Siblings in Federated FaaS

Authors: Sashko Ristov, Mika Hautz, Christian Hollaus, Radu Prodan

2022 ACM Symposium on Cloud Computing

Abstract: Many researchers migrate scientific serverless workflows or function choreographies (FC) to Function-as-a-Service (FaaS) to benefit from its high scalability and elasticity. Unfortunately, the heterogeneous nature of federated FaaS hampers decisions on the most appropriate configuration setup to run FCs. Consequently, scientists must choose between accurate but tedious and expensive experiments or simple and cheap but less accurate simulations. Unfortunately, related work mainly supports either simulation models for serverfull workflow applications that run on virtual machines and containers or partial FaaS models for individual serverless functions that focus on execution time and neglect various kinds of federated FaaS overheads. Therefore, this paper introduces SimLess, an FC simulation framework across multiple FaaS providers to achieve accurate FC simulations with a simple and cheap parameter setup. Unlike the costly approaches that use machine learning over time series to predict the behavior of FCs, SimLess introduces two light concepts: (1) twins, representing the same code deployed with the same computing, communication, and storage resources, but in other cloud regions of the same FaaS provider, and (2) siblings, representing the same code deployed in the same region with different computing resources. The novel SimLess simulation model splits the round trip time of a function into several parameters reused among twins and siblings without running them. We evaluated SimLess with two scientific FCs deployed across 18 AWS, Google, and IBM regions. SimLess simulates the cumulative overhead with an average inaccuracy of 8.9% without significant differences between regions for learning and validation. Moreover, SimLess generates an inaccuracy of up to 9.75% for a low concurrency FC executed on a single region, with high concurrency of 2500 functions executed in other regions. Finally, SimLess reduces the parameter setup cost by 77.23% compared to the existing simulation approaches.

The project partners reunited at @itecmmc for a final project review. Thank you, Horizon2020 @EU_Commission; it has been an honour to collaborate for the Future Hyper-connected Sociality.

ARTICONF project review: @UvA_Amsterdam, @mscdigsoc, @UOhrid, @MOGTechnologies, @AgiliaCenter, @vialog_io, @bitYogaAS, @itec

Student travel award at IEEE Cluster 2022

Narges Mehran received the student travel award for presenting the paper titled “Matching-based Scheduling of Asynchronous Data Processing Workflows on the Computing Continuum” at IEEE Cluster 2022.

The presentation was on the 7th of September: https://clustercomp.org/2022/program/

IEEE Cloud Summit 2022, https://www.ieeecloudsummit.org/

Authors: Radu Prodan, Dragi Kimovski, Andrea Bartolini, Michael Cochez, Alexandru Iosup, Evgeny Kharlamov, Joze Rozanec, Laurentiu Vasiliu, Ana Lucia Varbanescu

Abstract: The Graph-Massivizer project, funded by the Horizon Europe research and innovation program, researches and develops a high-performance, scalable, and sustainable platform for information processing and reasoning based on the massive graph (MG) representation of extreme data. It delivers a toolkit of five open-source software tools and FAIR graph datasets covering the sustainable lifecycle of processing extreme data as MGs. The tools focus on holistic usability (from extreme data ingestion and MG creation), automated intelligence (through analytics and reasoning), performance modelling, and environmental sustainability tradeoffs, supported by credible data-driven evidence across the computing continuum. The automated operation based on the emerging serverless computing paradigm supports experienced and novice stakeholders from a broad group of large and small organisations to capitalise on extreme data through MG programming and processing.

Graph-Massivizer validates its innovation on four complementary use cases considering their extreme data properties and coverage of the three sustainability pillars (economy, society, and environment): sustainable green finance, global environment protection foresight, green AI for the sustainable automotive industry, and data centre digital twin for exascale computing. Graph-Massivizer promises 70% more efficient analytics than AliGraph, and 30% improved energy awareness for ETL storage operations than Amazon Redshift. Furthermore, it aims to demonstrate a possible two-fold improvement in data centre energy efficiency and over 25% lower greenhouse gas emissions for basic graph operations.