ACM MMSys 2024, Bari, Italy, Apr. 15-18, 2024

Authors: Emanuele Artioli (Alpen-Adria-Universität Klagenfurt, Austria), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt, Austria), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

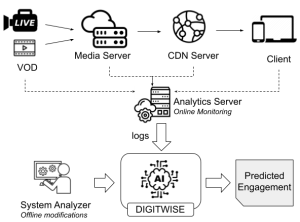

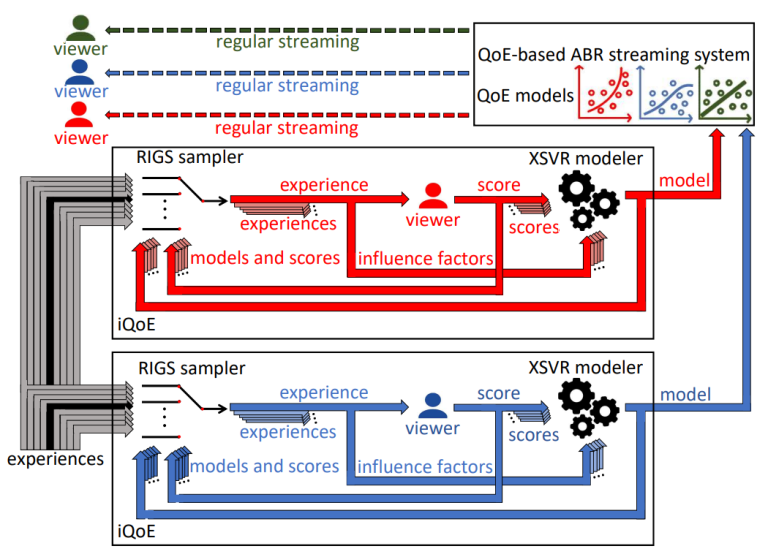

Abstract: As the popularity of video streaming entertainment continues to grow, understanding how users engage with the content and react to its changes becomes a critical success factor for every stakeholder. User engagement, i.e., the percentage of video the user watches before quitting, is central to customer loyalty, content personalization, ad relevance, and A/B testing. This paper presents DIGITWISE, a digital twin-based approach for modeling adaptive video streaming engagement. Traditional adaptive bitrate (ABR) algorithms assume that all users react similarly to video streaming artifacts and network issues, neglecting individual user sensitivities. DIGITWISE leverages the concept of a digital twin, a digital replica of a physical entity, to model user engagement based on past viewing sessions. The digital twin receives input about streaming events and uses supervised machine learning to predict user engagement for a given session. The system model consists of a data processing pipeline, machine learning models acting as digital twins, and a unified model to predict engagement. DIGITWISE employs XGBoost for both the digital twins and the unified model. The proposed architecture demonstrates the importance of personal user sensitivities, reducing user engagement prediction error by up to 5.8% compared to non-user-aware models. Furthermore, DIGITWISE can optimize content provisioning and delivery by identifying the features that maximize engagement, providing an average engagement increase of up to 8.6%.

Keywords: digital twin, user engagement, xgboost
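
To make the abstract's definitions concrete, here is a minimal sketch of the engagement metric (percentage of video watched before quitting) and of the difference between a single non-user-aware model and per-user digital twins. It is not the paper's implementation: the paper uses XGBoost trained on streaming events, whereas this sketch swaps in a trivial mean predictor purely to show the structure. The session data, the function names (`engagement`, `fit_unified`, `fit_twins`), and the capping of watch time at the video duration are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean


def engagement(watched_s: float, duration_s: float) -> float:
    """Engagement as the percentage of the video watched before quitting."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    # Assumption: watch time is capped at the video duration.
    return 100.0 * min(watched_s, duration_s) / duration_s


# Hypothetical past sessions: (user_id, watched seconds, video duration seconds).
sessions = [
    ("u1", 90, 100), ("u1", 80, 100),
    ("u2", 20, 100), ("u2", 30, 100),
]


def fit_unified(sessions):
    """Non-user-aware baseline: one mean engagement shared by all users."""
    return mean(engagement(w, d) for _, w, d in sessions)


def fit_twins(sessions):
    """One stand-in 'twin' per user: that user's mean past engagement.

    The paper trains an XGBoost model per user instead of taking a mean.
    """
    per_user = defaultdict(list)
    for user, w, d in sessions:
        per_user[user].append(engagement(w, d))
    return {user: mean(vals) for user, vals in per_user.items()}


unified = fit_unified(sessions)
twins = fit_twins(sessions)
```

In this toy data the shared baseline predicts the same value for both users, while the per-user stand-ins track each user's own history, which is the intuition behind modeling individual sensitivities.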