Magdalena participated in the “Game Over?” conference, held from November 14th to 16th under the theme “Dystopia x Utopia x Video Games.” Together with Iris van der Horst (MEd), she presented the talk “From Oppression to Liberation: Postcolonial Perspectives on the Dystopian World of Xenoblade Chronicles 3”, which examined how the game displays a critical dystopia variant 1 and how it criticizes colonial structures by magnifying the abstract concepts of third space and contact zone and depicting them in a concrete and creative way. The presentation thus combined theories of utopianism and postcolonialism.

Authors: Narges Mehran, Zahra Najafabadi Samani, Samira Afzal, Radu Prodan, Frank Pallas and Peter Dorfinger

Event: The 40th ACM/SIGAPP Symposium on Applied Computing https://www.sigapp.org/sac/sac2025/

Abstract:

Asynchronous data exchange patterns have recently grown in popularity: an analysis of Alibaba traces shows that 23% of the communication between microservices uses them. Such workloads call for methods that reduce their dataflow processing and completion times, as well as prediction methods that forecast the future requirements of these microservices in order to (re-)schedule them. We therefore investigate prediction-based scheduling of asynchronous dataflow processing applications, considering the stochastic changes caused by dynamic user requirements.

We present PreMatch, a microservice scaling and scheduling method that combines a gradient-boosting machine learning prediction strategy with ranking and game-theoretic matching principles. First, PreMatch predicts the number of microservice replicas; the ranking method then orders the microservice replicas and devices based on microservice and transmission times. Finally, PreMatch schedules the microservice replicas that require dataflow processing on computing devices. Experimental analysis shows that PreMatch achieves on average 13% lower completion times than a related prediction-based scheduling method.
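The abstract does not spell out the matching step, so the following is only a minimal sketch of one classic game-theoretic matching technique, many-to-one deferred acceptance (Gale-Shapley), applied to replicas and devices. The `Replica` and `Device` records, the `transmission_time` table, the device capacities, and the two preference rules (replicas rank devices by transmission time, devices rank replicas by processing time) are hypothetical assumptions, not PreMatch's actual definitions. The gradient-boosting prediction step is omitted: the replica count is taken as given.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Replica:
    name: str
    processing_time: float  # hypothetical processing time in seconds


@dataclass(frozen=True)
class Device:
    name: str
    capacity: int  # hypothetical number of replica slots


def deferred_acceptance(replicas: List[Replica], devices: List[Device],
                        transmission_time: Dict[Tuple[str, str], float]
                        ) -> Dict[str, List[str]]:
    """Many-to-one Gale-Shapley matching of replicas (proposers) to devices."""
    # Ranking step (assumed): each replica orders devices by ascending transmission time.
    prefs = {
        r.name: sorted(devices, key=lambda d: transmission_time[(r.name, d.name)])
        for r in replicas
    }
    by_name = {r.name: r for r in replicas}
    next_choice = {r.name: 0 for r in replicas}
    held: Dict[str, List[str]] = {d.name: [] for d in devices}
    free = [r.name for r in replicas]

    while free:
        name = free.pop(0)
        if next_choice[name] >= len(devices):
            continue  # every device rejected this replica; it stays unscheduled
        device = prefs[name][next_choice[name]]
        next_choice[name] += 1
        held[device.name].append(name)
        # Each device tentatively keeps the replicas with the shortest processing times.
        held[device.name].sort(key=lambda n: by_name[n].processing_time)
        if len(held[device.name]) > device.capacity:
            free.append(held[device.name].pop())  # reject the lowest-ranked replica
    return {d: sorted(names) for d, names in held.items()}


if __name__ == "__main__":
    replicas = [Replica("r1", 2.0), Replica("r2", 1.0), Replica("r3", 3.0)]
    devices = [Device("edge", 1), Device("cloud", 2)]
    tt = {("r1", "edge"): 1, ("r1", "cloud"): 5, ("r2", "edge"): 1,
          ("r2", "cloud"): 4, ("r3", "edge"): 2, ("r3", "cloud"): 3}
    print(deferred_acceptance(replicas, devices, tt))
```

In this example all three replicas first propose to the single-slot edge device; `r2`, the replica with the shortest processing time, keeps that slot, while `r1` and `r3` fall back to the cloud. Deferred acceptance yields a stable assignment: no replica and device would both prefer each other over their current match.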

Scalable Per-Title Encoding – US Patent


Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria) and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: A scalable per-title encoding technique may include detecting scene cuts in an input video received by an encoding network or system, generating segments of the input video, performing per-title encoding of a segment of the input video, training a deep neural network (DNN) for each representation of the segment, thereby generating a trained DNN, compressing the trained DNN, thereby generating a compressed trained DNN, and generating an enhanced bitrate ladder including metadata comprising the compressed trained DNN. In some embodiments, the method may also include generating a base layer bitrate ladder for CPU devices and providing the enhanced bitrate ladder for GPU-available devices.
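As a rough illustration of the data flow described in the abstract, the sketch below detects scene cuts, splits the video into segments, attaches a compressed DNN to each representation of a ladder, and picks the base or enhanced ladder depending on whether the client has a GPU. Every detail is an assumption for illustration: the mean-luma threshold stands in for real scene-cut detection, `zlib` stands in for actual DNN compression (such as pruning or quantization), trained DNNs are treated as opaque bytes keyed by bitrate, and the per-title encoding that would produce the base ladder is not shown.

```python
import zlib
from dataclasses import dataclass
from typing import Dict, List, Tuple


def detect_scene_cuts(frame_means: List[float], threshold: float = 30.0) -> List[int]:
    """Return indices of frames that start a new scene (frame 0 always does).

    Simplified stand-in: a cut is declared when the mean luma of consecutive
    frames differs by more than `threshold`.
    """
    cuts = [0]
    for i in range(1, len(frame_means)):
        if abs(frame_means[i] - frame_means[i - 1]) > threshold:
            cuts.append(i)
    return cuts


def split_segments(num_frames: int, cuts: List[int]) -> List[Tuple[int, int]]:
    """Turn cut positions into half-open [start, end) frame ranges."""
    bounds = cuts + [num_frames]
    return [(bounds[i], bounds[i + 1]) for i in range(len(cuts))]


@dataclass
class Representation:
    bitrate_kbps: int
    resolution: str
    dnn_blob: bytes = b""  # compressed enhancement DNN; empty in the base layer


def build_enhanced_ladder(base: List[Representation],
                          trained_dnns: Dict[int, bytes]) -> List[Representation]:
    """Attach a compressed DNN (keyed by bitrate) to every representation."""
    return [
        Representation(r.bitrate_kbps, r.resolution,
                       zlib.compress(trained_dnns[r.bitrate_kbps]))
        for r in base
    ]


def select_ladder(has_gpu: bool, base: List[Representation],
                  enhanced: List[Representation]) -> List[Representation]:
    """CPU-only clients get the base ladder; GPU clients get the DNN metadata."""
    return enhanced if has_gpu else base
```

Keeping the DNN as metadata on the ladder, rather than as a separate stream, means a CPU-only client can simply ignore it and play the base layer unchanged.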

Authors: Tom Tucek, Kseniia Harshina, Georgia Samaritaki (University of Amsterdam), and Dipika Rajesh (University of California, Santa Cruz)

Abstract:

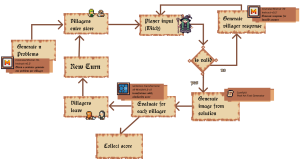

This paper presents “One Spell Fits All”, an AI-native game prototype where the player, playing as a witch, solves villagers’ problems using magical conjurations. We show how, beyond being a standalone game, “One Spell Fits All” could serve as a research platform to explore several key areas in AI-driven and AI-native game design. These areas include AI creativity, user experience in predominantly AI-generated content, and the energy efficiency of locally running versus cloud-based AI models. By leveraging smaller, locally running generative AI models, including LLMs and diffusion models for image generation, the game dynamically generates and evaluates content without the need for external APIs or internet access, offering a sustainable and responsive gameplay experience. This paper explores the application of LLMs in narrative video games, outlines the prototype’s design and mechanics, and proposes future research opportunities that can be explored using the game as a platform.

EXAG ’24: Experimental AI in Games Workshop at the AIIDE Conference, November 18, 2024, Lexington, USA

Authors: Kseniia Harshina, Imke Alenka Harbig, Mathias Lux, and Tom Tucek

Abstract: Forced migration affects millions worldwide due to conflict, disasters, and persecution. This paper presents a participatory approach to serious game development, engaging individuals with lived migration experiences to create authentic and impactful narratives. Based on qualitative research, we propose a game design methodology to enhance empathy and raise awareness of forced migration. Our study demonstrates the potential of participatory games to bridge the gap between forced migrants and the broader public, fostering empathy, social change, and empowerment for marginalized communities.

The GALA Conference 2024 proceedings will be published in Springer Lecture Notes in Computer Science. Kseniia and Imke Harbig from the psychology department will present their research at the GALA conference in Berlin from November 20 to 22.

Kseniia and Tom participated in the annual symposium CHI PLAY (Computer-Human Interaction in Play), held from October 14 to 17 (online).

They both took part in the doctoral consortium, where they presented their works and built connections with fellow doctoral students and experienced researchers in the field.

Kseniia presented her topic, “Developing a Virtual-Reality Game for Empathy Enhancement and Perspective-Taking in the Context of Forced Migration Experiences”, and Tom presented his topic, “Enhancing Empathy Through Personalized AI-Driven Experiences and Conversations with Digital Humans in Video Games”.

The proceedings are published in the Companion Proceedings of the Annual Symposium on Computer-Human Interaction in Play (CHI PLAY Companion ’24), October 14–17, 2024, Tampere, Finland.

Kseniia participated in the annual FROG conference from 11 to 13 October, whose theme was “Gaming the Apocalypse”. Her talk, “Unraveling the Romanticization of Colonial, Imperial and Authoritarian Narratives in Modern Video Games”, showcased a trend in video games to “cutefy” serious topics such as historical power dynamics through aesthetics, and explained the potential issues this phenomenon causes.

Her talk can be seen on YouTube (https://www.youtube.com/watch?v=37-sqVJrMoY), and proceedings will follow in the summer of 2025.

Radu Prodan has been invited to give a keynote talk at the 21st EAI International Conference on Mobile and Ubiquitous Systems (MobiQuitous 2024), which will be held from November 12 to 14, 2024, in Oslo, Norway.

Abstract:

The presentation starts by reviewing the convergence of two historically disconnected AI branches: symbolic AI, based on explicit deductive reasoning, which offers mathematical rigor and interpretability, and connectionist AI, based on implicit inductive reasoning, which lacks readability and explainability.

It then makes the case for neuro-symbolic data processing using knowledge graph representations, as pursued by the Graph-Massivizer Horizon Europe project and applied to anomaly prediction in data centers with graph neural networks. Machine learning-driven graph sampling algorithms support training and inference on resource-constrained Edge devices. The presentation concludes with an outlook on future projects targeting Edge large language models that are fine-tuned and contextualized using symbolic knowledge representation for regulatory AI compliance, the job market, and medicine.
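The talk abstract mentions machine learning-driven graph sampling but does not describe the samplers. For orientation only, the sketch below shows a conventional, non-learned baseline, random-walk sampling with restart, which shrinks a large graph to a small connected subgraph that is cheaper to process on a constrained device. The adjacency-list format, `restart_prob`, and the node budget are illustrative assumptions; a learned sampler might, for example, replace the uniform neighbour choice with a model-guided one.

```python
import random
from typing import Dict, Hashable, List

Graph = Dict[Hashable, List[Hashable]]


def random_walk_sample(adj: Graph, start: Hashable, target_nodes: int,
                       restart_prob: float = 0.15, seed: int = 0,
                       max_steps: int = 10_000) -> Graph:
    """Sample a connected subgraph around `start` via random walk with restart.

    `adj` maps each node to its neighbours (undirected graph assumed).
    Returns the subgraph induced by the visited nodes.
    """
    rng = random.Random(seed)
    visited = {start}
    current = start
    for _ in range(max_steps):
        if len(visited) >= target_nodes:
            break
        # Occasionally jump back to the seed node so the sample stays local;
        # also restart when the walk reaches a node without neighbours.
        if rng.random() < restart_prob or not adj[current]:
            current = start
            continue
        current = rng.choice(adj[current])
        visited.add(current)
    # Induced subgraph: keep only edges whose endpoints were both visited.
    return {u: [v for v in adj[u] if v in visited] for u in visited}
```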

Authors: Jing Yang, Qinghua Ni, Song Zhang, Nan Zheng, Juanjuan Li, Lili Fan, Radu Prodan, Levente Kovacs, Fei-Yue Wang

IEEE Transactions on Intelligent Vehicles: https://ieeexplore.ieee.org/document/10734076/

Abstract: The wireless device explosions in Lebanon, which involved pagers, walkie-talkies, and other common devices, have drawn widespread attention. The incident revealed security vulnerabilities across global supply chains, from raw material manufacturing and distribution to the use of devices and equipment, signaling the onset of a new wave of “supply chain warfare”. Worse, with the rapid proliferation of Internet of Things (IoT) devices and smart hardware, the fragility of global supply chains could become increasingly dangerous, since almost any everyday device could be maliciously programmed and triggered as a weapon capable of massive destruction. New thinking and new approaches are therefore needed to improve supply chain security [3]. With its decentralized, tamper-proof, and highly traceable characteristics, blockchain technology is considered an effective solution to these security threats [4], [5]. Securing the entire lifecycle of smart devices, from production and transportation to usage, through blockchain-enabled safety management and protection has become a pressing issue that requires immediate attention.
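To make the tamper-evidence argument concrete, the sketch below records hypothetical lifecycle events of a single device in a SHA-256 hash chain, the linked data structure at the core of a blockchain, and verifies it. It deliberately leaves out everything that makes a blockchain decentralized (consensus, replication across nodes, digital signatures), and the event schema (`device`, `stage`) is invented for illustration.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List

GENESIS = "0" * 64  # placeholder "previous digest" for the first block


@dataclass(frozen=True)
class Block:
    index: int
    event: dict  # hypothetical schema, e.g. {"device": ..., "stage": ...}
    prev_digest: str
    digest: str


def compute_digest(index: int, event: dict, prev_digest: str) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    payload = json.dumps({"i": index, "e": event, "p": prev_digest}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(chain: List[Block], event: dict) -> None:
    """Link a new lifecycle event to the digest of the previous block."""
    prev = chain[-1].digest if chain else GENESIS
    index = len(chain)
    chain.append(Block(index, event, prev, compute_digest(index, event, prev)))


def verify(chain: List[Block]) -> bool:
    """Recompute every digest; editing any earlier event breaks the chain."""
    prev = GENESIS
    for i, block in enumerate(chain):
        if (block.index != i or block.prev_digest != prev
                or block.digest != compute_digest(block.index, block.event, block.prev_digest)):
            return False
        prev = block.digest
    return True
```

Changing a recorded event after the fact makes `verify` return `False`, because the stored digest no longer matches the recomputed one. A forger would have to rewrite every later block as well, which is what consensus among many independent nodes is meant to prevent in a real blockchain.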

Authors: Leonardo Peroni (UC3M, Spain); Sergey Gorinsky (IMDEA Networks Institute, Spain); Farzad Tashtarian (Alpen-Adria Universität Klagenfurt, Austria)

Conference: IEEE 13th International Conference on Cloud Networking (CloudNet)

27–29 November 2024 // Rio de Janeiro, Brazil

Abstract: While ISPs (Internet service providers) strive to improve QoE (quality of experience) for end users, end-to-end traffic encryption by OTT (over-the-top) providers undermines independent inference of QoE by an ISP. Due to the economic and technological complexity of the modern Internet, ISP-side QoE inference based on OTT assistance or out-of-band signaling sees low adoption. This paper presents IQN (in-band quality notification), a novel mechanism for signaling QoE impairments from an automated agent on the end-user device to the server-to-client ISP responsible for QoE-impairing congestion. Compatible with multi-ISP paths, asymmetric routing, and other Internet realities, IQN does not require OTT support and induces the OTT server to emit distinctive packet patterns that encode QoE information, enabling ISPs to infer QoE by monitoring these patterns in network traffic. We develop a prototype system, YouStall, which applies IQN signaling to ISP-side inference of YouTube stalls.

Cloud-based experiments with YouStall on YouTube Live streams validate IQN’s feasibility and effectiveness, demonstrating its potential for accurate user-assisted ISP-side QoE inference from encrypted traffic in real Internet environments.
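The abstract does not describe how IQN encodes QoE information in packet patterns, so the following is only a toy illustration of the general idea: a client-side agent spaces its requests so that the server's response bursts follow a known timing pattern, and an ISP-side monitor detects that pattern from packet timestamps alone, without access to encrypted payloads. The signature, the idle gap used to split bursts, and the tolerance are hypothetical values, and this is not the encoding used by IQN or YouStall.

```python
from typing import Iterable, List, Sequence

# Hypothetical signature: spacing (ms) between the starts of consecutive
# server bursts that the client agent induces to report "stall detected".
STALL_SIGNATURE_MS = (200.0, 400.0, 200.0)


def request_schedule(start_ms: float,
                     signature: Sequence[float] = STALL_SIGNATURE_MS) -> List[float]:
    """Client side: when to issue requests so the responses follow the signature."""
    times = [start_ms]
    for gap in signature:
        times.append(times[-1] + gap)
    return times


def burst_starts(timestamps_ms: Iterable[float], idle_gap_ms: float = 50.0) -> List[float]:
    """ISP side: a new burst begins after more than `idle_gap_ms` of silence."""
    starts: List[float] = []
    prev = None
    for t in sorted(timestamps_ms):
        if prev is None or t - prev > idle_gap_ms:
            starts.append(t)
        prev = t
    return starts


def contains_signature(timestamps_ms: Iterable[float],
                       signature: Sequence[float] = STALL_SIGNATURE_MS,
                       tolerance_ms: float = 30.0) -> bool:
    """ISP side: search packet timing (no payload access) for the signature."""
    starts = burst_starts(timestamps_ms)
    gaps = [b - a for a, b in zip(starts, starts[1:])]
    n = len(signature)
    for i in range(len(gaps) - n + 1):
        if all(abs(g - s) <= tolerance_ms for g, s in zip(gaps[i:i + n], signature)):
            return True
    return False
```

The tolerance matters because, on a real path, network delay and congestion would jitter the server's burst timing away from the exact pattern the agent requested.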