Authors: Haichao Yao (Beijing Jiaotong University), Rongrong Ni (Beijing Jiaotong University), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), Yao Zhao (Beijing Jiaotong University).

Multimedia Communication

14th – 17th June 2022 | Athlone, Ireland.

The ACM Multimedia Systems Conference (MMSys) provides a forum for researchers to present and share their latest research findings in multimedia systems. While research about specific aspects of multimedia systems is regularly published in the various proceedings and transactions of the networking, operating systems, real-time systems, databases, mobile computing, distributed systems, computer vision, and middleware communities, MMSys aims to cut across these domains in the context of multimedia data types.

This year, MMSys hosted around 150 on-site participants from academia and industry. Five ATHENA members travelled to Athlone, Ireland, to present four papers by Reza Farahani, Babak Taraghi, and Vignesh V Menon in two tracks, namely the Open Dataset & Software Track and the Demo & Industry Track.

Moreover, ATHENA postdocs gave two presentations at the Mentoring & Postdoc Networking event.

We presented the poster “The Power in Your Pocket: Boosting Video Quality with Super-Resolution on Mobile Devices” at the Austrian Computer Science Day 2022 conference. The poster summarizes research on improving the visual quality of video streaming on mobile devices by utilizing deep neural network-based enhancement techniques.

Here is the list of papers that we cover in the poster:

This year’s “Lange Nacht der Forschung” (LNDF) took place on May 20, 2022. The LNDF is Austria’s most important national research event for presenting research achievements to the general public. ITEC was represented at three stations and was also involved in the Computer Games and Engineering station. It was a fantastic experience for everyone! We tried to make our research easily understandable for all visitors.

Authors: Josef Hammer and Hermann Hellwagner, Alpen-Adria-Universität Klagenfurt

Abstract: Multi-access Edge Computing (MEC) is a central piece of 5G telecommunication systems and is essential to satisfy the challenging low-latency demands of future applications. MEC provides a cloud computing platform at the edge of the radio access network that developers can utilize for their applications. In [1], we argued that edge computing should be transparent to clients and introduced a solution to that end. This paper presents how to efficiently implement such a transparent approach by leveraging Software-Defined Networking. For high performance and scalability, our architecture focuses on three aspects: (i) a modular architecture that can easily be distributed onto multiple switches/controllers, (ii) multiple filter stages to avoid screening traffic not intended for the edge, and (iii) several strategies to keep the number of flows low, making the best use of the precious flow table memory in hardware switches. We also present a performance evaluation with results from a real edge/fog testbed.

Keywords: 5G, Multi-Access Edge Computing, MEC, Patricia Trie, SDN, Software-Defined Networking
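The abstract mentions multiple filter stages, and the keywords list a Patricia trie. The sketch below is a hypothetical illustration of how such a filter could decide whether a packet targets an edge-enabled service; it is not the authors' implementation. The port pre-filter, the `PrefixTrie` class, the `route` function and the example prefixes (taken from the 203.0.113.0/24 documentation range) are all assumptions. For brevity it uses an uncompressed binary trie; a Patricia trie collapses single-child chains into one edge but answers the same longest-prefix-match query.

```python
import ipaddress
from typing import Optional


class PrefixTrie:
    """Uncompressed binary trie over IPv4 prefixes (one node per address bit).

    A Patricia trie would merge single-child chains; lookup semantics
    (longest-prefix match) stay the same.
    """

    def __init__(self) -> None:
        self.root: dict = {}

    def insert(self, cidr: str, service: str) -> None:
        net = ipaddress.ip_network(cidr)
        bits = int(net.network_address)
        node = self.root
        for i in range(net.prefixlen):
            bit = (bits >> (31 - i)) & 1  # walk from the most significant bit
            node = node.setdefault(bit, {})
        node["service"] = service

    def lookup(self, addr: str) -> Optional[str]:
        """Return the service of the longest matching prefix, or None."""
        bits = int(ipaddress.ip_address(addr))
        node = self.root
        best = node.get("service")
        for i in range(32):
            bit = (bits >> (31 - i)) & 1
            if bit not in node:
                break
            node = node[bit]
            best = node.get("service", best)  # remember the deepest match so far
        return best


# Hypothetical registry: cloud addresses of services that also run at the edge.
EDGE_SERVICES = PrefixTrie()
EDGE_SERVICES.insert("203.0.113.0/24", "video-cache")
EDGE_SERVICES.insert("203.0.113.128/25", "ar-renderer")
EDGE_PORTS = {80, 443}  # hypothetical ports worth inspecting at all


def route(dst_ip: str, dst_port: int) -> str:
    # Stage 1: cheap port check, so most non-edge traffic skips the lookup.
    if dst_port not in EDGE_PORTS:
        return "forward-normally"
    # Stage 2: longest-prefix match on the destination address.
    service = EDGE_SERVICES.lookup(dst_ip)
    return f"redirect-to-edge:{service}" if service else "forward-normally"


print(route("203.0.113.200", 443))  # redirect-to-edge:ar-renderer
```

Ordering the stages from cheapest to most expensive mirrors the idea of not screening traffic that was never meant for the edge.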

The 13th ACM Multimedia Systems Conference (ACM MMSys 2022) Open Dataset and Software (ODS) track

June 14–17, 2022 | Athlone, Ireland

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Samira Afzal (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

Abstract: This paper provides an overview of the open Video Complexity Dataset (VCD), which comprises 500 Ultra High Definition (UHD) resolution test video sequences. These sequences are provided at 24 frames per second (fps) and stored online in losslessly encoded 8-bit 4:2:0 format. In this paper, all sequences are characterized by spatial and temporal complexities, rate-distortion complexity, and encoding complexity with the x264 AVC/H.264 and x265 HEVC/H.265 video encoders. The dataset is tailor-made for cutting-edge multimedia applications such as video streaming, two-pass encoding, per-title encoding, scene-cut detection, etc. Evaluations show that the dataset covers a diverse range of video complexities. Hence, this dataset is recommended for training and testing video coding applications. All data have been made publicly available as part of the dataset and can be used for various applications.

The details of VCD can be accessed online at https://vcd.itec.aau.at.
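To make the “8-bit 4:2:0” storage format concrete, here is a small sketch of the raw (uncompressed) data volume per frame and per second, which illustrates the storage cost that lossless compression reduces. It assumes UHD means 3840x2160 and planar YUV layout; the function name is hypothetical.

```python
def yuv420_frame_bytes(width: int, height: int, bit_depth: int = 8) -> int:
    """Size in bytes of one raw planar YUV 4:2:0 frame."""
    bytes_per_sample = (bit_depth + 7) // 8  # 8-bit -> 1 byte, 10-bit -> 2 bytes
    luma = width * height
    # 4:2:0 halves both chroma planes horizontally and vertically.
    chroma = 2 * (width // 2) * (height // 2)
    return (luma + chroma) * bytes_per_sample


# Assumption: UHD taken as 3840x2160, at the dataset's 24 fps.
frame = yuv420_frame_bytes(3840, 2160)  # 12,441,600 bytes per frame
per_second = frame * 24                 # 298,598,400 bytes, about 2.39 Gbit/s
print(frame, per_second)
```

In 4:2:0, each frame therefore carries 1.5 samples per pixel rather than 3, before any compression is applied.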

May 25, 2022, Edinburgh, UK

Empowered by today’s rich tools for media generation and collaborative production, and by convenient wireless access to the Internet (e.g., WiFi and cellular networks), crowdsourced live streaming over wireless networks has become very popular. However, crowdsourced wireless live streaming presents unique video delivery challenges that require a difficult tradeoff among three core factors: bandwidth, computation/storage, and latency.

Elsevier Computer Communications journal

Shirzad Shahryari (Ferdowsi University of Mashhad, Mashhad, Iran), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt), Seyed-Amin Hosseini-Seno (Ferdowsi University of Mashhad, Mashhad, Iran).

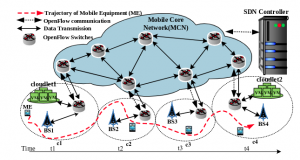

Abstract: Edge Cloud Computing (ECC) is a new approach for bringing Mobile Cloud Computing (MCC) services closer to mobile users in order to facilitate the execution of complex applications on resource-constrained mobile devices. The main objective of the ECC solution with the cloudlet approach is to mitigate latency and augment the available bandwidth. This is basically done by deploying servers (a.k.a. “cloudlets”) close to the user’s device at the edge of the cellular network. As user requests mount, the limited resources of a cloudlet will lead to resource shortages. This challenge, however, can be overcome by using a network of cloudlets that share their resources. On the other hand, when considering users’ mobility along with the limited resources of the cloudlets serving them, the user-cloudlet communication may need to go through multiple hops, which may seriously affect the communication delay between them and the quality of service (QoS).
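The multi-hop effect described above can be illustrated with a toy model; this is not the paper's method. Cloudlets are graph nodes, links are edges, and breadth-first search gives the fewest hops between the cloudlet a mobile user now attaches to and the cloudlet still serving it. The topology, the names and the constant 5 ms per-hop delay are hypothetical.

```python
from collections import deque
from typing import Dict, List, Optional


def hop_count(graph: Dict[str, List[str]], src: str, dst: str) -> Optional[int]:
    """Breadth-first search: fewest hops from src to dst, or None if unreachable."""
    if src == dst:
        return 0
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt == dst:
                return dist + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Hypothetical chain of cloudlets (undirected links listed in both directions).
CLOUDLETS = {
    "C1": ["C2"],
    "C2": ["C1", "C3"],
    "C3": ["C2", "C4"],
    "C4": ["C3"],
}
PER_HOP_MS = 5.0  # assumed constant per-hop delay, purely illustrative

# The user's tasks run on C1; after moving, the user attaches near C4.
hops = hop_count(CLOUDLETS, "C4", "C1")
print(hops, hops * PER_HOP_MS)  # 3 15.0
```

Under this simplistic additive model, every hop added by mobility adds delay, which is why the placement of tasks across the cloudlet network matters for QoS.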

Remote communication has become increasingly important, particularly since the COVID-19 crisis. However, bringing a more realistic visual experience requires more than the traditional two-dimensional (2D) interfaces we know today. Immersive media such as 360-degree video, light fields, point clouds, ultra-high-definition (UHD), and high dynamic range (HDR) content can fill this gap. These modalities, however, face several challenges from capture to display. Learning-based solutions show great promise and significant performance gains over traditional solutions in addressing these challenges. In this special session, we will focus on research works aimed at extending and improving the use of learning-based architectures for immersive imaging technologies.

Paper Submissions: 6th June, 2022

Paper Notifications: 11th July, 2022

2022 IEEE International Conference on Multimedia and Expo (ICME) Industry & Application Track

July 18-22, 2022 | Taipei, Taiwan

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Christian Feldmann (Bitmovin, Austria), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

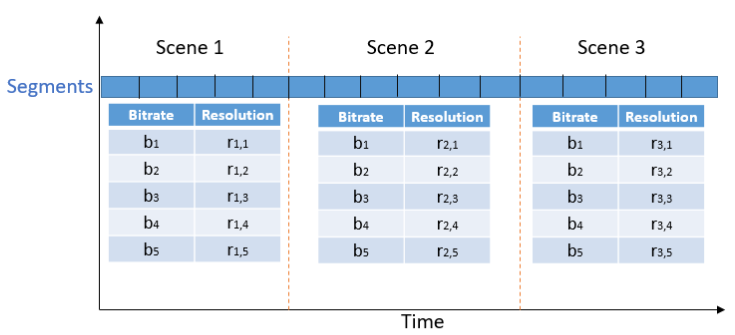

Abstract: In live streaming applications, a fixed set of bitrate-resolution pairs (known as a bitrate ladder) is typically used during the entire streaming session to avoid the additional latency of detecting scene transitions and finding optimized bitrate-resolution pairs for every video content. However, an optimized bitrate ladder per scene may result in (i) decreased storage or delivery costs and/or (ii) increased Quality of Experience (QoE). This paper introduces an Online Per-Scene Encoding (OPSE) scheme for adaptive HTTP live streaming applications. In this scheme, scene transitions and optimized bitrate-resolution pairs for every scene are predicted using Discrete Cosine Transform (DCT)-energy-based low-complexity spatial and temporal features. Experimental results show that, on average, OPSE yields bitrate savings of up to 48.88% in certain scenes while maintaining the same VMAF, compared to the reference HTTP Live Streaming (HLS) bitrate ladder, without any noticeable additional latency in streaming.

The bitrate ladder prediction envisioned using OPSE.
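OPSE predicts scene transitions from DCT-energy-based spatial and temporal features. The sketch below shows the general idea in simplified form and is not the authors' feature definition: it sums the absolute AC coefficients of each block's 2-D DCT without any frequency-dependent weighting the original features may apply, averages them into a per-frame spatial energy `E`, takes the mean block-wise change between consecutive frames as the temporal energy `h`, and flags a scene cut when `h` exceeds a threshold. Block size, the threshold rule and all function names are assumptions, and the per-scene ladder prediction itself is not shown.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II basis, so that D @ x @ D.T is the 2-D DCT of block x."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    d = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    d[0, :] = np.sqrt(1.0 / n)
    return d


def block_energies(frame: np.ndarray, w: int = 8) -> np.ndarray:
    """Sum of absolute AC DCT coefficients for each non-overlapping w x w block."""
    d = dct_matrix(w)
    rows, cols = frame.shape
    energies = []
    for y in range(0, rows - rows % w, w):
        for x in range(0, cols - cols % w, w):
            coeffs = d @ frame[y:y + w, x:x + w].astype(float) @ d.T
            coeffs[0, 0] = 0.0  # drop DC so only texture/detail contributes
            energies.append(np.abs(coeffs).sum())
    return np.array(energies)


def complexity_features(frames, w: int = 8):
    """Per-frame spatial energy E and temporal energy h (simplified, unweighted)."""
    es, hs, prev = [], [], None
    for f in frames:
        cur = block_energies(f, w)
        es.append(cur.mean() / w**2)
        hs.append(0.0 if prev is None else np.abs(cur - prev).mean() / w**2)
        prev = cur
    return np.array(es), np.array(hs)


def scene_cuts(h: np.ndarray, threshold: float) -> list:
    """Hypothetical rule: frame t starts a new scene if its temporal energy exceeds threshold."""
    return [t for t in range(1, len(h)) if h[t] > threshold]


# Synthetic clip: three flat frames followed by three checkerboard frames.
flat = np.full((16, 16), 128.0)
checker = 255.0 * (np.indices((16, 16)).sum(axis=0) % 2)
frames = [flat] * 3 + [checker] * 3
E, h = complexity_features(frames)
print(scene_cuts(h, threshold=1.0))  # [3]
```

Because both features reuse the same block DCTs, the temporal feature comes almost for free once the spatial one is computed, which is in the spirit of the low-complexity features the abstract describes.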