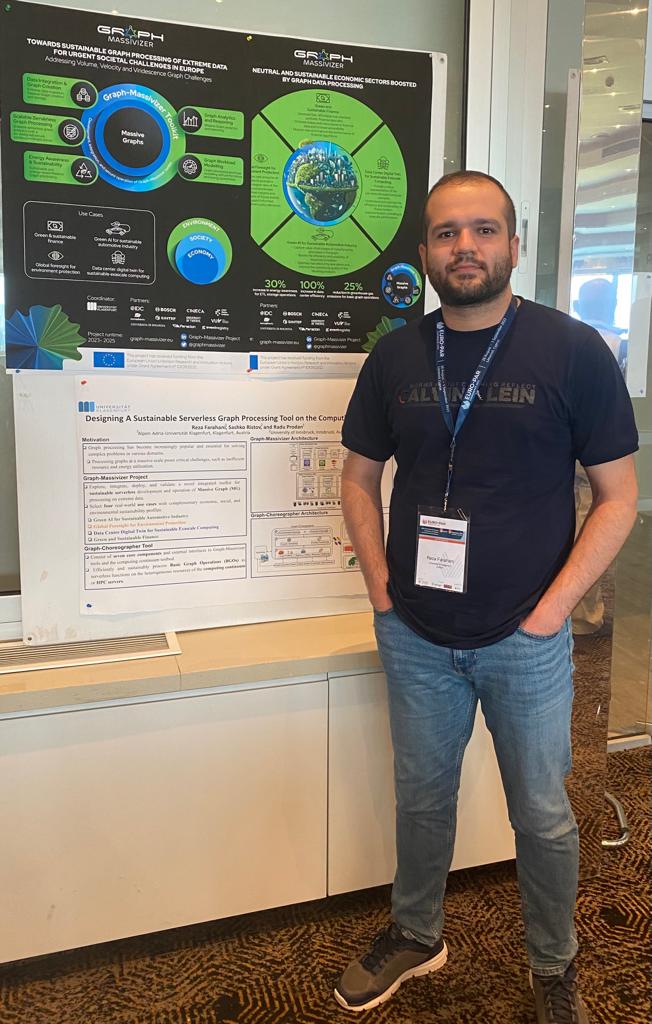

Title: Designing A Sustainable Serverless Graph Processing Tool on the Computing Continuum

Abstract: Graph processing has become increasingly popular and essential for solving complex problems in various domains, such as social networks. However, processing graphs at a massive scale poses critical challenges, such as inefficient resource and energy utilization. To address these challenges, the Graph-Massivizer project, funded by the Horizon Europe research and innovation program, conducts research on and develops a high-performance, scalable, and sustainable platform for information processing and reasoning based on the massive graph (MG) representation of extreme data. This paper presents an initial architectural design for the Choreographer, one of the five Graph-Massivizer tools. We explain Choreographer’s components and how they collaborate with the other Graph-Massivizer tools. We demonstrate how Choreographer can adopt the emerging serverless computing paradigm to efficiently process Basic Graph Operations (BGOs) as serverless functions across the computing continuum. Moreover, we present an early vision of our federated Function-as-a-Service (FaaS) testbed, which will be used to conduct experiments and assess Choreographer’s performance.

On 14.07.2023, Zahra Najafabadi Samani successfully defended her doctoral thesis, titled “Resource-Aware Time-Critical Application Placement in the Computing Continuum”, supervised by Prof. Radu Prodan and Assoc.-Prof. Dr. Klaus Schöffmann at ITEC. The defense was chaired by Univ.-Prof. Dr. Christian Timmerer, with Univ.-Prof. Dr. Thomas Fahringer (Leopold Franzens-Universität Innsbruck, AT) and Assoc.-Prof. Dr. Attila Kertesz (University of Szeged, HU) as examiners.