We are glad that the paper was accepted for publication in IEEE Transactions on Multimedia.

Authors: Hadi Amirpour (AAU, AT), Jingwen Zhu (Nantes University, FR), Wei Zhu (Cardiff University, UK), Patrick Le Callet (Nantes University, FR), and Christian Timmerer (AAU, AT)

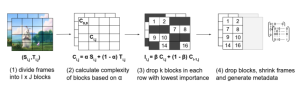

Abstract: In HTTP Adaptive Streaming (HAS), a video is encoded at various bitrate-resolution pairs, collectively known as the bitrate ladder, allowing users to select the most suitable representation based on their network conditions. Optimizing this set of pairs to enhance the Quality of Experience (QoE) requires accurately measuring the quality of these representations. VMAF and ITU-T’s P.1204.3 are highly reliable metrics for assessing the quality of representations in HAS. However, using these metrics for optimization is often impractical in live streaming applications because of their high computational cost and the large number of bitrate-resolution pairs in the bitrate ladder that need to be evaluated. To address this complexity, our paper introduces a new method called VQM4HAS, which extracts low-complexity features including (i) video complexity features, (ii) frame-level encoding statistics logged during the encoding process, and (iii) lightweight video quality metrics. These extracted features are then fed into a regression model to predict VMAF and P.1204.3 scores.
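The feature-to-score regression step described above can be illustrated with a minimal sketch. This is not the paper's model: the abstract does not say which regressor or which concrete features are used, so the example swaps in ordinary least squares from NumPy and three placeholder feature columns. The function names `fit_linear_regressor` and `predict_quality` are hypothetical, and the data is synthetic, generated only so the example runs.

```python
import numpy as np


def fit_linear_regressor(features, targets):
    """Fit least-squares weights (plus an intercept) mapping features to scores."""
    # Append a column of ones so the last weight acts as the intercept.
    design = np.column_stack([features, np.ones(len(features))])
    weights, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return weights


def predict_quality(weights, features):
    """Predict quality scores for new feature rows using the fitted weights."""
    design = np.column_stack([features, np.ones(len(features))])
    return design @ weights


# Synthetic stand-in data (an assumption, not taken from the paper):
# each row is one representation, each column one low-complexity feature.
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 3))
hidden_weights = np.array([4.0, -2.0, 1.5])
targets = features @ hidden_weights + 70.0 + rng.normal(scale=0.5, size=200)

# Train on the first 150 rows and predict the remaining 50.
weights = fit_linear_regressor(features[:150], targets[:150])
predicted = predict_quality(weights, features[150:])
```

In practice the feature columns would hold the video complexity features, encoding statistics, and lightweight metric values, and the targets would be VMAF or P.1204.3 scores computed offline for a training set.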

The VQM4HAS model is designed to operate on a per-bitrate-resolution-pair, per-resolution, and cross-representation basis, optimizing quality predictions across different HAS scenarios. Our experimental results demonstrate that VQM4HAS achieves a high correlation with VMAF and P.1204.3, with Pearson correlation coefficients (PCC) ranging from 0.95 to 0.96 for VMAF and 0.97 to 0.99 for P.1204.3, depending on the resolution. Despite this high correlation, VQM4HAS is significantly less complex than both metrics, reducing complexity by 98% relative to VMAF and 99% relative to P.1204.3, which makes it suitable for live streaming scenarios.
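For readers unfamiliar with the PCC figures quoted above, the following sketch shows how a Pearson correlation coefficient between predicted and reference quality scores can be computed. The function name `pearson_cc` is hypothetical and the code is a generic illustration of the statistic, not the paper's evaluation script.

```python
import numpy as np


def pearson_cc(predicted, reference):
    """Pearson correlation coefficient between two equal-length score lists."""
    p = np.asarray(predicted, dtype=float)
    r = np.asarray(reference, dtype=float)
    # Center both series, then divide their covariance term by the
    # product of their standard-deviation terms.
    p_centered = p - p.mean()
    r_centered = r - r.mean()
    denominator = np.sqrt((p_centered @ p_centered) * (r_centered @ r_centered))
    return float((p_centered @ r_centered) / denominator)
```

A value close to 1 means the predicted scores rise and fall almost linearly with the reference scores, which is the sense in which the correlations of 0.95 to 0.99 reported above should be read.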

We also conduct a feature importance analysis to further reduce the complexity of the proposed method. Furthermore, we evaluate the effectiveness of our method by using it to predict subjective quality scores. The results show that VQM4HAS achieves a higher correlation with subjective scores at various resolutions, despite its minimal complexity.