OTEC: An Optimized Transcoding Task Scheduler for Cloud and Fog Environments

Samira Afzal (Alpen-Adria-Universität Klagenfurt), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt), Hamid Hadian (Alpen-Adria-Universität Klagenfurt), Alireza Erfanian (Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), and Radu Prodan (Alpen-Adria-Universität Klagenfurt)

Abstract:

Encoding and transcoding videos into multiple codecs and representations is a significant challenge. Depending on technical characteristics such as video complexity and encoding parameters, it can take anywhere from seconds to days, even on high-performance computers. Cloud computing, which offers on-demand computing resources tailored to customers' needs and budgets, is a promising technology for accelerating dynamic transcoding workloads. In this work, we propose OTEC, a novel multi-objective optimization method based on a mixed-integer linear programming (MILP) model that optimizes the selection of computing instances for transcoding processes. OTEC determines the type and number of cloud and fog resource instances for video encoding and transcoding tasks, optimizing both computation cost and time. We evaluated OTEC on AWS EC2 and Exoscale instances under varying administrator priorities, numbers of encoded video segments, and segment transcoding times. The results show that OTEC selects appropriate resources and satisfies the administrator's priorities for minimizing time and cost.

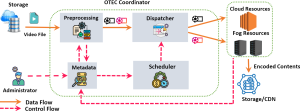

OTEC architecture overview.
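The time/cost trade-off that OTEC optimizes can be illustrated with a toy model. The Python sketch below is not OTEC's MILP formulation. It assumes a simplified fluid model in which segments divide perfectly across instances, every instance is billed for the whole makespan, and the administrator's priority is a single hypothetical weight `w_time`. Instead of a MILP solver, it exhaustively enumerates instance counts, which is practical only for a handful of instance types. The names `InstanceType`, `evaluate`, and `select_instances`, as well as the prices and speedups in the usage example, are hypothetical.

```python
# Toy weighted-sum sketch, NOT OTEC's actual MILP model.
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class InstanceType:
    name: str              # hypothetical label, e.g. "cloud-fast"
    price_per_hour: float  # hypothetical on-demand price (USD/hour)
    speedup: float         # throughput relative to a reference instance


def evaluate(counts, types, num_segments, seg_time_s):
    """Return (makespan_s, cost) for one allocation under the toy model.

    Assumes work divides perfectly across instances (fluid approximation)
    and that every selected instance is billed for the full makespan.
    """
    total_speed = sum(n * t.speedup for n, t in zip(counts, types))
    makespan_s = num_segments * seg_time_s / total_speed
    hourly_rate = sum(n * t.price_per_hour for n, t in zip(counts, types))
    return makespan_s, makespan_s / 3600 * hourly_rate


def select_instances(types, num_segments, seg_time_s, w_time, max_per_type=3):
    """Pick instance counts by exhaustive search (not a MILP solver).

    w_time in [0, 1] weights normalized time; (1 - w_time) weights cost.
    """
    candidates = []
    for counts in product(range(max_per_type + 1), repeat=len(types)):
        if sum(counts) == 0:
            continue  # at least one instance is required
        t, c = evaluate(counts, types, num_segments, seg_time_s)
        candidates.append((counts, t, c))

    t_min = min(t for _, t, _ in candidates)
    t_max = max(t for _, t, _ in candidates)
    c_min = min(c for _, _, c in candidates)
    c_max = max(c for _, _, c in candidates)

    def norm(x, lo, hi):
        # Min-max normalization puts time and cost on a comparable scale.
        return (x - lo) / (hi - lo) if hi > lo else 0.0

    def key(cand):
        counts, t, c = cand
        score = w_time * norm(t, t_min, t_max) + (1 - w_time) * norm(c, c_min, c_max)
        # Round away floating-point noise, then prefer fewer instances on ties.
        return (round(score, 9), sum(counts))

    return min(candidates, key=key)[0]


if __name__ == "__main__":
    # Hypothetical catalog: a fast, pricey cloud type and a slow, cheap fog type.
    types = [
        InstanceType("cloud-fast", 0.40, 2.0),
        InstanceType("fog-slow", 0.10, 1.0),
    ]
    print(select_instances(types, 100, 36.0, w_time=1.0))  # (3, 3): time only
    print(select_instances(types, 100, 36.0, w_time=0.0))  # (0, 1): cost only
```

With `w_time=1.0`, the sketch buys as much parallelism as the bound allows. With `w_time=0.0`, it keeps only the type with the lowest price-to-speed ratio, because under this fluid model adding instances of the same type shortens the makespan without changing the cost. For realistic catalogs, a MILP solver would replace the enumeration.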