Title: A Traffic-sign recognition IoT-based Application


July 18-22, 2022 | Taipei, Taiwan

Ekrem Çetinkaya (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

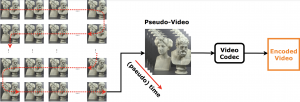

Abstract: Light field imaging enables post-capture actions such as refocusing and changing view perspective by capturing both spatial and angular information. However, capturing richer information about the 3D scene results in a huge amount of data. To improve the compression efficiency of the existing light field compression methods, we investigate the impact of light field super-resolution approaches (both spatial and angular super-resolution) on the compression efficiency. To this end, firstly, we downscale light field images over (i) spatial resolution, (ii) angular resolution, and (iii) spatial-angular resolution and encode them using Versatile Video Coding (VVC). We then apply a set of light field super-resolution deep neural networks to reconstruct light field images in their full spatial-angular resolution and compare their compression efficiency. Experimental results show that encoding the low angular resolution light field image and applying angular super-resolution yield bitrate savings of 51.16 % and 53.41 % to maintain the same PSNR and SSIM, respectively, compared to encoding the light field image in high-resolution.
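The three downscaling configurations named in the abstract can be illustrated with a minimal sketch. It assumes a light field stored as a nested list `views[u][v][y][x]` and uses plain subsampling with a hypothetical factor of 2; the paper's actual downscaling filter, factors, and data layout are not stated here, and all function names below are illustrative.

```python
from typing import List

# Assumed layout: views[u][v][y][x], a U x V grid of angular views,
# each an H x W grid of luma samples. This layout is hypothetical.
LightField = List[List[List[List[int]]]]


def downscale_angular(lf: LightField, factor: int = 2) -> LightField:
    """Keep every `factor`-th view along both angular axes."""
    return [row[::factor] for row in lf[::factor]]


def downscale_spatial(lf: LightField, factor: int = 2) -> LightField:
    """Keep every `factor`-th pixel in each view (no anti-alias filter)."""
    return [
        [[line[::factor] for line in view[::factor]] for view in row]
        for row in lf
    ]


def downscale_spatial_angular(lf: LightField, factor: int = 2) -> LightField:
    """Apply angular and then spatial subsampling."""
    return downscale_spatial(downscale_angular(lf, factor), factor)


def shape(lf: LightField) -> tuple:
    """Return (U, V, H, W) of a non-empty light field."""
    return (len(lf), len(lf[0]), len(lf[0][0]), len(lf[0][0][0]))


def make_light_field(u: int, v: int, h: int, w: int) -> LightField:
    """Build a synthetic light field with deterministic sample values."""
    return [
        [[[(a + b + y + x) % 256 for x in range(w)] for y in range(h)]
         for b in range(v)]
        for a in range(u)
    ]
```

In the pipeline described above, each downscaled variant would then be encoded and passed to a super-resolution network to recover the full spatial-angular resolution; those steps are outside this sketch.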

Keywords: Light field, Compression, Super-resolution, VVC.

MPEG, specifically ISO/IEC JTC 1/SC 29/WG 3 (MPEG Systems), has just been awarded a Technology & Engineering Emmy® Award for its ground-breaking MPEG-DASH standard. Dynamic Adaptive Streaming over HTTP (DASH) is the first international de-jure standard that enables efficient streaming of video over the Internet. It has changed the entire video streaming industry, including, but not limited to, on-demand, live, and low-latency streaming, and even 5G and the next generation of hybrid broadcast-broadband. The first edition was published in April 2012, and MPEG is currently working towards publishing the 5th edition, demonstrating an active and lively ecosystem that is still being developed and improved to address the requirements and challenges of modern media transport applications and services.

This award belongs to 90+ researchers and engineers from around 60 companies all around the world who participated in the development of the MPEG-DASH standard for over 12 years.

From left to right: Kyung-mo Park, Cyril Concolato, Thomas Stockhammer, Yuriy Reznik, Alex Giladi, Mike Dolan, Iraj Sodagar, Ali Begen, Christian Timmerer, Gary Sullivan, Per Fröjdh, Young-Kwon Lim, Ye-Kui Wang. (Photo © Yuriy Reznik)

Christian Timmerer, director of the Christian Doppler Laboratory ATHENA, chaired the evaluation of responses to the call for proposals and has since served as MPEG-DASH Ad-hoc Group (AHG) / Break-out Group (BoG) co-chair as well as co-editor for Part 2 of the standard. For a more detailed history of the MPEG-DASH standard, the interested reader is referred to Christian Timmerer’s blog post “HTTP Streaming of MPEG Media” (capturing the development of the first edition) and Nicolas Weill’s blog post “MPEG-DASH: The ABR Esperanto” (DASH timeline).

We are happy that our tutorial on Open Challenges of Interactive Video Search and Evaluation (by Jakub Lokoc, Klaus Schöffmann, Werner Bailer, Luca Rossetto, and Björn Thor Jonsson) has been accepted for ACM Multimedia (ACMMM 2022), to be held in Lisbon, Portugal, in October 2022.

The 5th Annual Lifelog Search Challenge (LSC 2022), co-organized by Klaus Schöffmann, will be this year’s grand challenge at the ACM International Conference on Multimedia Retrieval (ICMR 2022) in Newark, NJ, USA. More information here: https://www.icmr2022.org/program/challenges/

Klaus Schöffmann will give an invited talk about Relevant Content Detection in Cataract Surgery Videos at the IEEE International Conference on Image Processing Theory, Tools and Applications (IPTA) on April 19, 2022, in Salzburg, Austria. More information here: https://ipta-conference.com/ipta22/index.php/invited-speakers

Klaus Schöffmann will give a tutorial about Medical Video Processing at the IEEE International Conference on Image Processing Theory, Tools and Applications (IPTA) on April 19, 2022, in Salzburg, Austria. More information here: https://ipta-conference.com/ipta22/index.php/invited-speakers

We are happy that our 2nd International Workshop on Interactive Multimedia Retrieval (IMuR) has been accepted for the ACM Multimedia Conference 2022 (ACMMM) in Lisbon, Portugal. More information can be found here: https://sites.google.com/view/imur2022

Babak Taraghi (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

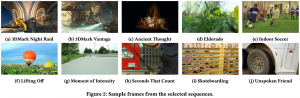

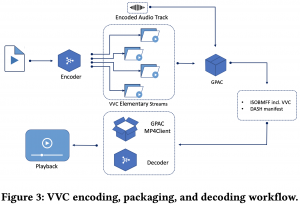

Abstract: Many applications produce multimedia traffic over the Internet. Video streaming is among them, with a rapidly growing demand for bandwidth to deliver higher resolutions such as Ultra High Definition (UHD) 8K content. The HTTP Adaptive Streaming (HAS) technique defines baselines for streaming audio-visual content that balance the delivered media quality and minimize streaming session defects. In parallel, the development and standardization of video codecs support this goal by introducing efficient algorithms and technologies. Versatile Video Coding (VVC) is one of the latest advancements in this area, and it is still not fully optimized or supported on all platforms. Such optimization and broad platform support require years of research and development. This paper offers a dataset that facilitates the research and development of the aforementioned technologies. Our open-source dataset comprises Dynamic Adaptive Streaming over HTTP (MPEG-DASH) multimedia test assets of encoded Advanced Video Coding (AVC), High Efficiency Video Coding (HEVC), AOMedia Video 1 (AV1), and VVC content with resolutions of up to 7680×4320 (8K). Our dataset has a maximum media duration of 322 seconds, and we offer our MPEG-DASH packaged content with two segment lengths, 4 and 8 seconds.

The dataset is available here.
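As a rough illustration of what the two segment lengths mean for the longest sequence, the sketch below counts the segments a 322-second asset would be split into. It assumes fixed-length segments with a shorter final segment; the dataset's actual packaging settings are not described here, and the function names are hypothetical.

```python
import math
from typing import List


def segment_count(duration_s: float, segment_length_s: float) -> int:
    """Number of segments needed to cover the full media duration."""
    return math.ceil(duration_s / segment_length_s)


def segment_durations(duration_s: float, segment_length_s: float) -> List[float]:
    """Per-segment durations; only the last segment may be shorter."""
    full = int(duration_s // segment_length_s)
    durations = [segment_length_s] * full
    remainder = duration_s - full * segment_length_s
    if remainder > 0:
        durations.append(remainder)
    return durations


if __name__ == "__main__":
    for length in (4, 8):
        print(length, segment_count(322, length), segment_durations(322, length)[-1])
```

Under these assumptions, the 322-second sequence yields 81 segments at 4 seconds and 41 segments at 8 seconds, with a 2-second final segment in both cases.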

June 14–17, 2022 | Athlone, Ireland

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Christian Feldmann (Bitmovin, Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

Abstract:

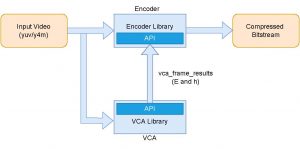

VCA in content-adaptive encoding applications

For online analysis of the video content complexity in live streaming applications, selecting low-complexity features is critical to ensure low-latency video streaming without disruptions. To this end, for each video (segment), two features, i.e., the average texture energy and the average gradient of the texture energy, are determined. A DCT-based energy function is introduced to determine the block-wise texture of each frame. The spatial and temporal features of the video (segment) are derived from the DCT-based energy function. The Video Complexity Analyzer (VCA) project aims to provide an efficient spatial and temporal complexity analysis of each video (segment), which can be used in various applications to find the optimal encoding decisions. VCA leverages some of the x86 Single Instruction Multiple Data (SIMD) optimizations for Intel CPUs and multi-threading optimizations to achieve increased performance. VCA is an open-source library published under the GNU GPLv3 license.
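The idea of deriving a spatial and a temporal feature from a block-wise DCT energy can be sketched as follows. This is not VCA's implementation: the energy here is an assumed stand-in (the unweighted sum of absolute AC coefficients of an orthonormal DCT-II), the block size is arbitrary, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]  # luma samples, frame[y][x]


def dct_2d(block: Frame) -> Frame:
    """Orthonormal 2D DCT-II of a square block, computed as C * B * C^T."""
    n = len(block)
    basis = [
        [math.sqrt((1 if k == 0 else 2) / n)
         * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)]
        for k in range(n)
    ]
    tmp = [[sum(basis[k][i] * block[i][j] for i in range(n)) for j in range(n)]
           for k in range(n)]
    return [[sum(tmp[k][j] * basis[l][j] for j in range(n)) for l in range(n)]
            for k in range(n)]


def block_energy(block: Frame) -> float:
    """Assumed texture measure: sum of absolute AC coefficients (DC skipped)."""
    coeffs = dct_2d(block)
    return sum(abs(c) for row in coeffs for c in row) - abs(coeffs[0][0])


def frame_block_energies(frame: Frame, n: int) -> List[float]:
    """Energy of each non-overlapping n x n block; partial edge blocks are ignored."""
    height, width = len(frame), len(frame[0])
    return [
        block_energy([row[x:x + n] for row in frame[y:y + n]])
        for y in range(0, height - n + 1, n)
        for x in range(0, width - n + 1, n)
    ]


def texture_features(frames: List[Frame], n: int = 8) -> Tuple[float, float]:
    """Return (average texture energy, average gradient of the texture energy)."""
    energies = [frame_block_energies(f, n) for f in frames]
    spatial = sum(sum(e) / len(e) for e in energies) / len(energies)
    diffs = [
        sum(abs(a - b) for a, b in zip(cur, prev)) / len(cur)
        for prev, cur in zip(energies, energies[1:])
    ]
    temporal = sum(diffs) / len(diffs) if diffs else 0.0
    return spatial, temporal
```

A flat frame gives an energy close to zero, while a textured frame gives a positive one, so the first value tracks spatial detail and the second tracks how much that detail changes between consecutive frames.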

GitHub: https://github.com/cd-athena/VCA

Online documentation: https://cd-athena.github.io/VCA/