Authors: Sandro Linder (AAU, Austria), Samira Afzal (AAU, Austria), Christian Bauer (AAU, Austria), Hadi Amirpour (AAU, Austria), Radu Prodan (AAU, Austria), and Christian Timmerer (AAU, Austria)

Venue: The 15th ACM Multimedia Systems Conference (Open-source Software and Datasets)

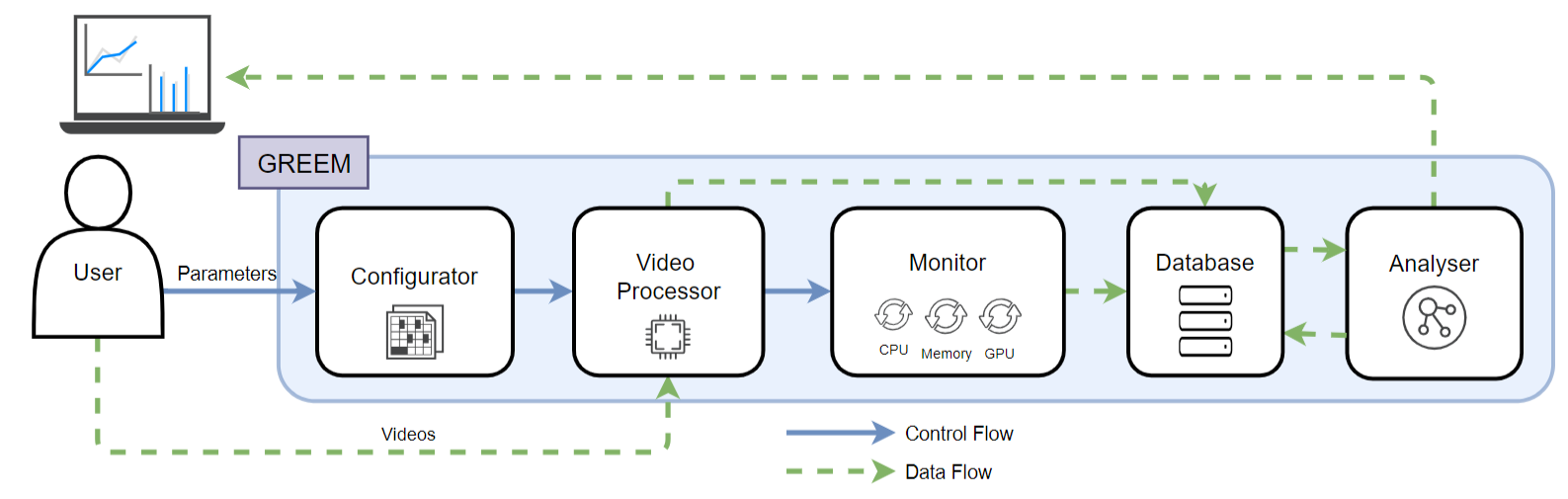

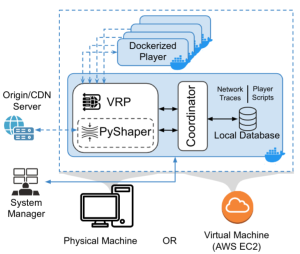

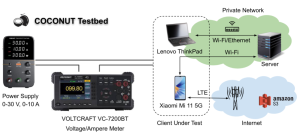

Abstract: Video streaming constitutes 65% of global internet traffic, prompting an investigation into its energy consumption and CO2 emissions. Video encoding, a computationally intensive part of streaming, has moved to cloud computing for its scalability and flexibility. However, the energy consumption of cloud data centers, especially for video encoding, poses environmental challenges. This paper presents VEED, a FAIR Video Encoding Energy and CO2 Emissions Dataset for Amazon Web Services (AWS) EC2 instances. The dataset also contains the duration, CPU utilization, and cost of each encoding. To prepare this dataset, we introduce a model and conduct a benchmark to estimate the energy and CO2 emissions of different Amazon EC2 instances during the encoding of 500 video segments with various complexities and resolutions using Advanced Video Coding (AVC) and High-Efficiency Video Coding (HEVC). VEED and its analysis can provide valuable insights for video researchers and engineers to model energy consumption, manage energy resources, and distribute workloads, contributing to the sustainability of cloud-based video encoding and making it cost-effective. VEED is available on GitHub.
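The abstract does not spell out the estimation model, so the following is only a generic sketch of how energy and CO2 emissions are commonly estimated from encoding duration and CPU utilization; it is not the model used in the paper. It assumes a linear power model between an instance's idle and full-load power draw, a data center power usage effectiveness (PUE) factor, and a regional grid carbon intensity. The function name, parameter names, and all numeric values are hypothetical.

```python
def estimate_encoding_footprint(duration_s, cpu_utilization,
                                idle_watts, max_watts,
                                pue, grid_gco2_per_kwh):
    """Return (energy_kwh, co2_grams) for one encoding run.

    Assumption (not from the paper): power scales linearly with CPU
    utilization between the instance's idle and full-load draw.
    """
    if not 0.0 <= cpu_utilization <= 1.0:
        raise ValueError("cpu_utilization must be a fraction in [0, 1]")
    if duration_s < 0:
        raise ValueError("duration_s must be non-negative")

    # Average power drawn by the instance during the encode, in watts.
    avg_watts = idle_watts + cpu_utilization * (max_watts - idle_watts)

    # Watts * hours -> watt-hours; divide by 1000 for kWh.
    # PUE accounts for data center overhead such as cooling.
    energy_kwh = avg_watts * (duration_s / 3600.0) / 1000.0 * pue

    # Grid carbon intensity converts energy into emitted CO2 (grams).
    co2_grams = energy_kwh * grid_gco2_per_kwh
    return energy_kwh, co2_grams


# Hypothetical values for illustration only, not measurements from VEED.
energy, co2 = estimate_encoding_footprint(
    duration_s=120.0, cpu_utilization=0.9,
    idle_watts=50.0, max_watts=200.0,
    pue=1.2, grid_gco2_per_kwh=300.0,
)
print(f"energy: {energy:.5f} kWh, CO2: {co2:.2f} g")
```

Because the dataset records duration and CPU utilization alongside energy and CO2 values, a sketch like this could be compared against the published figures for a given instance type, provided the power and carbon-intensity inputs are replaced with real ones.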