Radu Prodan talks about “Democratic Trustworthy News in the Social Continuum: the ARTICONF Approach” Live streaming at the Digital Day in Messina.

ICME Workshop on Hyper-Realistic Multimedia for Enhanced Quality of Experience (ICMEW)

July 18-22, 2022 | Taipei, Taiwan

Conference Website

Ekrem Çetinkaya (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

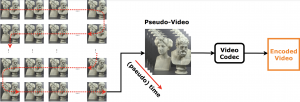

Abstract: Light field imaging enables post-capture actions such as refocusing and changing view perspective by capturing both spatial and angular information. However, capturing richer information about the 3D scene results in a huge amount of data. To improve the compression efficiency of the existing light field compression methods, we investigate the impact of light field super-resolution approaches (both spatial and angular super-resolution) on the compression efficiency. To this end, firstly, we downscale light field images over (i) spatial resolution, (ii) angular resolution, and (iii) spatial-angular resolution and encode them using Versatile Video Coding (VVC). We then apply a set of light field super-resolution deep neural networks to reconstruct light field images in their full spatial-angular resolution and compare their compression efficiency. Experimental results show that encoding the low angular resolution light field image and applying angular super-resolution yield bitrate savings of 51.16 % and 53.41 % to maintain the same PSNR and SSIM, respectively, compared to encoding the light field image in high-resolution.
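The three downscaling variants named in the abstract can be pictured on a toy light field stored as a grid of views. The sketch below is only an illustration under assumptions: the function names, the keep-every-second-view rule, and the 2×2 box averaging are hypothetical choices, not necessarily the sampling used in the paper, and the VVC encoding and super-resolution steps are left out entirely.

```python
# Toy light field: lf[u][v] is one view (a 2D list of pixel values),
# where (u, v) is the angular position and (y, x) the spatial position.

def make_light_field(U, V, H, W):
    """Build a synthetic U x V grid of H x W views (illustrative data only)."""
    return [[[[u + v + y + x for x in range(W)] for y in range(H)]
             for v in range(V)] for u in range(U)]

def downscale_angular(lf, step=2):
    """Keep every `step`-th view in both angular directions (assumed rule)."""
    return [row[::step] for row in lf[::step]]

def downscale_spatial_view(view, factor=2):
    """Box-average non-overlapping factor x factor pixel blocks of one view."""
    H, W = len(view), len(view[0])
    out = []
    for y in range(0, H - H % factor, factor):
        out_row = []
        for x in range(0, W - W % factor, factor):
            block = [view[y + dy][x + dx]
                     for dy in range(factor) for dx in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out

def downscale_spatial(lf, factor=2):
    """Apply the spatial downscaling to every view of the light field."""
    return [[downscale_spatial_view(view, factor) for view in row] for row in lf]

def shape(lf):
    """Return (angular rows, angular columns, height, width)."""
    return (len(lf), len(lf[0]), len(lf[0][0]), len(lf[0][0][0]))

lf = make_light_field(8, 8, 16, 16)
angular = downscale_angular(lf)                    # (ii) angular only
spatial = downscale_spatial(lf)                    # (i) spatial only
both = downscale_spatial(downscale_angular(lf))    # (iii) spatial-angular
print(shape(lf), shape(angular), shape(spatial), shape(both))
```

In this toy setup, angular downscaling discards three out of four views, while spatial downscaling keeps every view at a quarter of its pixel count.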

Keywords: Light field, Compression, Super-resolution, VVC.

Babak Taraghi (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

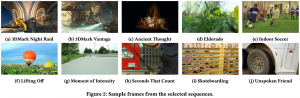

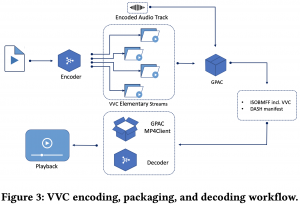

Abstract: There exist many applications that produce multimedia traffic over the Internet. Video streaming is on the list, with a rapidly growing desire for more bandwidth to deliver higher resolutions such as Ultra High Definition (UHD) 8K content. The HTTP Adaptive Streaming (HAS) technique defines baselines for audio-visual content streaming to balance the delivered media quality and minimize streaming session defects. On the other hand, the development and standardization of video codecs support this effort by introducing efficient algorithms and technologies. Versatile Video Coding (VVC) is one of the latest advancements in this area that is still not fully optimized and supported on all platforms. Such optimization and broad platform support require years of research and development. This paper offers a dataset that facilitates the research and development of the aforementioned technologies. Our open-source dataset comprises Dynamic Adaptive Streaming over HTTP (MPEG-DASH) multimedia test assets of encoded Advanced Video Coding (AVC), High Efficiency Video Coding (HEVC), AOMedia Video 1 (AV1), and VVC content with resolutions of up to 7680×4320 or 8K. Our dataset has a maximum media duration of 322 seconds, and we offer our MPEG-DASH packaged content with two segment lengths, 4 and 8 seconds.
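As a quick back-of-the-envelope check of what the two segment lengths mean for the longest asset, the sketch below counts segments per representation. It assumes that the final, shorter segment is packaged as its own segment; the actual packaging of the dataset may differ.

```python
import math

MEDIA_DURATION_S = 322       # maximum media duration stated in the abstract
SEGMENT_LENGTHS_S = (4, 8)   # the two segment lengths offered

def segment_count(duration_s, segment_s):
    """Number of segments, counting a shorter trailing segment (assumption)."""
    return math.ceil(duration_s / segment_s)

counts = {seg: segment_count(MEDIA_DURATION_S, seg) for seg in SEGMENT_LENGTHS_S}
print(counts)
```

Under this assumption, a 322-second asset splits into 81 segments of 4 seconds or 41 segments of 8 seconds per representation.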

The dataset is available here.

The 13th ACM Multimedia Systems Conference (ACM MMSys 2022) Open Dataset and Software (ODS) track

June 14–17, 2022 | Athlone, Ireland

Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Christian Feldmann (Bitmovin, Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt).

Abstract:

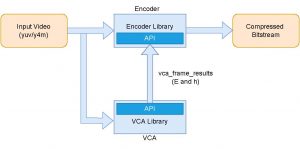

VCA in content-adaptive encoding applications

For online analysis of the video content complexity in live streaming applications, selecting low-complexity features is critical to ensure low-latency video streaming without disruptions. In this light, for each video (segment), two features, i.e., the average texture energy and the average gradient of the texture energy, are determined. A DCT-based energy function is introduced to determine the block-wise texture of each frame. The spatial and temporal features of the video (segment) are derived from the DCT-based energy function. The Video Complexity Analyzer (VCA) project aims to provide an efficient spatial and temporal complexity analysis of each video (segment) which can be used in various applications to find the optimal encoding decisions. VCA leverages some of the x86 Single Instruction Multiple Data (SIMD) optimizations for Intel CPUs and multi-threading optimizations to achieve increased performance. VCA is an open-source library published under the GNU GPLv3 license.
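To make the two features more concrete, here is a minimal pure-Python sketch of a block-wise DCT energy and its frame-to-frame gradient. It is not the VCA implementation: the text does not give the exact energy function, so an unweighted sum of absolute AC coefficients serves as a stand-in, and the 8×8 block size and all function names are assumptions.

```python
import math

BLOCK = 8  # block size; an assumption for this sketch

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of size n x n."""
    m = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        m.append([scale * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                  for i in range(n)])
    return m

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

C = dct_matrix(BLOCK)
CT = [list(col) for col in zip(*C)]  # transpose of C

def block_energy(block):
    """Stand-in texture energy: sum of absolute DCT coefficients without DC."""
    coeffs = matmul(matmul(C, block), CT)  # 2D DCT of the block
    total = sum(abs(c) for row in coeffs for c in row)
    return total - abs(coeffs[0][0])

def frame_block_energies(frame):
    """Texture energy of every full BLOCK x BLOCK block of a luma frame."""
    H, W = len(frame), len(frame[0])
    energies = []
    for y in range(0, H - BLOCK + 1, BLOCK):
        for x in range(0, W - BLOCK + 1, BLOCK):
            block = [row[x:x + BLOCK] for row in frame[y:y + BLOCK]]
            energies.append(block_energy(block))
    return energies

def segment_features(frames):
    """Return (average texture energy, average gradient of texture energy)."""
    per_frame = [frame_block_energies(f) for f in frames]
    spatial = [sum(e) / len(e) for e in per_frame]
    # Gradient: mean absolute change of co-located block energies between frames.
    temporal = [sum(abs(a - b) for a, b in zip(cur, prev)) / len(cur)
                for prev, cur in zip(per_frame, per_frame[1:])]
    E = sum(spatial) / len(spatial)
    h = sum(temporal) / len(temporal) if temporal else 0.0
    return E, h

# Synthetic 16 x 16 frames: a flat frame and a checkerboard (made-up data).
flat = [[128.0] * 16 for _ in range(16)]
checker = [[255.0 if (x + y) % 2 else 0.0 for x in range(16)] for y in range(16)]
print(segment_features([flat, flat]))
print(segment_features([flat, checker]))
```

A flat segment yields energies close to zero, while the switch from a flat frame to a checkerboard raises both the spatial and the temporal feature. For real use, the VCA library itself is the reference.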

Github: https://github.com/cd-athena/VCA

Online documentation: https://cd-athena.github.io/VCA/

In Carinthia, researchers find an open test laboratory in the 5G Playground Carinthia, where the possibilities of the new mobile phone technology can be explored. The problem is: 5G enables the fast transmission of large amounts of data, but these also have to be processed. Read the whole interview with Univ.-Prof. DI Dr. Radu Prodan in the latest University of Klagenfurt news.

Prof. Radu Prodan held a keynote speech about the ARTICONF project at the 3rd International Conference on Applications of AI & Machine Learning (ICAML 2021).

Congratulations to Negin Ghamsarian et al., who got their paper “ReCal-Net: Joint Region-Channel-Wise Calibrated Network for Semantic Segmentation in Cataract Surgery Videos” accepted at the International Conference on Neural Information Processing (ICONIP 2021).

Abstract: Semantic segmentation in surgical videos is a prerequisite for a broad range of applications towards improving surgical outcomes and surgical video analysis. However, semantic segmentation in surgical videos involves many challenges. In particular, in cataract surgery, various features of the relevant objects such as blunt edges, color and context variation, reflection, transparency, and motion blur pose a challenge for semantic segmentation. In this paper, we propose a novel convolutional module termed as ReCal module, which can calibrate the feature maps by employing region intra-and-inter-dependencies and channel-region cross-dependencies. This calibration strategy can effectively enhance semantic representation by correlating different representations of the same semantic label, considering a multi-angle local view centering around each pixel. Thus the proposed module can deal with distant visual characteristics of unique objects as well as cross-similarities in the visual characteristics of different objects. Moreover, we propose a novel network architecture based on the proposed module termed as ReCal-Net. Experimental results confirm the superiority of ReCal-Net compared to rival state-of-the-art approaches for all relevant objects in cataract surgery. Moreover, ablation studies reveal the effectiveness of the ReCal module in boosting semantic segmentation accuracy.

Title: On The Impact of Viewing Distance on Perceived Video Quality

Link: IEEE Visual Communications and Image Processing (VCIP 2021) 5-8 December 2021, Munich, Germany

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Raimund Schatz (AIT Austrian Institute of Technology, Austria), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

Abstract: Due to the growing importance of optimizing quality and efficiency of video streaming delivery, accurate assessment of user perceived video quality becomes increasingly relevant. However, due to the wide range of viewing distances encountered in real-world viewing settings, actually perceived video quality can vary significantly in everyday viewing situations. In this paper, we investigate and quantify the influence of viewing distance on perceived video quality. A subjective experiment was conducted with full HD sequences at three different stationary viewing distances, with each video sequence being encoded at three different quality levels. Our study results confirm that the viewing distance has a significant influence on the quality assessment. In particular, they show that an increased viewing distance generally leads to an increased perceived video quality, especially at low media encoding quality levels. In this context, we also provide an estimation of potential bitrate savings that knowledge of actual viewing distance would enable in practice.

Since current objective video quality metrics do not systematically take into account viewing distance, we also analyze and quantify the influence of viewing distance on the correlation between objective and subjective metrics. Our results confirm the need for distance-aware objective metrics when accurate prediction of perceived video quality in real-world environments is required.
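One way to quantify how well an objective metric tracks subjective scores at each viewing distance is a per-distance Pearson correlation. The sketch below uses entirely made-up numbers; the distance labels, scores, and variable names are hypothetical and do not come from the study.

```python
import math

def pearson(xs, ys):
    """Pearson linear correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

# Made-up objective scores for four encodings (distance-independent).
objective = [30.0, 34.0, 38.0, 42.0]

# Made-up mean opinion scores for the same encodings at three distances.
mos_by_distance = {
    "near": [1.5, 2.6, 3.6, 4.5],
    "mid": [2.4, 3.3, 4.0, 4.5],
    "far": [3.6, 4.2, 4.4, 4.5],
}

for distance, mos in mos_by_distance.items():
    print(distance, round(pearson(objective, mos), 3))
```

Because the objective scores stay fixed while the subjective scores shift with distance, the correlation can differ from one distance to the next, which is the kind of effect a distance-aware metric would need to capture.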

Title: Improving Per-title Encoding for HTTP Adaptive Streaming by Utilizing Video Super-resolution

Link: IEEE Visual Communications and Image Processing (VCIP 2021) 5-8 December 2021, Munich, Germany

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Hannaneh Barahouei Pasandi (Virginia Commonwealth University), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

Abstract: In per-title encoding, to optimize a bitrate ladder over spatial resolution, each video segment is downscaled to a set of spatial resolutions and they are all encoded at a given set of bitrates. To find the highest quality resolution for each bitrate, the low-resolution encoded videos are upscaled to the original resolution, and a convex hull is formed based on the scaled qualities. Deep learning-based video super-resolution (VSR) approaches show a significant gain over traditional approaches and they are becoming more and more efficient over time. This paper improves the per-title encoding over the upscaling methods by using deep neural network-based VSR algorithms as they show a significant gain over traditional approaches. Utilizing a VSR algorithm by improving the quality of low-resolution encodings can improve the convex hull. As a result, it will lead to an improved bitrate ladder. To avoid bandwidth wastage at perceptually lossless bitrates a maximum threshold for the quality is set and encodings beyond it are eliminated from the bitrate ladder. Similarly, a minimum threshold is set to avoid low-quality video delivery. The encodings between the maximum and minimum thresholds are selected based on one Just Noticeable Difference. Our experimental results show that the proposed per-title encoding results in a 24% bitrate reduction and 53% storage reduction compared to the state-of-the-art method.
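The ladder construction described in the abstract can be sketched as follows. This is a simplified illustration, not the authors' implementation: the quality scores are made-up values assumed to be measured after upscaling (for instance with a VSR model), and the thresholds, the size of one JND, and all names are hypothetical.

```python
# Hypothetical measurements: quality[(bitrate_kbps, resolution)] is the score
# after upscaling to the original resolution (0-100 scale, made-up numbers).
quality = {
    (500, "540p"): 62.0, (500, "1080p"): 48.0,
    (1000, "540p"): 74.0, (1000, "1080p"): 70.0,
    (2000, "540p"): 80.0, (2000, "1080p"): 86.0,
    (3000, "540p"): 81.0, (3000, "1080p"): 90.0,
    (4000, "540p"): 82.0, (4000, "1080p"): 94.0,
    (8000, "540p"): 83.0, (8000, "1080p"): 98.0,
}

MAX_QUALITY = 95.0  # assumed "perceptually lossless" threshold
MIN_QUALITY = 50.0  # assumed lowest acceptable quality
JND = 6.0           # assumed size of one Just Noticeable Difference

def best_per_bitrate(quality):
    """For each bitrate, keep the resolution with the highest quality."""
    best = {}
    for (bitrate, res), q in quality.items():
        if bitrate not in best or q > best[bitrate][1]:
            best[bitrate] = (res, q)
    return [(b, res, q) for b, (res, q) in sorted(best.items())]

def build_ladder(quality):
    """Filter the best points by the thresholds and space them one JND apart."""
    ladder = []
    for bitrate, res, q in best_per_bitrate(quality):
        if q < MIN_QUALITY or q > MAX_QUALITY:
            continue  # too poor to deliver, or bandwidth wasted
        if not ladder or q - ladder[-1][2] >= JND:
            ladder.append((bitrate, res, q))
    return ladder

for rung in build_ladder(quality):
    print(rung)
```

With these numbers, the 3000 kbps point is dropped because it is less than one JND above the 2000 kbps rung, and the 8000 kbps point is dropped because it exceeds the maximum threshold. Better upscaling raises the scores of the low-resolution encodings, which can change which resolution wins at a given bitrate.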

Title: INTENSE: In-depth Studies on Stall Events and Quality Switches and Their Impact on the Quality of Experience in HTTP Adaptive Streaming

Link: IEEE Access, A Multidisciplinary, Open-access Journal of the IEEE


Babak Taraghi (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Minh Nguyen (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

Abstract: With the recent growth of multimedia traffic over the Internet and emerging multimedia streaming service providers, improving Quality of Experience (QoE) for HTTP Adaptive Streaming (HAS) becomes more important. Alongside other factors, such as the media quality, HAS relies on the performance of the media player’s Adaptive Bitrate (ABR) algorithm to optimize QoE in multimedia streaming sessions. QoE in HAS suffers from weak or unstable internet connections and suboptimal ABR decisions. As a result of imperfect adaptiveness to the characteristics and conditions of the internet connection, stall events and quality level switches of varying durations can occur, which negatively affect the QoE. In this paper, we address various identified open issues related to the QoE for HAS, notably (i) the minimum noticeable duration for stall events in HAS; (ii) the correlation between the media quality and the impact of stall events on QoE; (iii) the end-user preference regarding multiple shorter stall events versus a single longer stall event; and (iv) the end-user preference of media quality switches over stall events. Therefore, we have studied these open issues from both objective and subjective evaluation perspectives and presented the correlation between the two types of evaluations. The findings documented in this paper can be used as a baseline for improving ABR algorithms and policies in HAS.

Keywords: Crowdsourcing; HTTP Adaptive Streaming; Quality of Experience; Quality Switches; Stall Events; Subjective Evaluation; Objective Evaluation.