IEEE Global Communications Conference (GLOBECOM)

December 4-8, 2022 | Rio de Janeiro, Brazil

Conference Website

Authors: Reza Farahani (Alpen-Adria-Universität Klagenfurt, Austria), Abdelhak Bentaleb (National University of Singapore, Singapore), Ekrem Cetinkaya (Alpen-Adria-Universität Klagenfurt, Austria), Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria), Roger Zimmermann (National University of Singapore, Singapore), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt, Austria)

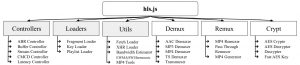

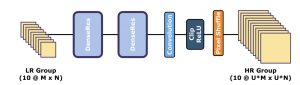

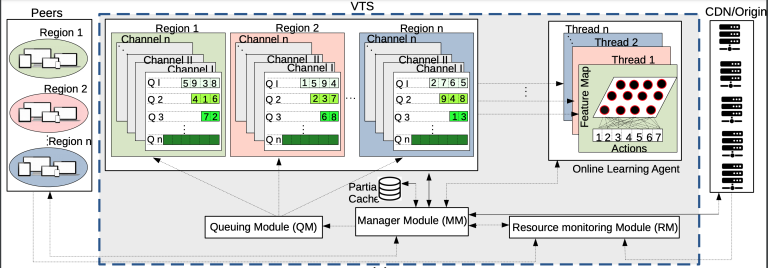

Abstract: A cost-effective, scalable, and flexible architecture that supports low-latency and high-quality live video streaming is still a challenge for Over-The-Top (OTT) service providers. To cope with this issue, this paper leverages Peer-to-Peer (P2P), Content Delivery Network (CDN), edge computing, Network Function Virtualization (NFV), and distributed video transcoding paradigms to introduce a hybRId P2P-CDN arcHiTecture for livE video stReaming (RICHTER). We first introduce RICHTER’s multi-layer architecture and design an action tree that considers all feasible resources provided by peers, edge, and CDN servers for serving peer requests with minimum latency and maximum quality. We then formulate the problem as an optimization model executed at the edge of the network. We present an Online Learning (OL) approach that leverages an unsupervised Self-Organizing Map (SOM) to (i) alleviate the time complexity issue of the optimization model and (ii) make it a suitable solution for large-scale scenarios by enabling decisions for groups of requests instead of for single requests. Finally, we implement the RICHTER framework, conduct our experiments on a large-scale cloud-based testbed including 350 HAS players, and compare its effectiveness with baseline systems. The experimental results illustrate that RICHTER outperforms baseline schemes in terms of users’ Quality of Experience (QoE), latency, and network utilization by at least 59%, 39%, and 70%, respectively.
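To make the grouping idea in the abstract concrete, the following is a minimal sketch of how a one-dimensional SOM could cluster incoming requests so that one decision is taken per group rather than per request. It is not RICHTER's implementation: the feature vectors (for example, a normalized requested bitrate and a normalized latency), the function names `train_som` and `group_requests`, the deterministic initialization, and all hyperparameters are assumptions chosen for illustration only.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return the index of the SOM unit whose weight vector is closest to x."""
    return min(range(len(weights)), key=lambda i: math.dist(weights[i], x))


def train_som(samples, n_units=2, epochs=20, lr0=0.5, sigma0=0.5, seed=0):
    """Train a 1-D SOM on feature vectors (hypothetical request features)."""
    rng = random.Random(seed)
    n = len(samples)
    # Assumption: initialize units from evenly spaced samples for determinism.
    weights = [
        list(samples[round(i * (n - 1) / max(n_units - 1, 1))])
        for i in range(n_units)
    ]
    total_steps = epochs * n
    step = 0
    for _ in range(epochs):
        order = list(range(n))
        rng.shuffle(order)
        for idx in order:
            x = samples[idx]
            # Learning rate and neighborhood width both decay over time.
            decay = math.exp(-step / total_steps)
            lr, sigma = lr0 * decay, sigma0 * decay
            bmu = best_matching_unit(weights, x)
            for u, w in enumerate(weights):
                # Gaussian neighborhood on the 1-D unit grid.
                h = math.exp(-((u - bmu) ** 2) / (2 * sigma ** 2))
                for d in range(len(w)):
                    w[d] += lr * h * (x[d] - w[d])
            step += 1
    return weights


def group_requests(weights, samples):
    """Map each SOM unit index to the list of request indices assigned to it."""
    groups = {}
    for idx, x in enumerate(samples):
        groups.setdefault(best_matching_unit(weights, x), []).append(idx)
    return groups
```

Under these assumptions, a decision procedure such as the paper's optimization model would be invoked once per key of the dictionary returned by `group_requests`, instead of once per request, which is where the reduction in decision time would come from.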