18th International Conference on Network and Service Management (CNSM 2022)

Thessaloniki, Greece | 31 October – 4 November 2022

Minh Nguyen (Alpen-Adria-Universität Klagenfurt, Austria), Babak Taraghi (Alpen-Adria-Universität Klagenfurt, Austria), Abdelhak Bentaleb (National University of Singapore, Singapore), Roger Zimmermann (National University of Singapore, Singapore), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

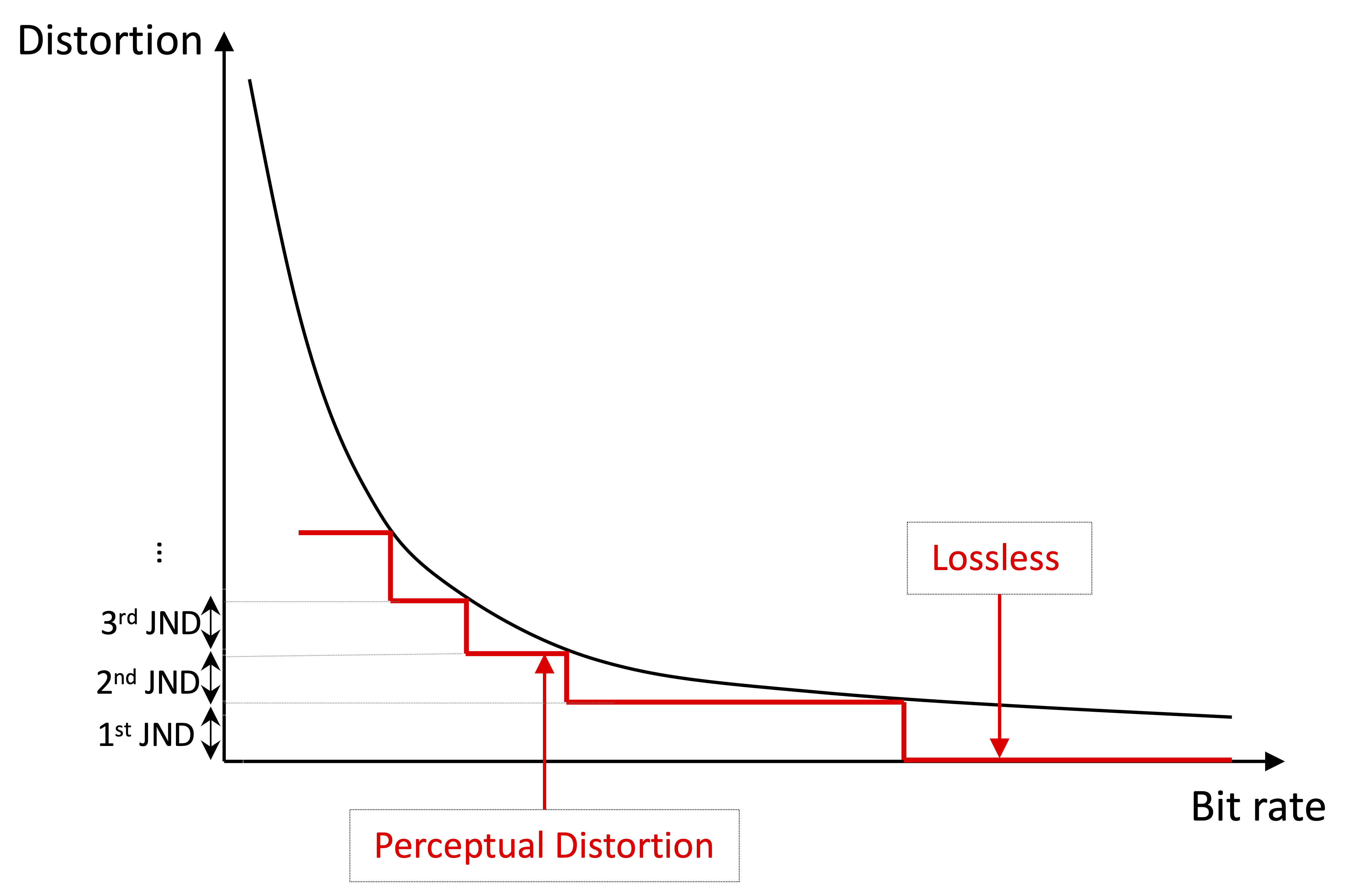

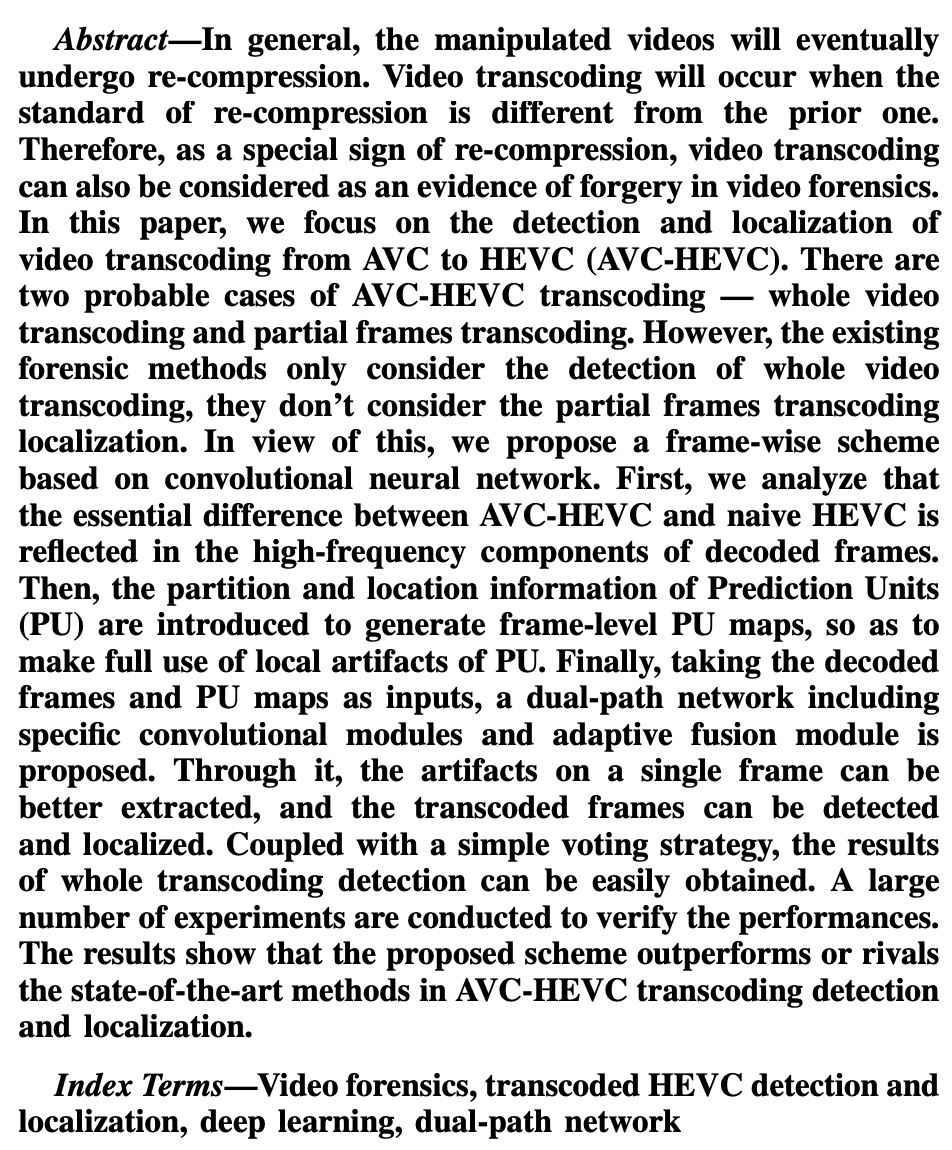

Abstract: Constructing a bitrate ladder that accounts for network conditions, video content, and viewer device type/screen resolution is necessary to deliver the best Quality of Experience (QoE). A large-screen device like a TV needs a high bitrate with high resolution to provide good visual quality, whereas a small-screen device like a phone needs only a low bitrate with low resolution. In addition, encoding high-quality levels at the server side while the network is unable to deliver them incurs unnecessary cost for the content provider. Recently, the Common Media Client Data (CMCD) standard has been proposed, which defines the data that is collected at the client and sent to the server with its HTTP requests. This data is useful in log analysis, quality of service/experience monitoring, and delivery improvements.
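To make the CMCD mechanism concrete, the following minimal Python sketch shows how a server might read CMCD data from a segment request when the data is carried in the `CMCD` query argument. The URL, the session ID, and the custom key `com.example-device` are hypothetical values chosen for illustration; `br`, `ot`, `sid`, `su`, and `tb` are keys from the CMCD specification. The parser is deliberately simplified and assumes that no string value contains a comma.

```python
from urllib.parse import urlsplit, parse_qs


def parse_cmcd(url: str) -> dict:
    """Parse the CMCD query argument of a request URL into a dict.

    Simplified sketch: assumes string values contain no commas.
    """
    # parse_qs percent-decodes the value of the CMCD query argument.
    query = parse_qs(urlsplit(url).query)
    raw = query.get("CMCD", [""])[0]

    params = {}
    for item in raw.split(","):
        if not item:
            continue
        key, sep, value = item.partition("=")
        if not sep:
            # A key without a value denotes boolean true.
            params[key] = True
        elif len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            # Strings are wrapped in double quotes.
            params[key] = value[1:-1]
        else:
            try:
                params[key] = int(value)
            except ValueError:
                # Tokens (e.g. ot=v) and decimals are kept as text.
                params[key] = value
    return params


# Hypothetical request carrying the top bitrate (tb, in kbps) and a
# hypothetical custom key for the device type.
example_url = (
    "https://cdn.example.com/video/seg_1.m4s"
    "?CMCD=br%3D3200%2Ccom.example-device%3D%22tv%22%2Cot%3Dv"
    "%2Csid%3D%22abc%22%2Csu%2Ctb%3D6000"
)
cmcd = parse_cmcd(example_url)
print(cmcd["tb"], cmcd["com.example-device"])
```

CMCD data can also be sent in HTTP request headers instead of the query string; this sketch covers only the query-argument form.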

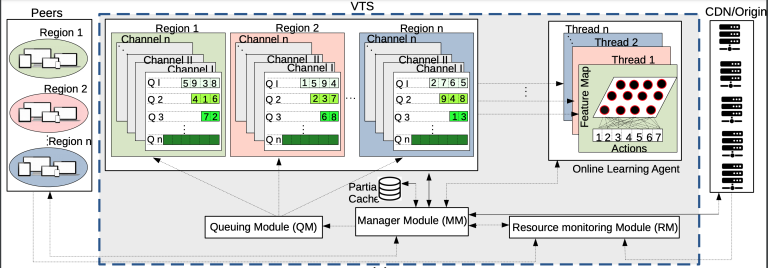

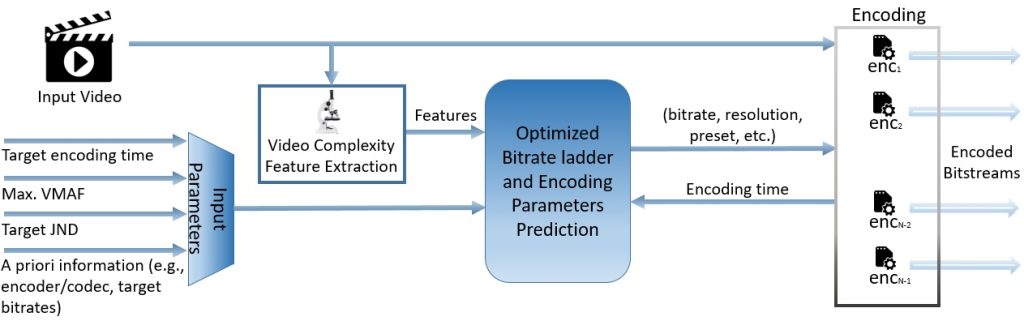

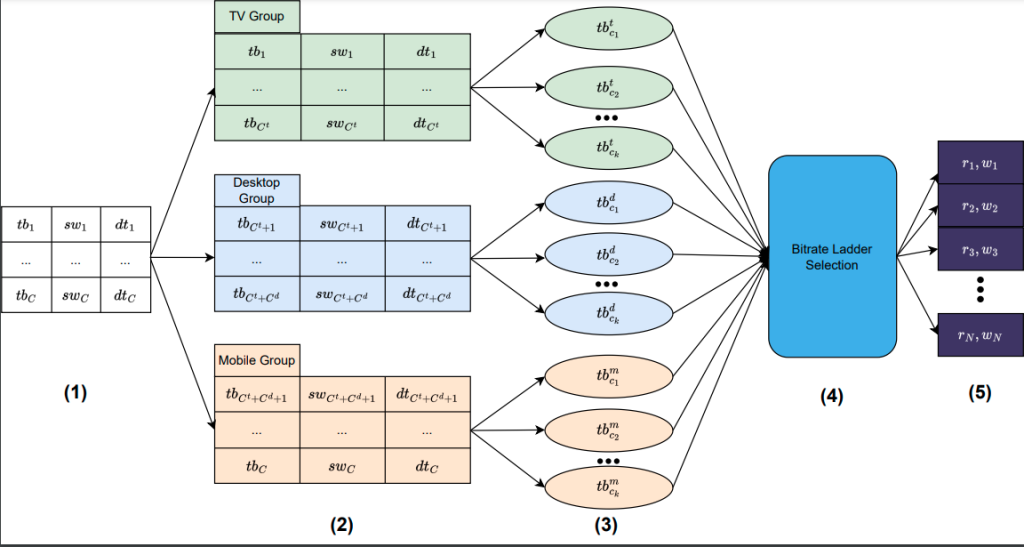

In this paper, we introduce a CMCD-Aware per-Device bitrate LADder construction (CADLAD) that leverages CMCD to address the above issues. CADLAD comprises components at both the client and server sides. The client calculates the top bitrate (tb), a CMCD parameter that indicates the highest bitrate that can be rendered at the client, and sends it to the server together with its device type and screen resolution. The server decides on a suitable bitrate ladder for the client device, whose maximum bitrate and resolution are based on the CMCD parameters, with the purpose of providing maximum QoE while minimizing delivered data. CADLAD has two versions, for Video on Demand (VoD) and live streaming scenarios. CADLAD is client agnostic; hence, it can work with any player and ABR algorithm at the client. The experimental results show that CADLAD is able to increase the QoE by 2.6x while saving 71% of delivered data, compared to an existing bitrate ladder of an available video dataset. We implement our idea within CAdViSE, an open-source testbed, for reproducibility.
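As a rough illustration of the server-side idea, the Python sketch below caps a bitrate ladder by the client's reported top bitrate and screen height. It is not the CADLAD algorithm itself, whose decision logic is not described in the abstract; the `Rendition` type, the `select_ladder` function, and the example ladder values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    bitrate_kbps: int  # encoded bitrate in kbps
    height: int        # vertical resolution in pixels


def select_ladder(full_ladder, tb_kbps, screen_height):
    """Keep only renditions the client can use (hypothetical capping rule).

    A rendition is kept if its bitrate does not exceed the client's top
    bitrate and its height does not exceed the client's screen height.
    """
    capped = [
        r for r in full_ladder
        if r.bitrate_kbps <= tb_kbps and r.height <= screen_height
    ]
    if not capped:
        # Always offer at least the lowest rendition so playback can start.
        capped = [min(full_ladder, key=lambda r: r.bitrate_kbps)]
    return sorted(capped, key=lambda r: r.bitrate_kbps)


# Hypothetical full ladder prepared by the content provider.
full_ladder = [
    Rendition(145, 360),
    Rendition(1600, 720),
    Rendition(4300, 1080),
    Rendition(17000, 2160),
]

# A phone reporting tb = 5000 kbps on a 1080-pixel-high screen.
phone_ladder = select_ladder(full_ladder, tb_kbps=5000, screen_height=1080)
print([r.bitrate_kbps for r in phone_ladder])
```

In this sketch the 2160p rendition is never offered to the phone, which reflects the goal stated above of avoiding the delivery of quality levels that the device or network cannot use.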