2022 IEEE International Conference on Multimedia and Expo (ICME)

July 18-22, 2022 | Taipei, Taiwan


Vignesh V Menon (Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

Abstract:

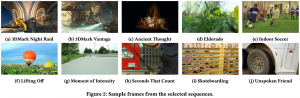

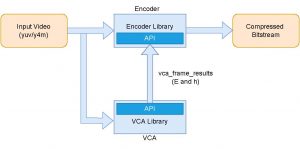

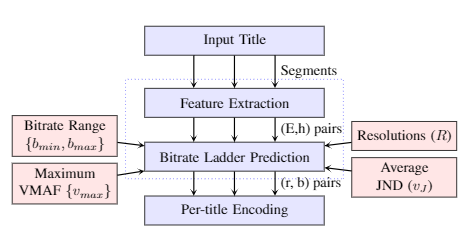

In live streaming applications, a fixed set of bitrate-resolution pairs (known as a bitrate ladder) is typically used for simplicity and efficiency, avoiding the additional encoding run-time required to find the optimum bitrate-resolution pairs for each video content. However, an optimized bitrate ladder may result in (i) decreased storage or delivery costs and/or (ii) increased Quality of Experience (QoE). This paper introduces a perceptually-aware per-title encoding (PPTE) scheme for video streaming applications. In this scheme, optimized bitrate-resolution pairs are predicted online based on the Just Noticeable Difference (JND) in quality perception, so that perceptually similar representations are not added to the bitrate ladder. To this end, low-complexity Discrete Cosine Transform (DCT)-energy-based spatial and temporal features are extracted for each video segment. Experimental results show that, on average, PPTE yields bitrate savings of 16.47% and 27.02% at the same PSNR and VMAF, respectively, compared to the reference HTTP Live Streaming (HLS) bitrate ladder, without any noticeable additional streaming latency, along with a 30.69% cumulative decrease in the storage space needed for the various representations.
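The idea of leaving perceptually similar representations out of the ladder can be illustrated with a minimal sketch. This is not the authors' implementation: the `Representation` type, the `prune_ladder` helper, the candidate values, and the JND threshold of 6 VMAF points are all assumptions chosen for illustration, and the predicted quality scores are taken as given rather than derived from DCT-energy features.

```python
from typing import List, NamedTuple


class Representation(NamedTuple):
    """Hypothetical candidate rung of a bitrate ladder."""
    bitrate_kbps: int
    height: int
    predicted_vmaf: float  # assumed to come from some quality predictor


def prune_ladder(candidates: List[Representation],
                 jnd: float = 6.0) -> List[Representation]:
    """Keep a rung only if its predicted quality is at least one JND
    above the previously kept rung (jnd=6.0 is an assumed threshold)."""
    kept: List[Representation] = []
    for rep in sorted(candidates, key=lambda r: r.bitrate_kbps):
        if not kept or rep.predicted_vmaf - kept[-1].predicted_vmaf >= jnd:
            kept.append(rep)
    return kept


# Made-up candidates for a single video segment (illustrative values only).
candidates = [
    Representation(145, 360, 40.0),
    Representation(300, 432, 52.0),
    Representation(600, 540, 55.0),   # only 3 points above the 300 kbps rung
    Representation(900, 540, 63.0),
    Representation(1600, 720, 66.0),  # only 3 points above the 900 kbps rung
    Representation(3400, 1080, 80.0),
]

ladder = prune_ladder(candidates)
kept_bitrates = [rep.bitrate_kbps for rep in ladder]
print(kept_bitrates)
```

In this toy input, the 600 kbps and 1600 kbps rungs are dropped because each is less than one assumed JND above the rung kept before it, which is how pruning of this kind can reduce the storage needed for a segment's representations.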

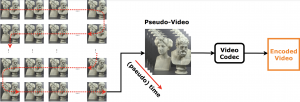

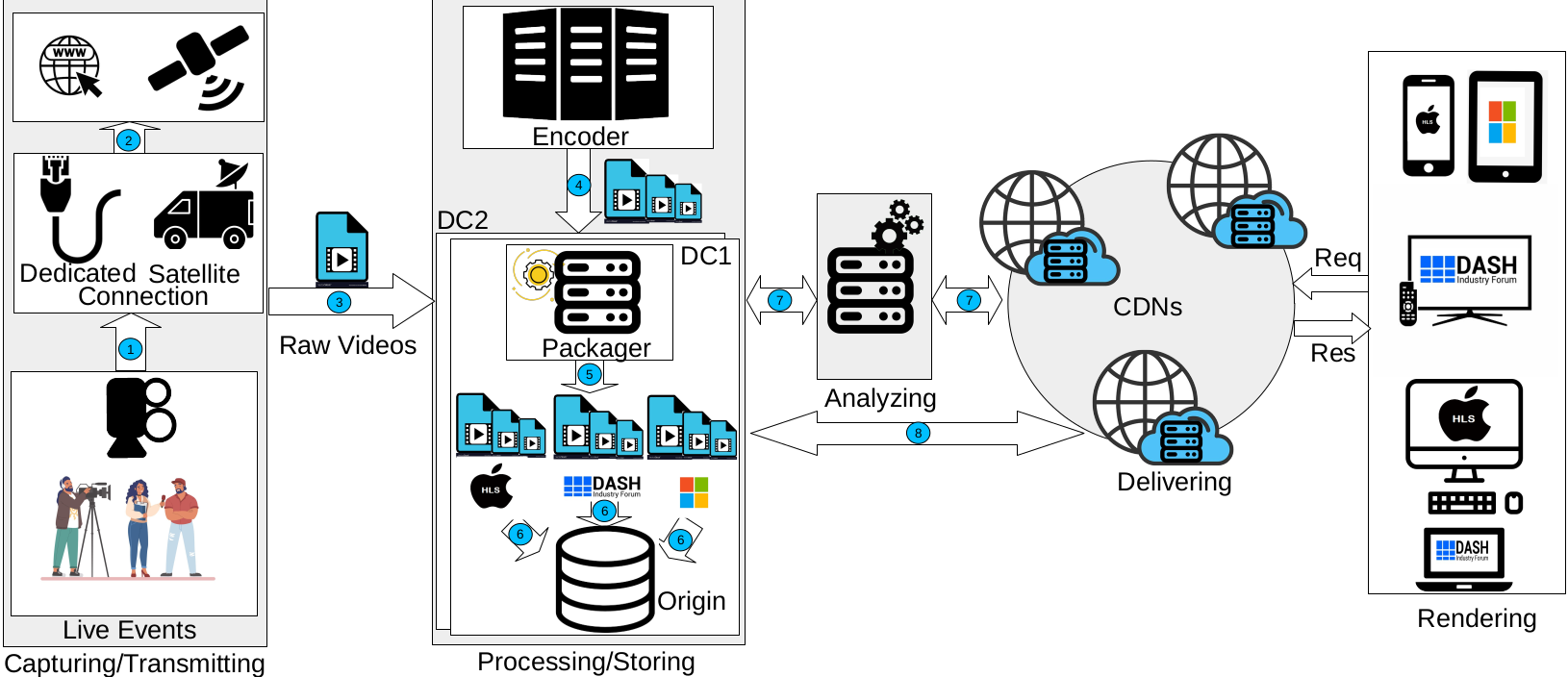

Architecture of PPTE
