Alpen-Adria Universität Klagenfurt, Institute of Information Technology; Chinese Academy of Sciences, Institute of Automation; Johannes-Kepler-Universität Linz, Intelligent Transport Systems - Sustainable Transport Logistics 4.0; Logoplan – Logistik, Verkehrs und Umweltschutz Consulting GmbH; Intact GmbH; Chinese Academy of Sciences, Institute of Computing Technology

Distributed and Parallel Systems

Title: Boosting the Impact of Extreme and Sustainable Graph Processing for Urgent Societal Challenges in Europe

The First Workshop on Serverless, Extreme-Scale, and Sustainable Graph Processing Systems (GraphSys), Co-located with ICPE 2023, April 15-19, Coimbra, Portugal (https://sites.google.com/view/graphsys23/home)

Authors: Nuria de Lama Sanchez (International Data Corporation, Madrid, Spain), Peter Haase (metaphacts GmbH, Walldorf, Germany), Dumitru Roman (SINTEF AS), and Radu Prodan (University of Klagenfurt, Klagenfurt, Austria)

Abstract: We explore the potential of the Graph-Massivizer project funded by the Horizon Europe research and innovation program of the European Union to boost the impact of extreme and sustainable graph processing for mitigating existing urgent societal challenges. Current graph processing platforms do not support diverse workloads, models, languages, and algebraic frameworks. Existing specialized platforms are difficult to use by non-experts and suffer from limited portability and interoperability, leading to redundant efforts and inefficient resource and energy consumption due to vendor and even platform lock-in. While synthetic data emerged as an invaluable resource overshadowing actual data for developing robust artificial intelligence analytics, graph generation remains a challenge due to extreme dimensionality and complexity. On the European scale, this practice is unsustainable and, thus, threatens the possibility of creating a climate-neutral and sustainable economy based on graph data. Making graph processing sustainable is essential but needs credible evidence. The grand vision of the Graph-Massivizer project is a technological solution, coupled with field experiments and experience-sharing, for a high-performance and sustainable graph processing of extreme data with a proper response for any need and organizational size by 2030.

Radu Prodan presented the Graph-Massivizer project at the “Get-to-know” introductory and welcome day, part of Data Spaces Support Centre activities on 23 February.

Radu Prodan presented the Graph-Massivizer project at the CERCIRAS COST action on 7 February in Gdansk, Poland.

Authors: Zahra Najafabadi Samani (Alpen-Adria-Universität Klagenfurt, Austria), Narges Mehran (Alpen-Adria-Universität Klagenfurt, Austria), Dragi Kimovski (Alpen-Adria-Universität Klagenfurt, Austria), Radu Prodan (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: The accelerating growth of modern distributed applications with low delivery deadlines leads to a paradigm shift towards the multi-tier computing continuum. However, the geographical dispersion, heterogeneity, and availability of the continuum resources may result in failures and quality of service degradation, significantly negating its advantages and lowering users’ satisfaction. We propose in this paper a proactive application placement PROS method relying on distributed coordination to prevent the quality of service violations through service-level agreements on the computing continuum. PROS employs a sigmoid function with adaptive weights for the different parameters to predict the service level agreement assurance of devices based on their past credentials and current capabilities. We evaluate PROS using two application workloads with different traffic stress levels up to 90 million services on a real testbed with 600 heterogeneous instances deployed over eight geographical locations. The results show that PROS increases the success rate by 7-33%, reduces the response time by 16-38%, and increases the deadline satisfaction rate by 19-42% compared to the two related work methods. A comprehensive simulation study with 1000 devices and a workload of up to 670 million services confirms the scalability of the results.
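The abstract describes a sigmoid function with adaptive weights over device parameters. As a rough illustration of that general idea only, and not the PROS formulation itself, the following Python sketch scores a device with a sigmoid of a weighted feature sum and nudges the weights after each observed outcome. The feature names, the initial weights, and the update rule (a single gradient step on the log-loss) are all assumptions made for this example.

```python
import math


def sla_assurance(features, weights, bias=0.0):
    """Return a score in (0, 1): the sigmoid of a weighted sum of device features.

    `features` and `weights` are dicts keyed by hypothetical parameter names;
    feature values are assumed to be normalized to [0, 1].
    """
    z = bias + sum(weights[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def adapt_weights(weights, features, observed, rate=0.1):
    """Assumed adaptation rule: one gradient step on the log-loss.

    `observed` is 1.0 if the device met its SLA on the last request, else 0.0.
    """
    error = observed - sla_assurance(features, weights)
    return {name: w + rate * error * features[name] for name, w in weights.items()}


# Hypothetical device: past success rate plus current free capacity.
device = {"past_success_rate": 0.9, "free_cpu": 0.5, "free_memory": 0.4}
weights = {"past_success_rate": 1.0, "free_cpu": 1.0, "free_memory": 1.0}

score_before = sla_assurance(device, weights)
weights_after = adapt_weights(weights, device, observed=1.0)
score_after = sla_assurance(device, weights_after)
```

In this sketch a device that keeps meeting its SLA sees its score rise over time, which is the kind of behavior a placement method could use to prefer reliable devices.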

HiPEAC magazine https://www.hipeac.net/news/#/magazine/

HiPEACINFO 68, pages 27-28.

Authors: Dragi Kimovski (Alpen-Adria-Universität Klagenfurt, Austria), Narges Mehran (Alpen-Adria-Universität Klagenfurt, Austria), Radu Prodan (Alpen-Adria-Universität Klagenfurt, Austria), Souvik Sengupta (iExec Blockchain Tech, France), Anthony Simonet-Boulogne (iExec Blockchain Tech, France), Ioannis Plakas (UBITECH, Greece), Giannis Ledakis (UBITECH, Greece), and Dumitru Roman (University of Oslo and SINTEF AS, Norway)

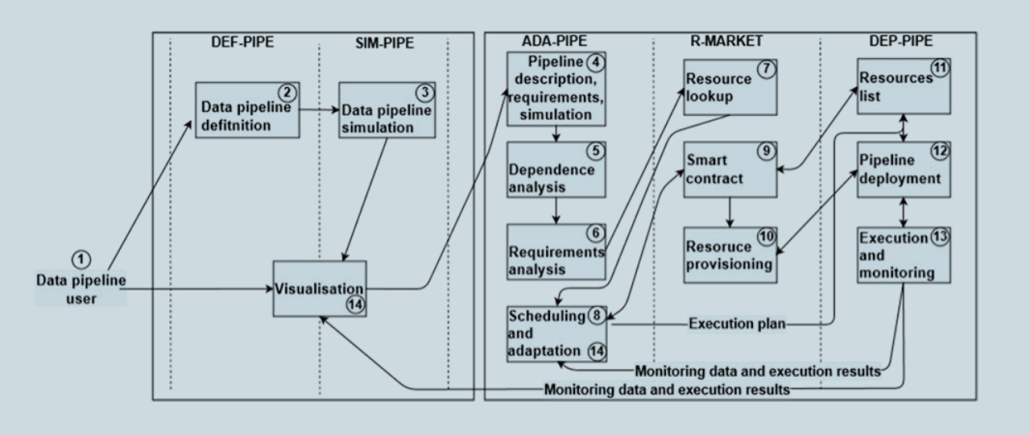

Abstract: Modern big-data pipeline applications, such as machine learning, encompass complex workflows for real-time data gathering, storage and analysis. Big-data pipelines often have conflicting requirements, such as low communication latency and high computational speed. These require different kinds of computing resources, from cloud to edge, distributed across multiple geographical locations – in other words, the computing continuum. The Horizon 2020 DataCloud project is creating a novel paradigm for big-data pipeline processing over the computing continuum, covering the complete lifecycle of big-data pipelines. To overcome the runtime challenges associated with automating big-data pipeline processing on the computing continuum, we’ve created the DataCloud architecture. By separating the discovery, definition, and simulation of big-data pipelines from runtime execution, this architecture empowers domain experts with little infrastructure or software knowledge to take an active part in defining big-data pipelines.

This work received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 101016835 (DataCloud).

Journal: Sensors

Authors: Akif Quddus Khan, Nikolay Nikolov, Mihhail Matskin, Radu Prodan, Dumitru Roman, Bekir Sahin, Christoph Bussler, Ahmet Soylu

Abstract: Big data pipelines are developed to process data characterized by one or more of the three big data features, commonly known as the three Vs (volume, velocity, and variety), through a series of steps (e.g., extract, transform, and move), laying the groundwork for the use of advanced analytics and ML/AI techniques. The computing continuum (i.e., cloud/fog/edge) allows access to a virtually infinite amount of resources, where data pipelines could be executed at scale; however, the implementation of data pipelines on the continuum is a complex task that needs to take computing resources, data transmission channels, triggers, data transfer methods, integration of message queues, etc., into account. The task becomes even more challenging when data storage is considered as part of the data pipelines. Local storage is expensive, hard to maintain, and comes with several challenges (e.g., data availability, data security, and backup). The use of cloud storage, i.e., storage-as-a-service (StaaS), instead of local storage has the potential of providing more flexibility in terms of scalability, fault tolerance, and availability. In this article, we propose a generic approach to integrate StaaS with data pipelines, i.e., computation on an on-premise server or on a specific cloud, but integration with StaaS, and develop a ranking method for available storage options based on five key parameters: cost, proximity, network performance, server-side encryption, and user weights/preferences. The evaluation carried out demonstrates the effectiveness of the proposed approach in terms of data transfer performance, utility of the individual parameters, and feasibility of dynamic selection of a storage option based on four primary user scenarios.

Radu Prodan participated in the panel on “Fueling Industrial AI with Data Pipelines” and presented the Graph-Massivizer project at the European Big Data Value Forum on November 22 in Prague, Czech Republic.