On 22.08.2023, Reza Farahani successfully defended his doctoral thesis, titled “Network-Assisted Delivery of Adaptive Video Streaming Services through CDN, SDN, and MEC”, supervised by Univ.-Prof. DI Dr. Hermann Hellwagner and Univ.-Prof. DI Dr. Christian Timmerer at ITEC. His defense was chaired by Assoc. Prof. DI Dr. Klaus Schöffmann, and the examiners were Prof. Dr. Tobias Hoßfeld (University of Würzburg, Germany) and Prof. Dr. Filip De Turck (Ghent University, Belgium).

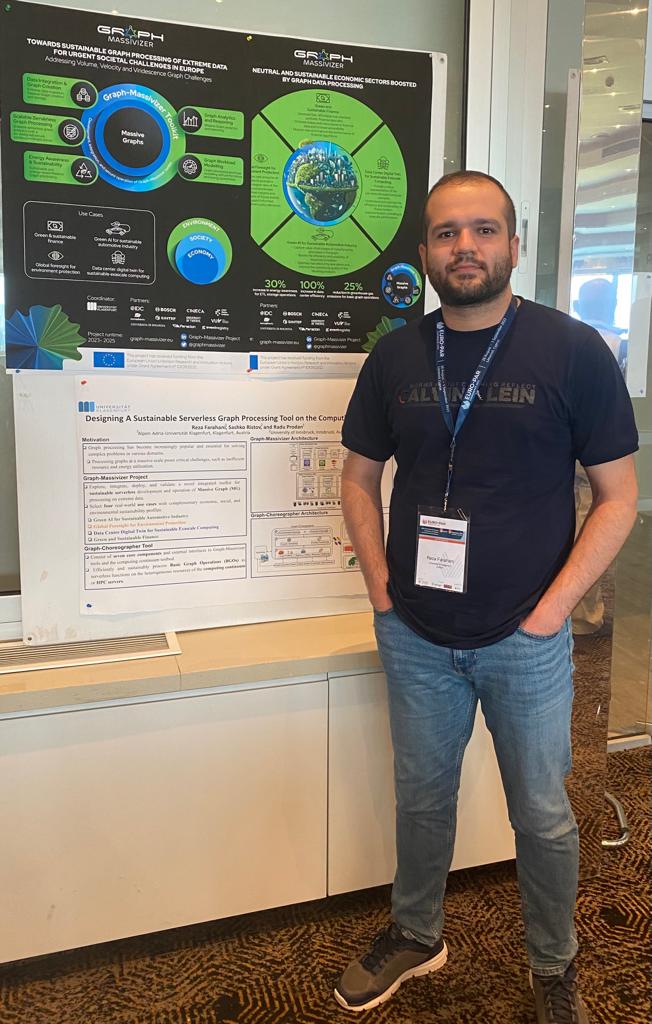

During his doctoral studies, he contributed to the ATHENA and Graph Massivizer projects.

Reza will continue at ITEC as a postdoctoral researcher in the Graph Massivizer project.

The abstract of his dissertation is as follows:

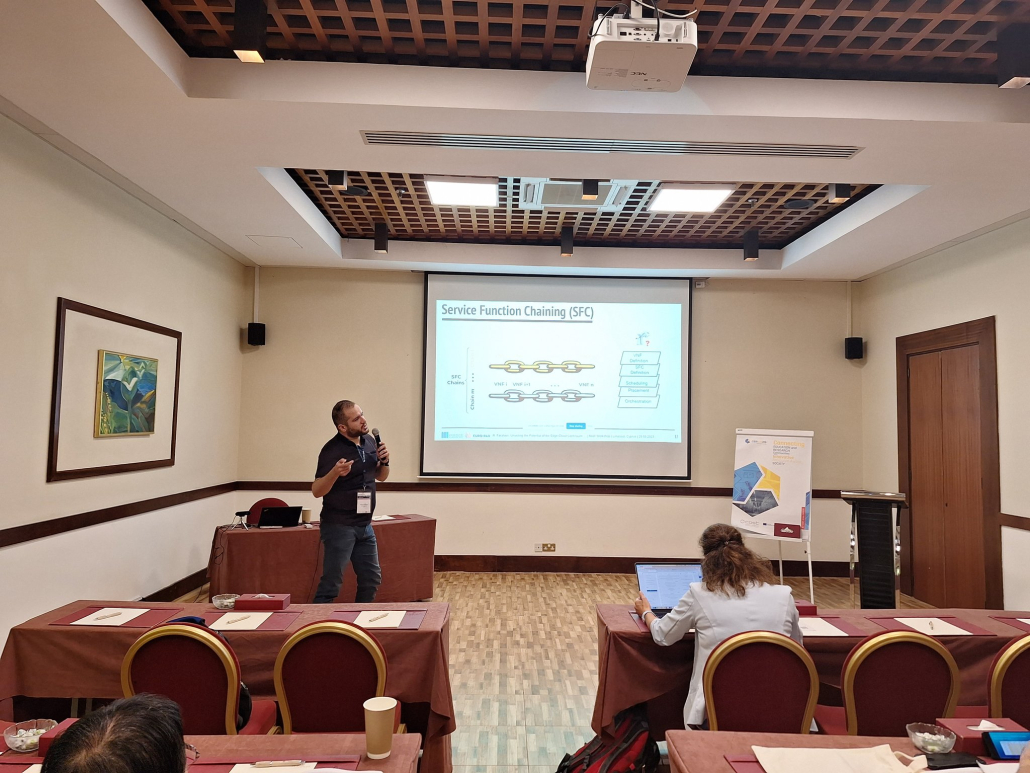

Multimedia applications, mainly video streaming services, are currently the dominant source of network load worldwide. In recent VoD and live video streaming services, traditional streaming delivery techniques have been replaced by adaptive solutions based on the HTTP protocol. Current trends toward high-resolution and low-latency VoD and live video streaming increase E2E bandwidth demand and impose stringent delay requirements. To meet these demands, video providers rely on CDNs to deliver scalable video streaming services. Supporting future streaming scenarios involving millions of users requires increasing the CDNs’ efficiency. It is widely agreed that these requirements can be satisfied by adopting emerging networking techniques in Network-Assisted Video Streaming (NAVS) methods. Motivated by this, this thesis goes one step beyond traditional, purely client-based HAS algorithms by incorporating in-network components with a broader view of the network, resulting in NAVS solutions that are completely transparent to HAS clients.

Our first contribution leverages the capabilities of the SDN, NFV, and MEC paradigms to introduce ES-HAS and CSDN as edge- and SDN-assisted frameworks. ES-HAS and CSDN introduce VNFs named VRP servers at the edge of an SDN-enabled network to collect HAS clients’ requests and retrieve networking information. The SDN controller in these systems manages a single-domain network. VRP servers run optimization models as server/segment selection policies that serve clients’ requests with the shortest fetching time, either by selecting the most appropriate cache server and video segment quality or by reconstructing the requested quality through transcoding at the edge. Deploying ES-HAS and CSDN on cloud-based testbeds and estimating users’ QoE with objective metrics demonstrates that clients’ requests can be served with 40% higher QoE and 63% lower bandwidth usage compared to state-of-the-art approaches.

Our second contribution designs an architecture that simultaneously supports various types of video streaming (live and VoD), considering their diverse QoE and latency requirements. To this end, the SDN, NFV, and MEC paradigms are leveraged, and three VNFs, i.e., VPF, VCF, and VTF, are designed. We build chains of these functions using the SFC paradigm, utilize all CDN and edge server resources, and present SARENA, an SFC-enabled architecture for adaptive video streaming applications. We equip SARENA’s SDN controller with a lightweight request scheduler, which makes it deployable in practical environments, and an edge configurator, which dynamically scales edge servers based on service requirements. Experimental results show that SARENA outperforms baseline schemes, achieving 39.6% higher user QoE, 29.3% lower E2E latency, and 30% lower backhaul traffic usage for live and VoD services.

Our third contribution uses the idle resources of edge servers and the capabilities of the SDN controller to establish collaboration among edge servers as well as between edge servers and the SDN controller. We introduce two collaborative edge-assisted frameworks named LEADER and ARARAT. LEADER organizes sets of actions in an Action Tree, formulates the problem as a centralized optimization model that improves HAS clients’ serving time subject to the network’s and edge servers’ resource constraints, and proposes a lightweight heuristic algorithm to solve the model. ARARAT extends LEADER’s Action Tree, considers network cost in the optimization, devises multiple heuristic algorithms, and evaluates them in extensive scenarios. Evaluation results show that LEADER and ARARAT improve users’ QoE by 22%, decrease the streaming cost by 47%, and improve network utilization by 13% compared to other approaches.

Our final contribution focuses on integrating P2P networks with CDNs using NFV and edge computing techniques, presenting RICHTER and ALIVE as hybrid P2P-CDN frameworks. RICHTER and ALIVE make use of HAS clients’ potentially idle computational resources, in addition to their available bandwidth, to provide distributed video processing services such as video transcoding and video super-resolution. Both frameworks introduce multi-layer architectures and design Action Trees that consider all feasible resources for serving clients’ requests with acceptable latency and quality. Moreover, RICHTER proposes an online learning method, while ALIVE uses a lightweight algorithm distributed over in-network virtualized components, which are designed to act as decision makers in large-scale practical scenarios. Results show that RICHTER and ALIVE improve users’ QoE by 22%, decrease the cost incurred by the streaming service provider by 34%, shorten clients’ serving latency by 39%, reduce edge server energy consumption by 31%, and reduce backhaul bandwidth usage by 24% compared to other approaches.