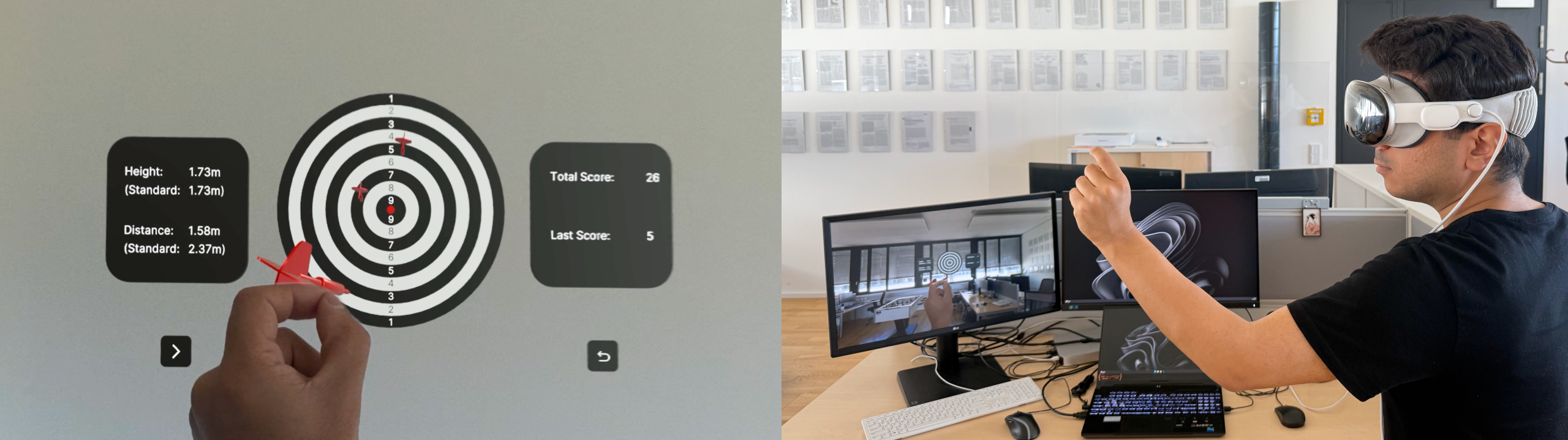

SDART: Spatial Dart AR Simulation with Hand-Tracked Input

ACM Multimedia 2025

October 27 – October 31, 2025

Dublin, Ireland


Milad Ghanbari (AAU, Austria), Wei Zhou (Cardiff, UK), Cosmin Stejerean (Meta, US), Christian Timmerer (AAU, Austria), Hadi Amirpour (AAU, Austria)

Abstract: We present a physics-driven 3D dart-throwing interaction system for Apple Vision Pro (AVP). It is built with the Unity 6 engine and runs in augmented reality (AR) mode on the device. The system uses Unity's PolySpatial and Apple's ARKit software development kits (SDKs) for hand tracking and input, so users can spawn, grab, and throw virtual darts much as they would real ones. By combining physics simulation with AVP's controller-free input, the application lets users manipulate objects realistically in an unbounded spatial volume. Because it implements spatial distance measurement, scoring logic, and recording of user performance, the system supports quality of experience (QoE) studies of interactive applications. To evaluate the perceived quality and realism of the interaction, we conducted a subjective study with 10 participants using a structured questionnaire. The study measured several aspects of the user experience, including visual and spatial realism, control fidelity, depth perception, immersiveness, and enjoyment. Results show high mean opinion scores (MOS) across the key dimensions.
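The abstract mentions spatial distance measurement, scoring logic, and MOS-based evaluation without giving details. The following is a minimal sketch, not the authors' implementation, of how these pieces could fit together. It is written in Python rather than the Unity C# the system uses. It assumes a standard regulation steel-tip dartboard, with the dart's hit point already projected into 2D board coordinates in millimetres. The radii, sector order, the functions `score_hit` and `mos_with_ci`, and the demo ratings are all hypothetical and are not taken from the paper. The MOS helper reports a Student-t confidence interval, which is a common choice for small rater panels like the 10 participants here.

```python
import math
from statistics import mean, stdev

# Hypothetical constants: radii (mm) of a standard regulation steel-tip
# dartboard, measured from the bullseye. The paper does not state its board model.
INNER_BULL_R = 6.35
OUTER_BULL_R = 15.9
TRIPLE_INNER_R, TRIPLE_OUTER_R = 99.0, 107.0
DOUBLE_INNER_R, DOUBLE_OUTER_R = 162.0, 170.0

# Sector values clockwise, starting with the sector centred at 12 o'clock.
SECTORS = [20, 1, 18, 4, 13, 6, 10, 15, 2, 17, 3, 19, 7, 16, 8, 11, 14, 9, 12, 5]


def score_hit(x_mm: float, y_mm: float) -> int:
    """Score a dart hit at (x_mm, y_mm) on the board plane.

    The origin is the bullseye, +x points right and +y points up.
    """
    r = math.hypot(x_mm, y_mm)  # spatial distance from the bullseye
    if r <= INNER_BULL_R:
        return 50
    if r <= OUTER_BULL_R:
        return 25
    if r > DOUBLE_OUTER_R:
        return 0  # outside the scoring area
    # Angle measured clockwise from 12 o'clock, in [0, 360).
    angle = math.degrees(math.atan2(x_mm, y_mm)) % 360.0
    # Each sector is 18 degrees wide and centred on its axis, so offset by 9.
    base = SECTORS[int(((angle + 9.0) % 360.0) // 18.0)]
    if TRIPLE_INNER_R <= r <= TRIPLE_OUTER_R:
        return 3 * base
    if r >= DOUBLE_INNER_R:
        return 2 * base  # r <= DOUBLE_OUTER_R is already guaranteed above
    return base


def mos_with_ci(ratings: list[float], t_crit: float = 2.262) -> tuple[float, float]:
    """Return the mean opinion score and the half-width of its confidence interval.

    The default t_crit = 2.262 is the two-sided 95% Student-t critical value
    for 9 degrees of freedom (10 raters); adjust it for other panel sizes.
    """
    m = mean(ratings)
    half_width = t_crit * stdev(ratings) / math.sqrt(len(ratings))
    return m, half_width


if __name__ == "__main__":
    print(score_hit(0.0, 103.0))  # lands in the triple ring of the top sector (20)
    # Made-up 5-point ratings for one questionnaire item, NOT the study's data.
    demo_ratings = [5, 4, 5, 4, 4, 5, 3, 5, 4, 5]
    mos, ci = mos_with_ci(demo_ratings)
    print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```

The sketch keeps scoring separate from the physics: the engine only needs to report where the dart stuck, and the score depends only on distance and angle from the bullseye. With many more raters, the t critical value approaches the familiar normal value of 1.96.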