YTLive: A Dataset of Real-World YouTube Live Streaming Sessions

IEEE/IFIP Network Operations and Management Symposium (NOMS) 2026

Rome, Italy, 18–22 May 2026

Abstract

Conference: IEEE International Conference on Image Processing 2026

13–17 September 2026

Tampere, Finland

Special Session Proposal 1: Generative Visual Coding: Emerging Paradigms for Future Communication

Special Session Proposal 2: Visual information processing for human-centered immersive experiences

Author: Hadi Amirpourazarian

Conference: 24th International Conference on e-Society (ES 2026)

Paper Title: Gamification in the Age of AI: Surveying Player Perceptions of Motivation, Manipulation, and Data Protection

Authors: Kurt Horvath, Tom Tucek

Abstract: Modern games and applications increasingly employ artificial intelligence (AI), user modeling, and gamification to influence user behavior, sustain engagement, and monetize attention. Although these mechanisms can enhance motivation and enjoyment, they also raise concerns about manipulation, personal data usage, and transparency. To examine how players perceive these dynamics, we conducted a survey with 28 adult participants. Respondents expressed strong concerns about the collection and exploitation of personal information in games and gamified applications, while also reporting low awareness and a limited sense of control over how their data is used. They rated progression features such as leveling systems and leaderboards as motivating. In contrast, they rated retention-focused mechanics such as daily login rewards and random reward reveals as highly manipulative, reporting that these systems can create a fear of missing out or resemble gambling. Participants also expressed support for regulatory measures that limit exploitative design and protect vulnerable users. These findings reveal a clear gap between increasingly powerful engagement strategies and user trust, illustrating the need for more responsible and transparent gamification practices.

2026 IEEE International Conference on Acoustics, Speech, and Signal Processing

4–8 May 2026

Barcelona, Spain

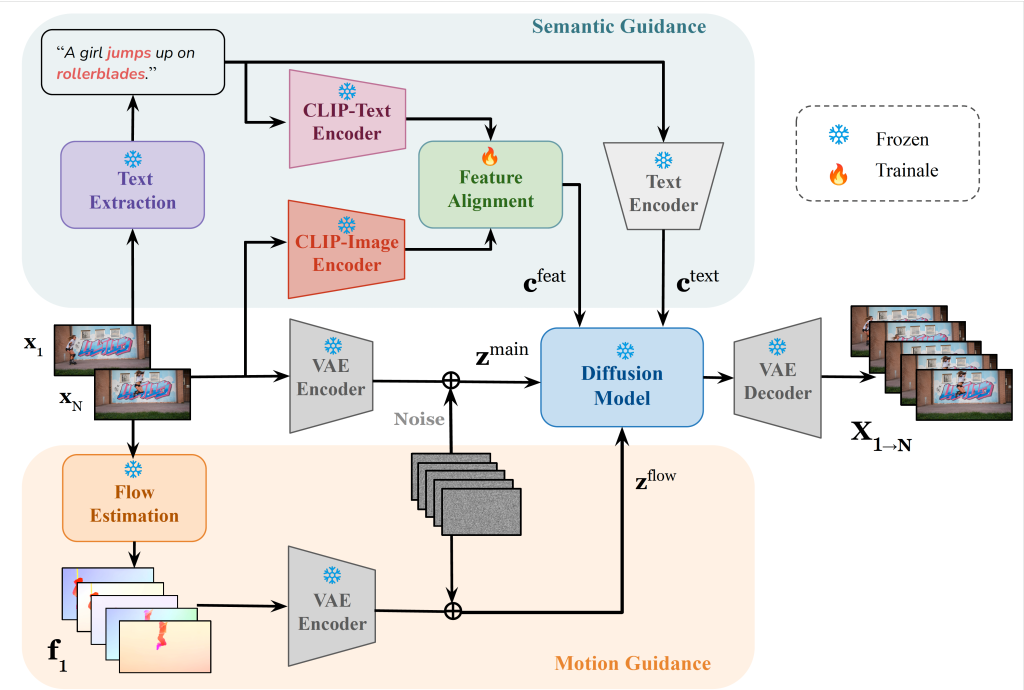

Paper title: Dual-guided Generative Frame Interpolation

Yiying Wei (AAU, Austria), Hadi Amirpour (AAU, Austria), and Christian Timmerer (AAU, Austria)

Abstract: Video frame interpolation (VFI) aims to generate intermediate frames between given keyframes to enhance temporal resolution and visual smoothness. While conventional optical flow–based methods and recent generative approaches achieve promising results, they often struggle with large displacements and fail to maintain temporal coherence and semantic consistency. In this work, we propose dual-guided generative frame interpolation (DGFI), a framework that integrates semantic guidance from vision-language models and flow guidance into a pre-trained diffusion-based image-to-video (I2V) generator. Specifically, DGFI extracts textual descriptions and injects multimodal embeddings to capture high-level semantics, while estimated motion guidance provides smooth transitions. Experiments on public datasets demonstrate the advantage of our dual-guided method over state-of-the-art approaches.
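For context, the conventional optical-flow baseline mentioned above can be sketched in a few lines. This is not DGFI (which relies on a diffusion-based I2V generator); it is a minimal pure-Python illustration that assumes a known forward flow F(0→1), linear motion between the keyframes, and nearest-neighbour sampling. The names `interpolate_linear_flow` and `_sample` are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]               # grayscale image indexed as frame[y][x]
Flow = List[List[Tuple[float, float]]]  # per-pixel (u, v) displacement from frame 0 to frame 1


def _sample(frame: Frame, x: float, y: float) -> float:
    """Nearest-neighbour lookup, clamped at the image border."""
    h, w = len(frame), len(frame[0])
    xi = min(max(int(round(x)), 0), w - 1)
    yi = min(max(int(round(y)), 0), h - 1)
    return frame[yi][xi]


def interpolate_linear_flow(f0: Frame, f1: Frame, flow01: Flow, t: float) -> Frame:
    """Synthesize the frame at time t in [0, 1] between f0 and f1.

    Assumes linear motion: the flow from time t back to frame 0 is approximated
    by -t * F(0->1) and the flow to frame 1 by (1 - t) * F(0->1), with the flow
    read at the target pixel (a common simplification). The two backward-warped
    candidates are blended according to their temporal distance.
    """
    h, w = len(f0), len(f0[0])
    out: Frame = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            u, v = flow01[y][x]
            from0 = _sample(f0, x - t * u, y - t * v)
            from1 = _sample(f1, x + (1 - t) * u, y + (1 - t) * v)
            out[y][x] = (1 - t) * from0 + t * from1
    return out
```

With a bright pixel moving two pixels to the right, the midpoint frame places it halfway. Large displacements and occlusions are exactly where this kind of linear warping breaks down, which is the failure mode the abstract attributes to flow-based methods.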

Conference: International Symposium on Biomedical Imaging (ISBI 2026), April 8-11, 2026, London, UK

Paper Title: SAM-Fed: SAM-Guided Federated Semi-Supervised Learning for Medical Image Segmentation

Authors: Sahar Nasirihaghighi, Negin Ghamsarian, Yiping Li, Marcel Breeuwer, Raphael Sznitman, and Klaus Schoeffmann

Abstract:

Medical image segmentation is clinically important, yet data privacy and the cost of expert annotation limit the availability of labeled data. Federated semi-supervised learning (FSSL) offers a solution but faces two challenges: pseudo-label reliability depends on the strength of local models, and client devices often require compact or heterogeneous architectures due to limited computational resources. These constraints reduce the quality and stability of pseudo-labels, while large models, though more accurate, cannot be trained or used for routine inference on client devices. We propose SAM-Fed, a federated semi-supervised framework that leverages a high-capacity segmentation foundation model to guide lightweight clients during training. SAM-Fed combines dual knowledge distillation with an adaptive agreement mechanism to refine pixel-level supervision. Experiments on skin lesion and polyp segmentation across homogeneous and heterogeneous settings show that SAM-Fed consistently outperforms state-of-the-art FSSL methods.
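The idea of refining pixel-level supervision through agreement can be illustrated with a simplified sketch. The abstract does not detail SAM-Fed's adaptive agreement mechanism, so the code below assumes a foundation-model (teacher) foreground probability map and a lightweight client (student) probability map, and keeps a pseudo-label only where both binarized predictions agree and the teacher is confident. A fixed `conf_threshold` stands in for the adaptive part; `agreement_pseudo_labels` is a hypothetical name.

```python
from typing import List, Optional

ProbMap = List[List[float]]            # foreground probability per pixel, in [0, 1]
LabelMap = List[List[Optional[int]]]   # 1 = foreground, 0 = background, None = ignored in the loss


def agreement_pseudo_labels(teacher: ProbMap, student: ProbMap,
                            conf_threshold: float = 0.8) -> LabelMap:
    """Keep a pixel's pseudo-label only where teacher and student agree and the
    teacher is confident; all other pixels are masked out (None)."""
    labels: LabelMap = []
    for t_row, s_row in zip(teacher, student):
        row: List[Optional[int]] = []
        for p_t, p_s in zip(t_row, s_row):
            y_t, y_s = int(p_t >= 0.5), int(p_s >= 0.5)
            confidence = max(p_t, 1.0 - p_t)  # teacher's probability for its chosen class
            row.append(y_t if y_t == y_s and confidence >= conf_threshold else None)
        labels.append(row)
    return labels
```

Masked pixels would simply be excluded from the client's unsupervised loss, so disagreement between a strong and a weak model reduces, rather than corrupts, the supervision signal.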

Paper title: ELLMPEG: An Edge-based Agentic LLM Video Processing Tool

Authors: Zoha Azimi, Reza Farahani, Radu Prodan, Christian Timmerer

Venue: MMSys’26, the 17th ACM Multimedia Systems Conference, Hong Kong SAR, 4–8 April 2026

Abstract:

Large language models (LLMs), the foundation of generative AI systems such as ChatGPT, are transforming many fields and applications, including multimedia, by enabling more advanced content generation, analysis, and interaction. However, cloud-based LLM deployments face three key limitations: high computational and energy demands, privacy and reliability risks from remote processing, and recurring API costs. Recent advances in agentic AI, especially in structured reasoning and tool use, offer a better way to exploit open, locally deployed tools and LLMs. This paper presents ELLMPEG, an edge-enabled agentic LLM framework for the automated generation of video-processing commands. ELLMPEG integrates tool-aware Retrieval-Augmented Generation (RAG) with iterative self-reflection to produce and locally verify executable FFmpeg and VVenC commands directly at the edge, eliminating reliance on external cloud APIs. To evaluate ELLMPEG, we collect a dedicated prompt dataset comprising 480 diverse queries covering different categories of FFmpeg and Versatile Video Codec (VVC) encoder (VVenC) commands. We validate command-generation accuracy and evaluate four open-source LLMs in terms of command validity, tokens generated per second, inference time, and energy efficiency. We also execute the generated commands to assess their runtime correctness and practical applicability. Experimental results show that Qwen2.5, when augmented with the ELLMPEG framework, achieves an average command-generation accuracy of 78% with zero recurring API cost, outperforming all other open-source models on both the FFmpeg and VVenC datasets.
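The generate, verify, and reflect loop described above can be sketched roughly as follows. This is not the ELLMPEG implementation: the LLM is replaced by a hypothetical `generate` callable, the retrieved RAG documents are reduced to a plain `context` string, and "local verification" is reduced to a cheap static check instead of actually running FFmpeg or VVenC. `MAX_ROUNDS`, `KNOWN_TOOLS` (with `vvencapp` assumed as the VVenC binary name), `verify_command`, and `generate_with_reflection` are all illustrative.

```python
import shlex
from typing import Callable, List, Optional, Tuple

MAX_ROUNDS = 3                         # hypothetical cap on self-reflection iterations
KNOWN_TOOLS = {"ffmpeg", "vvencapp"}   # assumed executable names


def verify_command(cmd: str) -> Optional[str]:
    """Return None if the command passes a cheap static check, else an error message."""
    try:
        tokens = shlex.split(cmd)
    except ValueError as exc:          # e.g. unbalanced quotes
        return f"parse error: {exc}"
    if not tokens:
        return "empty command"
    if tokens[0] not in KNOWN_TOOLS:
        return f"unknown tool '{tokens[0]}'"
    if tokens[0] == "ffmpeg" and "-i" not in tokens:
        return "ffmpeg command has no input (-i)"
    return None


def generate_with_reflection(query: str, context: str,
                             generate: Callable[[str], str]) -> Tuple[Optional[str], List[str]]:
    """Ask the model for a command, verify it, and feed any error back as a reflection prompt."""
    prompt = f"Context:\n{context}\n\nTask: {query}\nCommand:"
    history: List[str] = []
    for _ in range(MAX_ROUNDS):
        cmd = generate(prompt).strip()
        history.append(cmd)
        error = verify_command(cmd)
        if error is None:
            return cmd, history
        # Self-reflection: append the failed attempt and the verifier's feedback.
        prompt += f"\n{cmd}\nVerification failed: {error}. Revise the command:"
    return None, history
```

In a real edge deployment, `verify_command` would more likely execute the command on a short test clip and inspect the exit status, which is closer to the runtime-correctness check the abstract describes.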

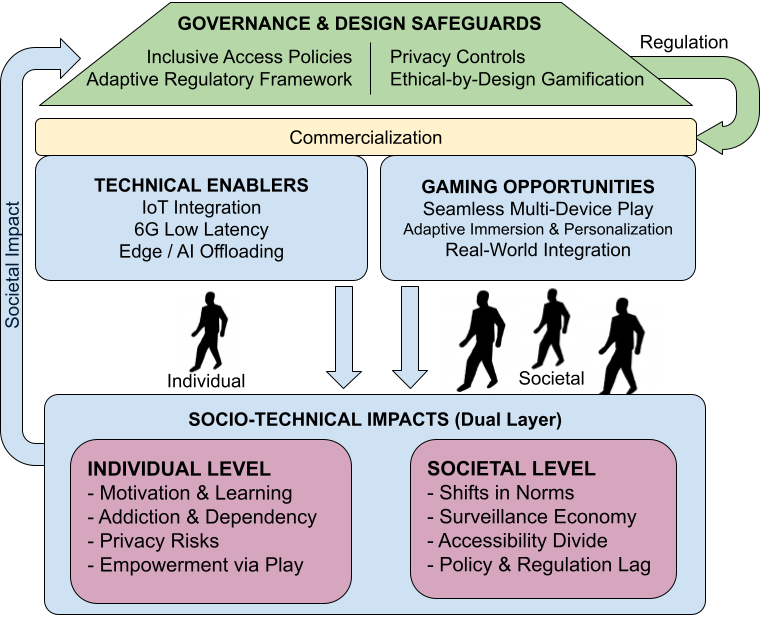

Title: From Latency to Engagement: Technical Synergies and Ethical Questions in IoT-Enabled Gaming

Authors: Kurt Horvath, Tom Tucek

Abstract: The convergence of video games with the Internet of Things (IoT), artificial intelligence (AI), and emerging 6G networks creates unprecedented opportunities and pressing challenges. On a technical level, IoT-enabled gaming requires ultra-low latency, reliable quality of service (QoS), and seamless multi-device integration supported by edge and cloud intelligence. On a societal level, gamification increasingly extends into education, health, and commerce, where points, badges, and immersive feedback loops can enhance engagement but also risk manipulation, privacy violations, and dependency. This position paper examines these dual dynamics by linking technical enablers, such as 6G connectivity, IoT integration, and edge/AI offloading, with ethical concerns surrounding behavioral influence, data usage, and accessibility. We propose a comparative perspective that highlights where innovation aligns with user needs and where safeguards are necessary. We identify open research challenges by combining technical and ethical analysis and emphasize the importance of regulatory and design frameworks to ensure responsible, inclusive, and sustainable IoT-enabled gaming.

Overview – 1st Workshop on Intelligent and Scalable Systems across the Computing Continuum

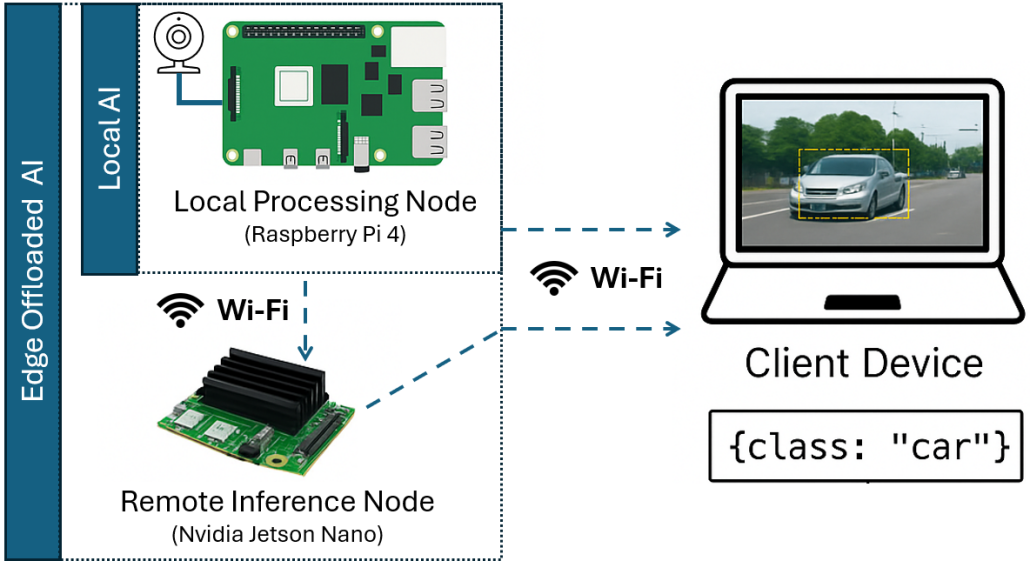

Title: Wi-Fi Enabled Edge Intelligence Framework for Smart City Traffic Monitoring using Low-Power IoT Cameras

Authors: Raphael Walcher, Kurt Horvath, Dragi Kimovski, Stojan Kitanov

Abstract: Real-time traffic monitoring in smart cities demands ultra-low-latency processing to support time-critical decisions such as incident detection and congestion management. While cloud-based solutions offer robust computation, their inherent latency limits their applicability to such tasks. This work proposes a localized edge AI framework that connects low-power IoT camera sensors to a client and can offload inference to an NVIDIA Jetson Nano GPU. Networking is achieved via Wi-Fi, enabling image classification without relying on wide-area infrastructure such as 5G or on wired networks. We evaluate two processing strategies: local inference on the camera nodes and GPU-accelerated offloading to the Jetson Nano. We show that local processing is feasible only for lightweight models and low frame rates, whereas offloading enables near-real-time performance even for more complex models. These results demonstrate the viability of cost-effective, Wi-Fi-based edge AI deployments for latency-critical urban monitoring.
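The trade-off between the two processing strategies can be reasoned about with a simple per-frame latency model: local processing costs the camera node's inference time, while offloading costs the Wi-Fi transfer time of a frame plus the Jetson's inference time. The sketch below is a back-of-the-envelope model with hypothetical names and illustrative numbers, not measurements from the paper; it ignores pipelining, queuing, and the time to return results.

```python
from dataclasses import dataclass


@dataclass
class Strategy:
    name: str
    latency_s: float  # time to process one frame end to end, in seconds

    @property
    def max_fps(self) -> float:
        """Highest frame rate sustainable if frames are processed one at a time."""
        return 1.0 / self.latency_s


def local_strategy(node_inference_s: float) -> Strategy:
    return Strategy("local", node_inference_s)


def offload_strategy(frame_bytes: int, wifi_mbps: float, gpu_inference_s: float) -> Strategy:
    transfer_s = frame_bytes * 8 / (wifi_mbps * 1e6)  # bytes -> bits, Mbit/s -> bit/s
    return Strategy("offload", transfer_s + gpu_inference_s)


def pick(local: Strategy, offload: Strategy, target_fps: float) -> str:
    """Prefer local processing if it meets the target frame rate, else offload if that does."""
    if local.max_fps >= target_fps:
        return local.name
    if offload.max_fps >= target_fps:
        return offload.name
    return "none"
```

For example, a 50 kB frame over a 20 Mbit/s link takes 0.02 s to transfer; with 0.03 s of GPU inference, offloading sustains roughly 20 frames/s, whereas a heavier model taking 0.5 s on the camera node manages only 2 frames/s locally.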

Overview – 1st Workshop on Intelligent and Scalable Systems across the Computing Continuum

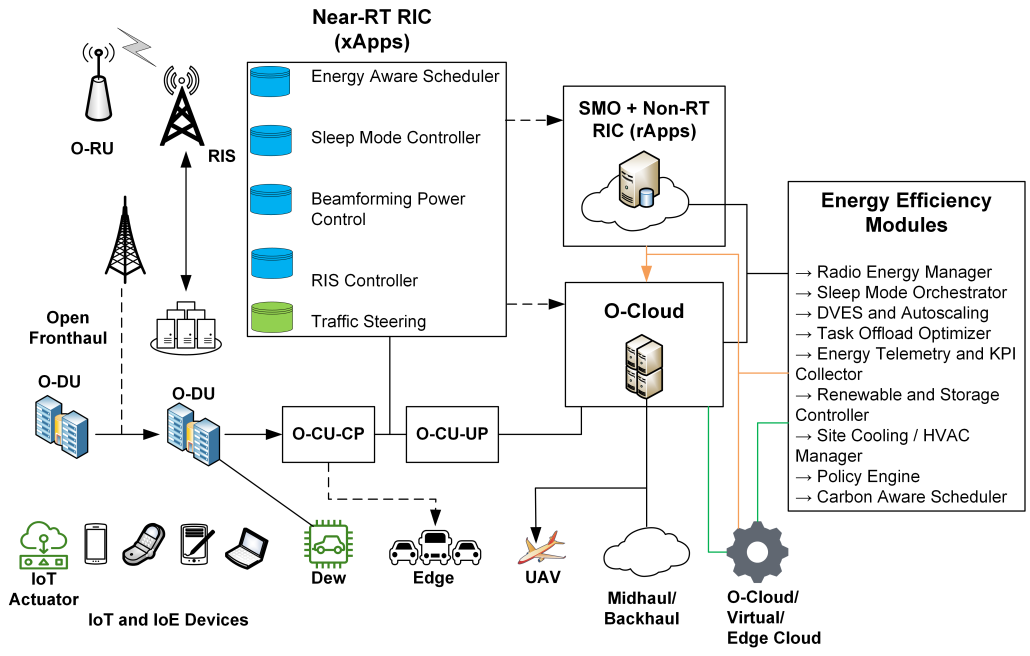

Title: 6G Network O-RAN Energy Efficiency Performance Evaluation

Authors: Ivan Petrov, Kurt Klaus Horvath, Stojan Kitanov, Dragi Kimovski, Fisnik Doko, Toni Janevski

Abstract: The Open Radio Access Network (O-RAN) paradigm introduces disaggregated and virtualized RAN elements connected through open interfaces, enabling AI-driven optimization across the RAN. By providing vendor-neutral components, it allows flexible energy management strategies that leverage the RAN Intelligent Controller (RIC) and computing continuum (Dew-Edge-Cloud) resources, making it a strong candidate for future networks. At the same time, the transition to 6G requires a more open, adaptable, and intelligent RAN architecture, and Open RAN is envisioned as a key enabler for 6G, offering agility, cost efficiency, energy savings, and resilience. This paper assesses O-RAN’s performance in 6G mobile networks in terms of energy efficiency.
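Energy efficiency in mobile networks is commonly expressed as delivered data per unit of energy, that is, throughput divided by power consumption, in bit/J. The abstract does not state which metric the paper uses, so the sketch below simply assumes this common definition and shows how it could be computed for a set of disaggregated O-RAN components serving the same traffic. The function names and figures are illustrative, not results from the paper.

```python
from typing import Dict


def energy_efficiency_bits_per_joule(throughput_bps: float, power_w: float) -> float:
    """EE = throughput / power; (bit/s) / (J/s) yields bit/J."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return throughput_bps / power_w


def network_ee(throughput_bps: float, component_power_w: Dict[str, float]) -> float:
    """Aggregate EE when several disaggregated components (e.g. O-RU, O-DU, O-CU)
    jointly serve the same traffic, so their power draws add up."""
    return energy_efficiency_bits_per_joule(throughput_bps, sum(component_power_w.values()))
```

Under this definition, an energy-saving strategy (for instance, putting an idle component to sleep) improves efficiency only if the power it saves outweighs any throughput it costs.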

Paper Title: STEP-MR: A Subjective Testing and Eye-Tracking Platform for Dynamic Point Clouds in Mixed Reality

Conference Details: 32nd International Conference on Multimedia Modeling; Jan 29–31, 2026; Prague, Czech Republic

Authors: Shivi Vats (AAU, Austria), Christian Timmerer (AAU, Austria), Hermann Hellwagner (AAU, Austria)

Abstract:

The use of point cloud (PC) streaming in mixed reality (MR) environments is of particular interest due to the immersiveness and the six degrees of freedom (6DoF) provided by the 3D content. However, this immersiveness requires significant bandwidth. Solutions have been developed to address this challenge, such as PC compression and/or spatial tiling of the PC to stream different portions at different quality levels. This paper presents a brief overview of STEP-MR, a Subjective Testing and Eye-tracking Platform for dynamic point clouds in Mixed Reality on the Microsoft HoloLens 2. STEP-MR was used to conduct subjective tests (described in [1]) with 41 participants, yielding over 2000 responses and more than 150 visual attention maps, the results of which can be used, among other things, to improve the dynamic (animated) point cloud streaming solutions mentioned above. Building on our previous platform, the new version enables eye-tracking tests, including calibration and heatmap generation. Additionally, STEP-MR modifies the subjective test functionality, adding a new rating scale and adaptability to participant movement during the tests, along with other user experience changes.
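Heatmap (visual attention map) generation from eye-tracking data is commonly done by accumulating gaze samples and smoothing them with a Gaussian kernel. The sketch below illustrates that general technique on a 2D grid, assuming gaze samples have already been projected into grid coordinates; STEP-MR works with dynamic point clouds on the HoloLens 2, and its actual pipeline is not described here. `attention_heatmap` and its `sigma` parameter (in grid cells) are hypothetical.

```python
import math
from typing import List, Tuple


def attention_heatmap(gaze: List[Tuple[float, float]], width: int, height: int,
                      sigma: float = 1.0) -> List[List[float]]:
    """Sum an isotropic Gaussian around each gaze sample, then normalize the peak to 1."""
    heat = [[0.0] * width for _ in range(height)]
    for gx, gy in gaze:
        for y in range(height):
            for x in range(width):
                d2 = (x - gx) ** 2 + (y - gy) ** 2
                heat[y][x] += math.exp(-d2 / (2 * sigma ** 2))
    peak = max(max(row) for row in heat)
    if peak > 0:  # leave an all-zero map unchanged when there are no samples
        heat = [[v / peak for v in row] for row in heat]
    return heat
```

Regions that attract repeated fixations accumulate the most weight, so the normalized map highlights where participants looked most often; `sigma` trades spatial precision for robustness to eye-tracker noise.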