Multimedia Communication


Title: Fast multi-rate encoding for adaptive HTTP streaming

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), Ekrem Çetinkaya (Alpen-Adria-Universität Klagenfurt, Austria), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: According to embodiments of the disclosure, information from higher- and lower-quality encoded video segments is used to limit Rate-Distortion Optimization (RDO) for each Coding Tree Unit (CTU). The method first encodes the highest-bitrate segment and subsequently uses it to encode the lowest-bitrate segment. The block structure and selected reference frames of both the highest- and lowest-bitrate segments are then used to predict and shorten the RDO process for each CTU at the middle bitrates. With parallel processing, the method introduces a delay of only one frame. This approach reduces time complexity compared to the reference software for the middle bitrates, with negligible degradation.
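
A minimal sketch of the bounding idea, not the authors' encoder: it assumes that CTU split depth (0 to 3 in HEVC) tends not to decrease as bitrate increases, so the depth chosen at the lowest bitrate acts as a lower bound and the depth chosen at the highest bitrate as an upper bound for a middle bitrate. It also simplifies each CTU to a single depth decision, which real quadtree RDO does not do, and the RD cost callback is a hypothetical stand-in for the encoder's evaluation.

```python
from typing import Callable, List

MAX_DEPTH = 3  # HEVC: a 64x64 CTU can be split down to 8x8 CUs (depths 0..3)

def candidate_depths(depth_low: int, depth_high: int) -> List[int]:
    """Depths to evaluate for a middle-bitrate CTU.

    Assumption: the lowest-bitrate encoding splits no deeper, and the
    highest-bitrate encoding at least as deep, as a middle bitrate would,
    so the search is restricted to the closed interval between them.
    """
    lo, hi = sorted((depth_low, depth_high))  # tolerate inverted bounds
    return list(range(max(lo, 0), min(hi, MAX_DEPTH) + 1))

def choose_depth(depth_low: int, depth_high: int,
                 rd_cost: Callable[[int], float]) -> int:
    """Evaluate the (hypothetical) RD cost only on the bounded depths."""
    return min(candidate_depths(depth_low, depth_high), key=rd_cost)
```

For example, `choose_depth(1, 3, cost)` evaluates three depths instead of four, and when both reference encodings agree on a depth the search collapses to a single candidate, which is where most of the time saving would come from.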

Samira Afzal (Alpen-Adria-Universität (AAU) Klagenfurt, Austria), Radu Prodan (Alpen-Adria-Universität (AAU) Klagenfurt, Austria), Christian Timmerer (Alpen-Adria-Universität (AAU) Klagenfurt and Bitmovin Inc., Austria)

Introduction:

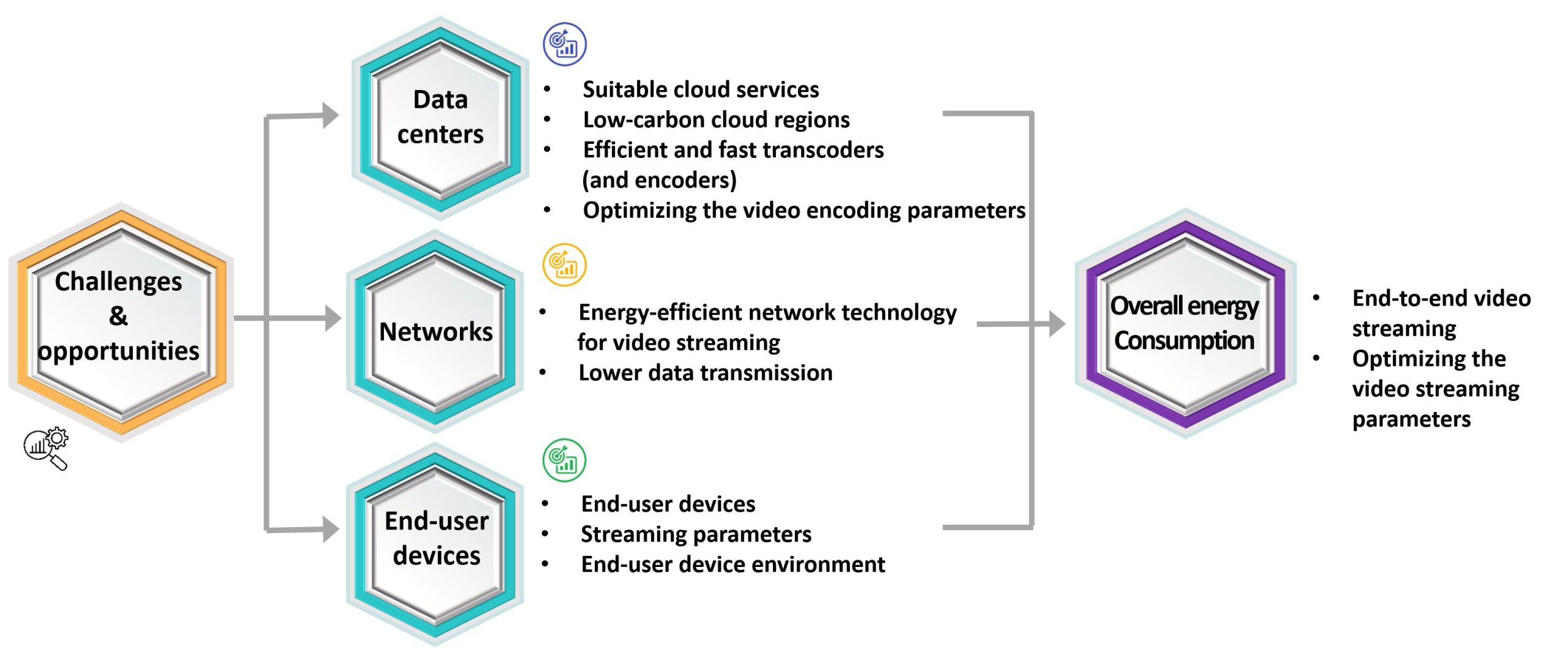

Following the Intergovernmental Panel on Climate Change (IPCC) report in 2021 and Sustainable Development Goal (SDG) 13 “climate action”, urgent action is needed against climate change and global greenhouse gas (GHG) emissions in the next few years [1]. This urgency also applies to the energy consumption of digital technologies. Internet data traffic is responsible for more than half of digital technology’s global impact, accounting for 55% of its annual energy consumption. The Shift Project forecast [2] projects a 25% annual increase in data traffic, associated with 9% more energy consumption per year, with digital technologies reaching 8% of all GHG emissions in 2025.

Video flows represented 80% of global data flows in 2018, and this video data volume is increasing by 80% annually [2]. This exponential increase in the use of streaming video is due to (i) improvements in Internet connections and service offerings [3], (ii) the rapid development of video entertainment (e.g., video games and cloud gaming services), (iii) the deployment of Ultra High-Definition (UHD, 4K, 8K), Virtual Reality (VR), and Augmented Reality (AR), and (iv) an increasing number of video surveillance and IoT applications [4]. Interestingly, video processing and streaming generate 306 million tons of CO2, which is 20% of digital technology’s total GHG emissions and nearly 1% of worldwide GHG emissions [2].

While research has shown that the carbon footprint of video streaming has been decreasing in recent years [5], there is still a strong need to invest in research and development of efficient next-generation computing and communication technologies for video processing. This carbon footprint reduction is due to efficiency trends in cloud computing (e.g., renewable power), emerging modern mobile networks (e.g., growth in Internet speed), and end-user devices (e.g., users prefer less energy-intensive mobile phones and tablets over larger PCs and laptops). However, the dramatically growing demand for video streaming raises the risk of increased energy consumption.

Investigating energy efficiency during video streaming is essential to developing sustainable video technologies. The processes from video encoding to decoding and displaying the video on the end user’s screen require electricity, which results in CO2 emissions. Consequently, the key question becomes: “How can we improve energy efficiency for video streaming systems while maintaining an acceptable Quality of Experience (QoE)?”.

IEEE International Conference on Communications (ICC)

28 May – 01 June 2023 – Rome, Italy

Reza Farahani (Alpen-Adria-Universität Klagenfurt), Abdelhak Bentaleb (Concordia University, Canada), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), Mohammad Shojafar (University of Surrey, UK), Radu Prodan (Alpen-Adria-Universität Klagenfurt), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt)

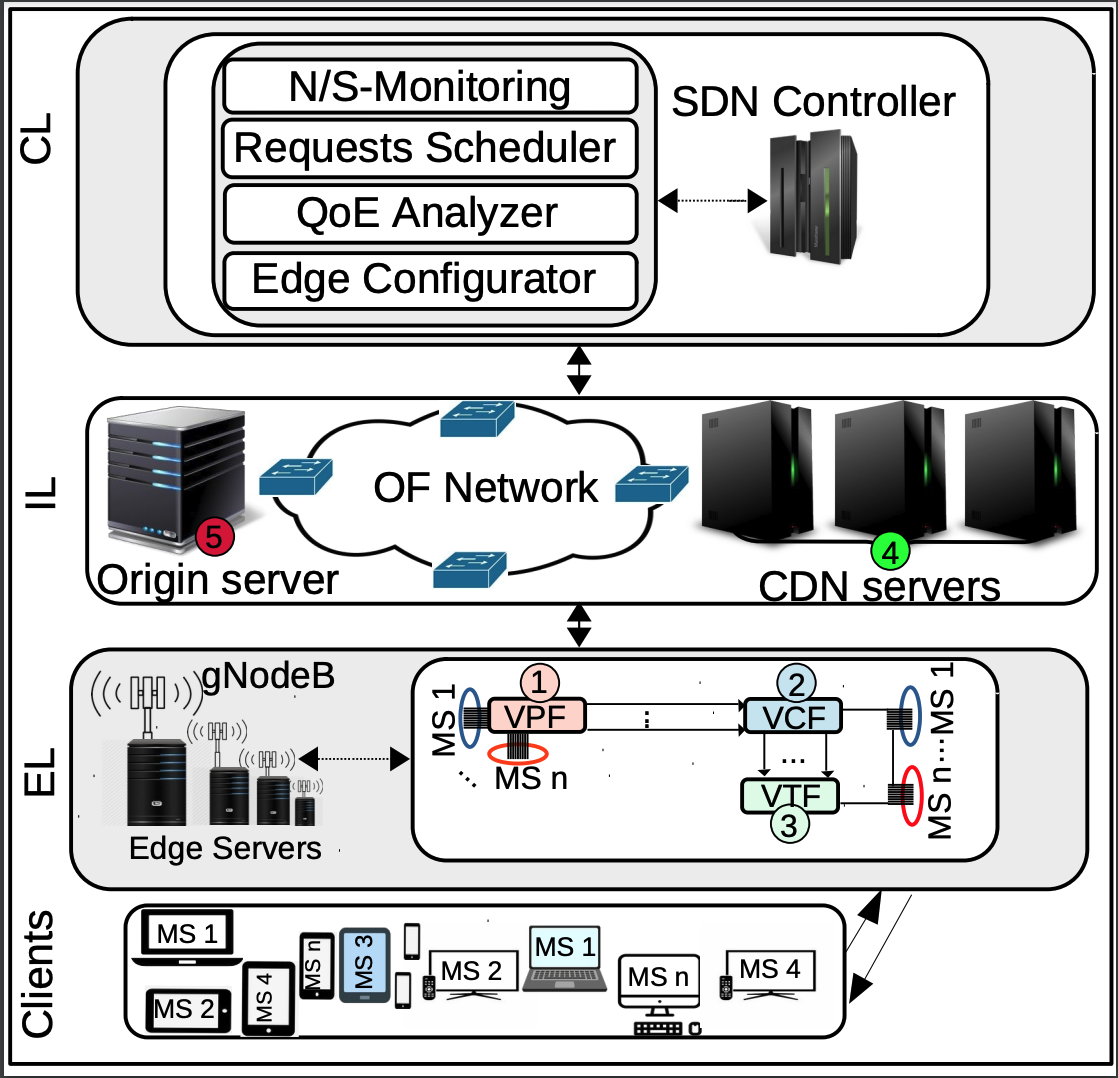

Abstract: 5G and 6G networks are expected to support various novel emerging adaptive video streaming services (e.g., live, VoD, immersive media, and online gaming) with versatile Quality of Experience (QoE) requirements such as high bitrate, low latency, and sufficient reliability. It is widely agreed that these requirements can be satisfied by adopting emerging networking paradigms like Software-Defined Networking (SDN), Network Function Virtualization (NFV), and edge computing. Previous studies have leveraged these paradigms to present network-assisted video streaming frameworks, but mostly in isolation without devising chains of Virtualized Network Functions (VNFs) that consider the QoE requirements of various types of Multimedia Services (MS).

To bridge the aforementioned gaps, we first introduce a set of multimedia VNFs at the edge of an SDN-enabled network and form diverse Service Function Chains (SFCs) based on the QoE requirements of different MS types. We then propose SARENA, an SFC-enabled ArchitectuRe for adaptive VidEo StreamiNg Applications. Next, we formulate the problem as a central scheduling optimization model executed at the SDN controller. We also present a lightweight two-phase heuristic, running on the SDN controller and the edge servers, that alleviates the time complexity of the optimization model in large-scale scenarios. Finally, we design a large-scale cloud-based testbed including 250 HTTP Adaptive Streaming (HAS) players requesting two popular MS applications (i.e., live and VoD), conduct various experiments, and compare SARENA's effectiveness with baseline systems. Experimental results show that SARENA outperforms baseline schemes by at least 39.6% in users’ QoE, 29.3% in latency, and 30% in network utilization in both MS services.
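
To make the notion of QoE-driven service function chains concrete, the sketch below maps each multimedia service type to an ordered chain of VNFs and then greedily places each request on the lowest-latency edge server that still has capacity. The VNF names, the capacity model, and the greedy rule are illustrative assumptions; they are not SARENA's actual chains, optimization model, or two-phase heuristic.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical chains: the VNFs each service type traverses, in order.
SFC_BY_SERVICE: Dict[str, List[str]] = {
    "live": ["firewall", "transcoder", "packager"],
    "vod": ["firewall", "cache", "packager"],
}

@dataclass
class EdgeServer:
    name: str
    latency_ms: float  # estimated latency to the requesting client
    capacity: int      # number of chain instances it can still host
    hosted: List[str] = field(default_factory=list)

def place_request(service: str, servers: List[EdgeServer]) -> Optional[str]:
    """Greedy placement: the lowest-latency server with spare capacity wins."""
    chain = SFC_BY_SERVICE[service]
    for server in sorted(servers, key=lambda s: s.latency_ms):
        if server.capacity > 0:
            server.capacity -= 1
            server.hosted.append("->".join(chain))
            return server.name
    return None  # no edge capacity left; fall back to the origin/cloud
```

A greedy rule like this runs in near-linear time per request but can miss the globally optimal assignment, which is the same trade-off that motivates pairing an exact optimization model with a lightweight heuristic for large-scale scenarios.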

Index Terms: HAS; DASH; NFV; SFC; SDN; Edge Computing.

Journal Website: Journal of Network and Computer Applications


Samira Afzal (Alpen-Adria-Universität Klagenfurt), Vanessa Testoni (unico IDtech), Christian Esteve Rothenberg (University of Campinas), Prakash Kolan (Samsung Research America), and Imed Bouazizi (Qualcomm)

Abstract:

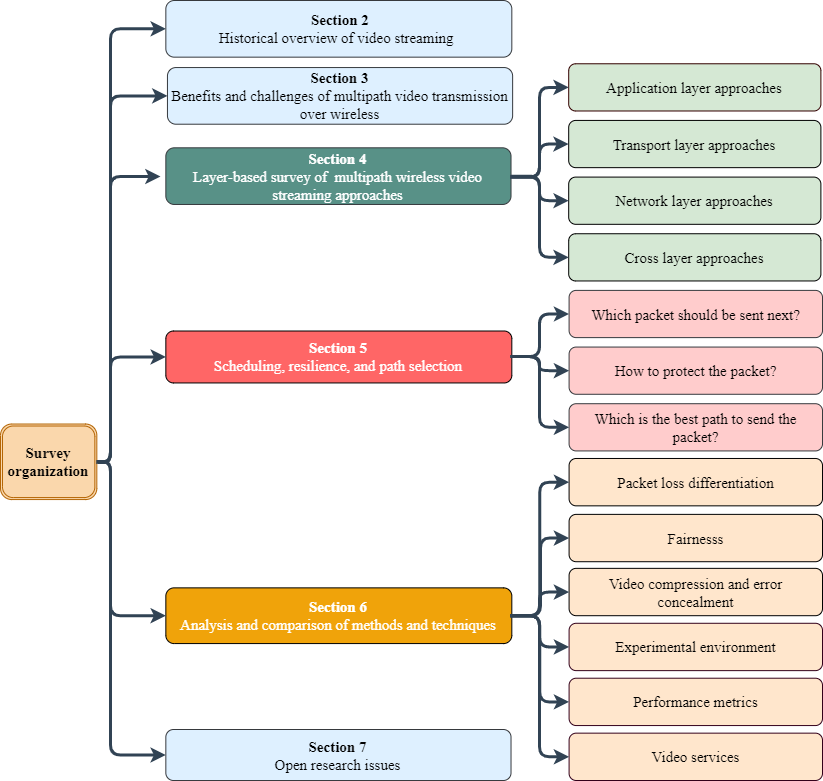

Demand for wireless video streaming services is increasing, with users expecting high-quality video streaming experiences. Ensuring Quality of Experience (QoE) is challenging due to varying bandwidth and time constraints. Since most of today’s mobile devices are equipped with multiple network interfaces, one promising approach is to benefit from multipath communications. Multipathing provides higher aggregate bandwidth, and distributing video traffic over multiple network paths improves stability, seamless connectivity, and QoE. However, most current transport protocols do not match the requirements of video streaming applications or are not designed to address relevant issues, such as network heterogeneity, head-of-line blocking, and delay constraints. In this comprehensive survey, we first review video streaming standards and technology developments. We then discuss the benefits and challenges of multipath video transmission over wireless. We provide a holistic literature review of multipath wireless video streaming, shedding light on the different alternatives from an end-to-end layered stack perspective, reviewing key multipath wireless scheduling functions, unveiling the trade-offs of each approach, and presenting a suitable taxonomy to classify the state of the art. Finally, we discuss open issues and avenues for future work.
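
As an illustration of what a multipath scheduling function decides, the sketch below sends each video segment over the path expected to deliver it first. It is a generic earliest-completion-time rule, not an algorithm taken from the survey, and it assumes each path is described by a constant estimated throughput and one-way delay, which real wireless paths do not guarantee.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Path:
    name: str
    throughput_bps: float      # estimated throughput (assumed constant)
    delay_s: float             # estimated one-way delay
    busy_until_s: float = 0.0  # time at which this path's send queue drains

def schedule(segment_bits: List[int], paths: List[Path]) -> List[str]:
    """Assign each segment to the path that would finish delivering it earliest."""
    assignment = []
    for bits in segment_bits:
        def finish(p: Path) -> float:
            return p.busy_until_s + bits / p.throughput_bps + p.delay_s
        best = min(paths, key=finish)
        # Only transmission time occupies the sender's queue; propagation
        # delay does not block the next segment on the same path.
        best.busy_until_s += bits / best.throughput_bps
        assignment.append(best.name)
    return assignment
```

With a 10 Mbit/s Wi-Fi path and a 5 Mbit/s LTE path, three 1 Mbit segments go Wi-Fi, Wi-Fi, LTE: the slower path only receives a segment once queuing on the faster path would make it arrive later.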

Collaborative Edge-Assisted Systems for HTTP Adaptive Video Streaming

5G/6G Innovation Center, University of Surrey, UK

6th January 2023 | Guildford, UK

Abstract: The proliferation of novel video streaming technologies, advancement of networking paradigms, and steadily increasing numbers of users who prefer to watch video content over the Internet rather than using classical TV have made video the predominant traffic on the Internet. However, designing cost-effective, scalable, and flexible architectures that support low-latency and high-quality video streaming is still a challenge for both over-the-top (OTT) and ISP companies. In this talk, we first introduce the principles of video streaming and the existing challenges. We then review several 5G/6G networking paradigms and explain how we can leverage networking technologies to form collaborative network-assisted video streaming systems for improving users’ quality of experience (QoE) and network utilization.

Reza Farahani is a final-year Ph.D. candidate at the University of Klagenfurt, Austria, and a visiting Ph.D. researcher at the University of Surrey, UK. He received his B.Sc. in 2014 and M.Sc. in 2019 from the University of Isfahan, Iran, and the University of Tehran, Iran, respectively. He is currently working on the ATHENA project in cooperation with its industry partner Bitmovin. His research focuses on designing modern network-assisted video streaming solutions (via SDN, NFV, MEC, SFC, and P2P paradigms), multimedia communication, computing continuum challenges, and parallel and distributed systems. He has also worked in different roles in the computer networks field, e.g., network administrator, ISP customer support engineer, Cisco network engineer, network protocol designer, network programmer, and Cisco instructor (R&S, SP).

IEEE Transactions on Network and Service Management (TNSM)

Alireza Erfanian (Alpen-Adria-Universität Klagenfurt, Austria), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), Farzad Tashtarian (Alpen-Adria-Universität Klagenfurt, Austria), Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: The edge computing paradigm brings cloud capabilities close to the clients. Leveraging the edge’s capabilities can improve video streaming services by employing the storage capacity and processing power at the edge for caching and transcoding tasks, respectively, resulting in video streaming services with higher quality and lower latency. In this paper, we propose CD-LwTE, a Cost- and Delay-aware Light-weight Transcoding approach at the Edge, in the context of HTTP Adaptive Streaming (HAS). The encoding of a video segment requires computationally intensive search processes. The main idea of CD-LwTE is to store the optimal search results as metadata for each bitrate of video segments and reuse them at the edge servers to reduce the time and computational resources required for transcoding. Aiming to minimize the cost and delay of Video-on-Demand (VoD) services, we formulate the problem of selecting an optimal policy for serving segment requests at the edge server, including (i) storing at the edge server, (ii) transcoding from a higher bitrate at the edge server, and (iii) fetching from the origin or a CDN server, as a Binary Linear Programming (BLP) model. As a result, CD-LwTE stores the popular video segments at the edge and serves the unpopular ones by transcoding using metadata or fetching from the origin/CDN server. In this way, in addition to the significant reduction in bandwidth and storage costs, the transcoding time of a requested segment is remarkably decreased by utilizing its corresponding metadata. Moreover, we prove that the problem formulated by the BLP model is NP-hard and propose two heuristic algorithms to mitigate the time complexity of CD-LwTE. We investigate the performance of CD-LwTE in comprehensive scenarios with various video contents, encoding software, encoding settings, and available resources at the edge. The experimental results show that our approach (i) reduces the transcoding time by up to 97%, (ii) decreases the streaming cost, including storage, computation, and bandwidth costs, by up to 75%, and (iii) reduces delay by up to 48% compared to state-of-the-art approaches.
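
The three serving options can be compared per segment with a simple weighted cost model. The sketch below is not the paper's BLP model or either of its heuristics: the unit costs, delays, and the weight `alpha` trading money against delay are hypothetical, a single edge storage budget is filled greedily by the segments that save the most by being stored, and it ignores that transcoding needs a higher-bitrate version (plus metadata) already available at the edge.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Segment:
    seg_id: str
    size_mb: float
    requests: int  # expected requests in the planning window (popularity)

# Hypothetical unit costs and delays; real values depend on the deployment.
STORAGE_COST_PER_MB = 0.02    # paid once per stored segment
BANDWIDTH_COST_PER_MB = 0.01  # paid per request when fetching from origin/CDN
TRANSCODE_COST = 0.05         # paid per request when transcoding at the edge
DELAY_S = {"store": 0.02, "transcode": 0.3, "fetch": 0.5}

def option_cost(seg: Segment, option: str, alpha: float) -> float:
    """Monetary cost plus alpha times the total delay over all requests."""
    if option == "store":
        money = STORAGE_COST_PER_MB * seg.size_mb
    elif option == "transcode":
        money = TRANSCODE_COST * seg.requests
    else:  # "fetch"
        money = BANDWIDTH_COST_PER_MB * seg.size_mb * seg.requests
    return money + alpha * DELAY_S[option] * seg.requests

def assign_policies(segments: List[Segment], storage_mb: float,
                    alpha: float = 1.0) -> Dict[str, str]:
    """Greedy: store the segments with the largest saving while storage lasts;
    every other segment gets the cheaper of transcode or fetch."""
    def best_non_store(seg: Segment) -> str:
        return min(("transcode", "fetch"), key=lambda o: option_cost(seg, o, alpha))

    def saving(seg: Segment) -> float:
        return (option_cost(seg, best_non_store(seg), alpha)
                - option_cost(seg, "store", alpha))

    policy: Dict[str, str] = {}
    for seg in sorted(segments, key=saving, reverse=True):
        if saving(seg) > 0 and seg.size_mb <= storage_mb:
            policy[seg.seg_id] = "store"
            storage_mb -= seg.size_mb
        else:
            policy[seg.seg_id] = best_non_store(seg)
    return policy
```

With room for one 10 MB segment, a popular segment (100 requests) is stored while an unpopular one (1 request) is transcoded, mirroring the popular/unpopular split described in the abstract. Because the exact problem is NP-hard, fast approximate rules of this kind are the usual way to scale.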

ICME'23, July 2023, Brisbane, Australia

Organizers:

- Hadi Amirpour, University of Klagenfurt
- Angeliki Katsenou, Trinity College Dublin, IE and University of Bristol, UK

Abstracts

Video streaming in the context of HTTP Adaptive Streaming (HAS) is replacing legacy media platforms, and its market share is growing rapidly due to its simplicity, reliability, and standard support (e.g., MPEG-DASH). This results in an increasing amount of video content; nowadays, video accounts for the vast majority of Internet traffic, either as user-generated content (UGC) or as pristine cinematic content. For HAS, the video is usually encoded in multiple versions (i.e., representations) with different resolutions, bitrates, codecs, etc., and each representation is divided into chunks (i.e., segments) of equal length (e.g., 2–10 seconds) to enable dynamic, adaptive switching during streaming based on the user’s context conditions (e.g., network conditions, device characteristics, user preferences).
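
To illustrate how equal-length segments enable adaptive switching, the sketch below picks, before each segment download, the highest-bitrate representation that fits within a safety fraction of the last measured throughput. The ladder values and the 0.8 safety factor are arbitrary assumptions, and this purely throughput-based rule is only one simple strategy; production players typically also take buffer level into account.

```python
from typing import List

# Hypothetical bitrate ladder in kbps, one entry per representation.
LADDER_KBPS = [235, 750, 1750, 3000, 5800]

def select_representation(throughput_kbps: float,
                          ladder: List[int] = LADDER_KBPS,
                          safety: float = 0.8) -> int:
    """Highest bitrate not exceeding safety * throughput, else the lowest rung."""
    budget = safety * throughput_kbps
    fitting = [b for b in sorted(ladder) if b <= budget]
    return fitting[-1] if fitting else min(ladder)

# Throughput measured while downloading each previous segment.
measured = [4000, 2500, 600, 8000]
print([select_representation(t) for t in measured])
# → [3000, 1750, 235, 5800]
```

Because every representation is cut at the same segment boundaries, the player can switch rungs between any two segments without re-synchronizing the stream.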

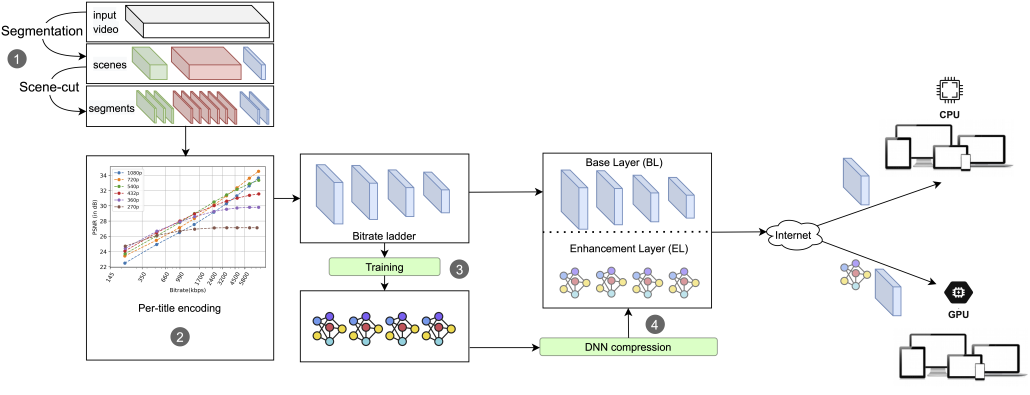

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), Mohammad Ghanbari (University of Essex, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: In HTTP Adaptive Streaming (HAS), each video is divided into smaller segments, and each segment is encoded at multiple pre-defined bitrates to construct a bitrate ladder. To optimize bitrate ladders, per-title encoding approaches encode each segment at various bitrates and resolutions to determine the convex hull. From the convex hull, an optimized bitrate ladder is constructed, resulting in an increased Quality of Experience (QoE) for end-users. As deep learning-based video enhancement approaches become ever more efficient, they are increasingly employed on the client side to increase QoE, specifically when GPU capabilities are available. Therefore, scalable approaches are needed to support end-user devices with both CPU and GPU capabilities (denoted as CPU-only and GPU-available end-users, respectively) as a new dimension of a bitrate ladder.
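
The convex-hull step of per-title encoding can be sketched as follows: pool the (bitrate, quality) points measured for all resolutions, keep the best point per bitrate, take the upper convex hull with Andrew's monotone chain, and drop any tail where extra bits no longer raise quality. The sample points are made up, the quality score stands in for a metric such as VMAF or PSNR, and the CPU-only/GPU-available dimension is not modelled.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float, str]  # (bitrate_kbps, quality, resolution)

def cross(o: Point, a: Point, b: Point) -> float:
    """Z-component of (a - o) x (b - o) in the bitrate/quality plane."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def upper_convex_hull(points: List[Point]) -> List[Point]:
    """Rate-quality convex hull across resolutions (upper monotone chain)."""
    best_at_rate: Dict[float, Point] = {}
    for p in points:  # keep only the best-quality encode at each bitrate
        if p[0] not in best_at_rate or p[1] > best_at_rate[p[0]][1]:
            best_at_rate[p[0]] = p
    hull: List[Point] = []
    for p in sorted(best_at_rate.values()):  # increasing bitrate
        # Pop while the last turn is not strictly clockwise (not concave).
        while len(hull) >= 2 and cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    if not hull:
        return hull
    # Beyond the best-quality point, more bits do not help.
    top = max(range(len(hull)), key=lambda i: hull[i][1])
    return hull[: top + 1]

samples = [(500, 60, "540p"), (1500, 75, "540p"), (3000, 80, "540p"),
           (1500, 70, "1080p"), (3000, 88, "1080p"), (6000, 95, "1080p")]
print(upper_convex_hull(samples))
# → [(500, 60, '540p'), (1500, 75, '540p'), (3000, 88, '1080p'), (6000, 95, '1080p')]
```

In this made-up example the hull switches from 540p to 1080p between 1500 and 3000 kbps, which is exactly the kind of content-dependent crossover a fixed ladder would miss; ladder rungs would then be picked from the hull points.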