The 15th ACM Multimedia Systems Conference (Technical Demos)

15-18 April, 2024 in Bari, Italy

Authors: Samuel Radler* (AAU, Austria), Leon Prüller* (AAU, Austria), Emanuele Artioli (AAU, Austria), Farzad Tashtarian (AAU, Austria), and Christian Timmerer (AAU, Austria)

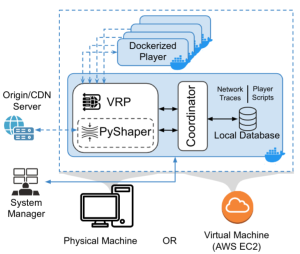

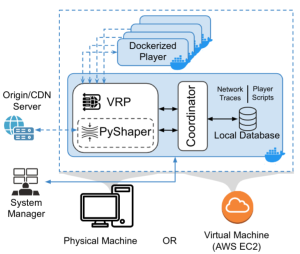

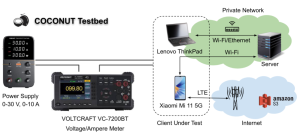

As streaming services become more commonplace, analyzing their behavior effectively under different network conditions is crucial. Such analysis is normally expensive, requiring multiple players with different bandwidth configurations to be emulated on a powerful local machine or in a cloud environment. Furthermore, emulating realistic network behavior, or guaranteeing adherence to a real network trace, is challenging. This paper presents PyStream, a simple yet powerful way to emulate a video streaming network that allows multiple simultaneous tests to run locally. By leveraging a network of Docker containers, PyStream abstracts away many of the implementation challenges, keeping the resulting system easy to manage and upgrade. We demonstrate that PyStream not only reduces the requirements for testing a video streaming system but also improves emulation accuracy relative to the current state of the art. On average, PyStream reduces the error between the original network trace and the bandwidth experienced by the emulated video players by a factor of 2-3 compared to Wondershaper, a network traffic shaper used in many video streaming evaluation environments. Moreover, PyStream lowers the cost of running experiments compared to existing cloud-based video streaming evaluation environments such as CAdViSE.
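To make the "factor of 2-3" comparison concrete, the sketch below shows one way such an error-reduction factor could be computed. It is a minimal illustration under stated assumptions: the abstract does not name the error metric, so mean absolute error over time-aligned samples is assumed here, and the helper names `mean_abs_error` and `error_reduction_factor` are hypothetical. The numbers are made up for illustration and are not measurements from the paper.

```python
from statistics import mean


def mean_abs_error(trace_mbps, emulated_mbps):
    """Mean absolute error between a reference bandwidth trace and the
    bandwidth observed at a player, sampled at the same instants.
    (Assumed metric; the abstract does not specify one.)"""
    if len(trace_mbps) != len(emulated_mbps):
        raise ValueError("trace and measurement must be aligned sample-by-sample")
    return mean(abs(t - e) for t, e in zip(trace_mbps, emulated_mbps))


def error_reduction_factor(trace, baseline, candidate):
    """How many times smaller the candidate's error is than the baseline's
    (hypothetical helper for illustration)."""
    return mean_abs_error(trace, baseline) / mean_abs_error(trace, candidate)


# Illustrative values only, in Mbit/s; not results from the paper.
trace = [4.0, 2.0, 6.0, 3.0]
baseline = [5.0, 3.5, 4.5, 4.0]   # hypothetical output of a traffic shaper
candidate = [4.5, 2.5, 5.5, 3.5]  # hypothetical output of an emulator

print(error_reduction_factor(trace, baseline, candidate))  # → 2.5
```

With these toy values the baseline's mean absolute error is 1.25 Mbit/s and the candidate's is 0.5 Mbit/s, giving a reduction factor of 2.5; a different metric (for example RMSE or relative error) would generally yield a different factor.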