The 13th ACM Multimedia Systems Conference (ACM MMSys 2022)

June 14–17, 2022 | Athlone, Ireland

Reza Shokri Kalan (Digiturk Company, Istanbul), Reza Farahani (Alpen-Adria-Universität Klagenfurt), Emre Karsli (Digiturk Company, Istanbul), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt)

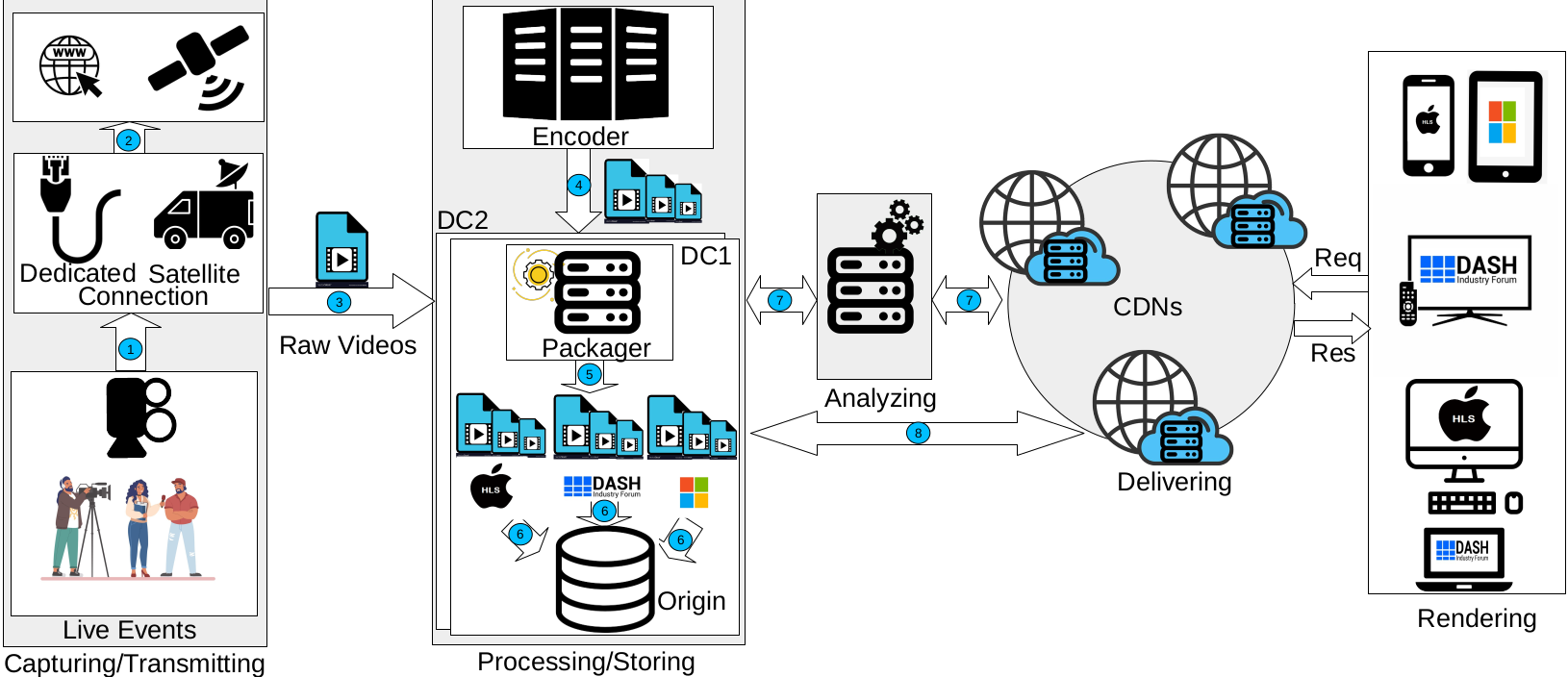

Over-the-Top (OTT) service providers need faster, cheaper, and Digital Rights Management (DRM)-capable video streaming solutions. Recently, HTTP Adaptive Streaming (HAS) has become the dominant video delivery technology over the Internet. In HAS, videos are split into short intervals called segments, and each segment is encoded at various qualities/bitrates (i.e., representations) to adapt to the available bandwidth. Using different HAS-based technologies with various segment formats imposes extra cost, complexity, and latency on the video delivery system. Adopting a unified format for transmitting and storing segments at Content Delivery Network (CDN) servers can alleviate these issues. To this end, the MPEG Common Media Application Format (CMAF) has been introduced as a standard format for cost-effective, low-latency streaming. However, CMAF has not yet been adopted by video streaming providers, and it is incompatible with most legacy end-user players. This paper presents practical steps for achieving low-latency live video streaming that can be implemented for non-DRM-sensitive content before moving to CMAF. We first design and instantiate our testbed in a real OTT provider environment, including a heterogeneous network and clients, and then investigate the impact of changing the format, segment duration, and Digital Video Recording (DVR) window length on a real live event. The results show that replacing the transport stream (.ts) format with fragmented MP4 (.fMP4) and shortening the segment duration significantly reduce live latency.

Keywords: HAS, DASH, HLS, CMAF, Live Streaming, Low Latency
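
To make the HAS mechanics in the abstract more concrete, the following is a minimal Python sketch, not the authors' implementation. It illustrates two ideas: choosing a representation that fits the available bandwidth, and why shorter segments tend to lower live latency. The bitrate ladder, the `safety` factor, the number of buffered segments, and the function names are all illustrative assumptions; the latency formula is a rough rule of thumb (buffered segments times segment duration), not a result from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Representation:
    """One encoded quality level of a segment (hypothetical ladder entry)."""
    name: str
    bitrate_kbps: int


def pick_representation(ladder, bandwidth_kbps, safety=0.8):
    """Return the highest-bitrate representation that fits within a safety
    fraction of the measured bandwidth; fall back to the lowest one."""
    budget = bandwidth_kbps * safety
    fitting = [r for r in ladder if r.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    return min(ladder, key=lambda r: r.bitrate_kbps)


def approx_live_latency_s(segment_duration_s, buffered_segments,
                          pipeline_delay_s=0.0):
    """Rough estimate: a player that buffers N segments before starting
    playback sits about N * segment_duration behind the live edge, plus
    any encoding/packaging/CDN delay (assumed constant here)."""
    return segment_duration_s * buffered_segments + pipeline_delay_s


# Assumed bitrate ladder, for illustration only.
LADDER = [
    Representation("240p", 400),
    Representation("480p", 1200),
    Representation("720p", 2500),
    Representation("1080p", 5000),
]

if __name__ == "__main__":
    print(pick_representation(LADDER, bandwidth_kbps=4000).name)
    # Same buffer depth (3 segments), different segment durations.
    for duration in (6, 2):
        print(duration, approx_live_latency_s(duration, buffered_segments=3))
```

Under these assumptions, keeping the buffer depth fixed and shortening the segment duration shrinks the estimated distance from the live edge proportionally. This matches the direction of the effect the abstract reports, though actual figures depend on the player, packager, and CDN.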

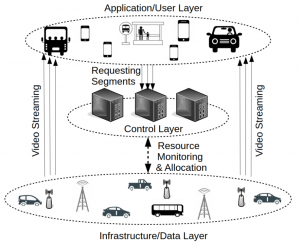

We design QoCoVi in two SDN-based operational modes: (i) centralized and (ii) distributed. In centralized mode, we can obtain a suitable solution by introducing a mixed-integer linear programming (MILP) optimization model that can be executed on the SDN controller. However, to cope with the computational overhead of the centralized approach in real IoV scenarios, we propose a fully distributed version of QoCoVi based on the proximal Jacobi alternating direction method of multipliers (ProxJ-ADMM) technique. The effectiveness of the proposed approach is confirmed through emulation with Mininet-WiFi in different scenarios.
