Title: INTENSE: In-depth Studies on Stall Events and Quality Switches and Their Impact on the Quality of Experience in HTTP Adaptive Streaming

Link: IEEE Access, A Multidisciplinary, Open-access Journal of the IEEE


Babak Taraghi (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Minh Nguyen (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Hadi Amirpour (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Christian Doppler Laboratory ATHENA, Alpen-Adria-Universität Klagenfurt)

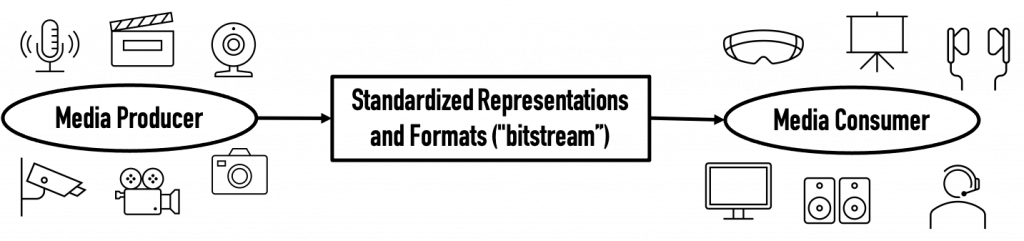

Abstract: With the recent growth of multimedia traffic over the Internet and the emergence of new multimedia streaming service providers, improving the Quality of Experience (QoE) of HTTP Adaptive Streaming (HAS) has become increasingly important. Alongside other factors, such as media quality, HAS relies on the performance of the media player’s Adaptive Bitrate (ABR) algorithm to optimize QoE in multimedia streaming sessions. QoE in HAS suffers from weak or unstable Internet connections and from suboptimal ABR decisions. When the player adapts imperfectly to the characteristics and conditions of the Internet connection, stall events and quality-level switches of varying durations can occur, and both negatively affect QoE. In this paper, we address several identified open issues related to QoE in HAS, notably (i) the minimum noticeable duration of stall events in HAS; (ii) the correlation between media quality and the impact of stall events on QoE; (iii) end-user preference for multiple shorter stall events versus a single longer stall event; and (iv) end-user preference for media quality switches over stall events. We study these open issues from both objective and subjective evaluation perspectives and present the correlation between the two types of evaluation. The findings documented in this paper can serve as a baseline for improving ABR algorithms and policies in HAS.

Keywords: Crowdsourcing; HTTP Adaptive Streaming; Quality of Experience; Quality Switches; Stall Events; Subjective Evaluation; Objective Evaluation.