Authors: Zahra Najafabadi Samani, Narges Mehran, Dragi Kimovski, Shajulin Benedikt, Nishant Saurabh, Radu Prodan

IEEE Transactions on Parallel and Distributed Systems

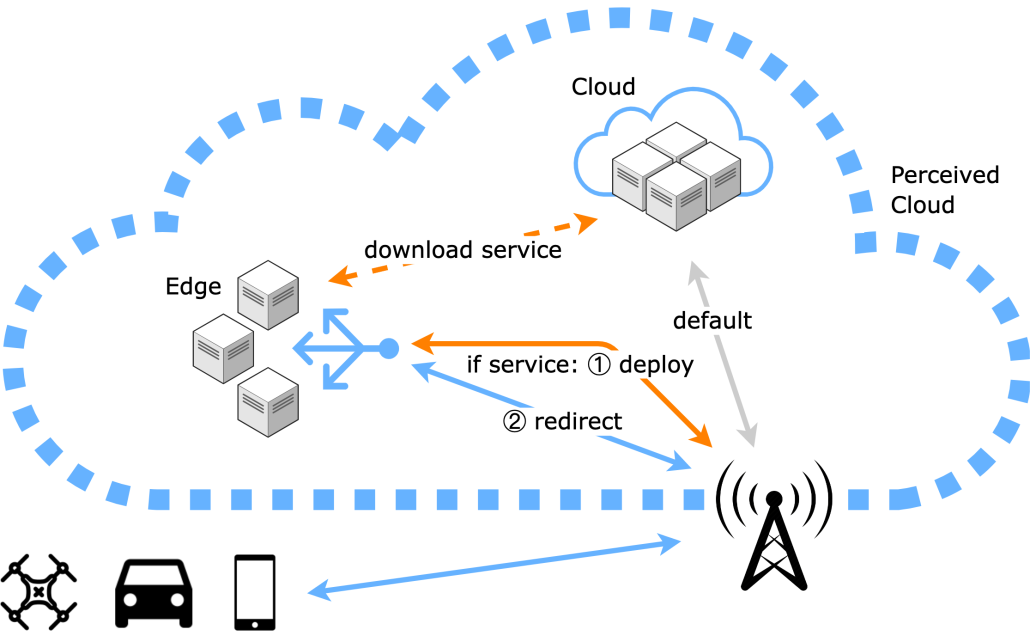

Abstract: Fog computing platforms have become essential for deploying low-latency applications at the network’s edge. However, placing and managing time-critical applications on a Fog infrastructure composed of many heterogeneous, resource-constrained devices connected by a dynamic network is challenging. This paper proposes an incremental multilayer resource-aware partitioning (M-RAP) method that minimizes resource wastage and maximizes service placement and deadline satisfaction in a dynamic Fog serving many application requests. M-RAP represents the heterogeneous Fog resources as a multilayer graph, partitions it based on the network structure and resource types, and continuously updates it upon dynamic changes in the underlying Fog infrastructure. Finally, it identifies the device partitions for placing the application services according to their resource requirements; these device partitions must overlap within the same low-latency network partition. We evaluated M-RAP through extensive simulation and with two applications executed on a real testbed. The results show that, compared to three state-of-the-art methods, M-RAP can place 1.6 times as many services, satisfy deadlines for 43% more applications, lower their response time by up to 58%, and reduce resource wastage by up to 54%.
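The placement idea summarized in the abstract can be illustrated with a toy sketch. This is not the M-RAP algorithm itself: the abstract does not specify how the partitioning works, so the device records, the precomputed network-partition labels, and the function names `build_layers`, `candidate_devices`, and `place` are all assumptions made for illustration. The sketch only shows the final step, selecting devices that appear in every required resource-type group within one network partition.

```python
from collections import defaultdict

# Hypothetical device records: (name, network partition label, resource capacities).
# In this sketch the network partition labels are assumed to be given already.
DEVICES = [
    ("d1", "net-A", {"cpu": 4, "memory": 8}),
    ("d2", "net-A", {"cpu": 2, "storage": 64}),
    ("d3", "net-B", {"cpu": 8, "memory": 16, "storage": 128}),
    ("d4", "net-B", {"memory": 4}),
]


def build_layers(devices):
    """Group device names by (network partition, resource type)."""
    layers = defaultdict(set)
    for name, net, resources in devices:
        for rtype in resources:
            layers[(net, rtype)].add(name)
    return layers


def candidate_devices(layers, net, required_types):
    """Devices in one network partition that appear in every required resource group."""
    groups = [layers.get((net, rtype), set()) for rtype in required_types]
    return set.intersection(*groups) if groups else set()


def place(devices, required):
    """Return {network partition: sorted devices that can host the service}."""
    layers = build_layers(devices)
    capacity = {name: res for name, _, res in devices}
    result = {}
    for net in sorted({net for _, net, _ in devices}):
        fits = [
            name
            for name in candidate_devices(layers, net, required)
            # Keep only devices whose capacity covers every requested amount.
            if all(capacity[name][r] >= amount for r, amount in required.items())
        ]
        if fits:
            result[net] = sorted(fits)
    return result
```

For example, `place(DEVICES, {"cpu": 2, "memory": 4})` keeps only the devices that offer both resource types with sufficient capacity in each network partition. A real system would also need to update the groups incrementally as devices join, leave, or change load, which this sketch omits.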