
On February 8, 2023, EduDay – organised by the educational lab and students of the HAK 1 Klagenfurt – took place for the first time. Several hundred students were guided through the laboratories and got their first insight into research. CD laboratory ATHENA participated as well and presented background and results from the world of video streaming to the interested participants.


Authors: Zahra Najafabadi Samani (Alpen-Adria-Universität Klagenfurt, Austria), Narges Mehran (Alpen-Adria-Universität Klagenfurt, Austria), Dragi Kimovski (Alpen-Adria-Universität Klagenfurt, Austria), Radu Prodan (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: The accelerating growth of modern distributed applications with low delivery deadlines leads to a paradigm shift towards the multi-tier computing continuum. However, the geographical dispersion, heterogeneity, and availability of the continuum resources may result in failures and quality of service degradation, significantly negating its advantages and lowering users’ satisfaction. We propose in this paper PROS, a proactive application placement method relying on distributed coordination to prevent quality of service violations through service-level agreements on the computing continuum. PROS employs a sigmoid function with adaptive weights for the different parameters to predict the service-level agreement assurance of devices based on their past credentials and current capabilities. We evaluate PROS using two application workloads with different traffic stress levels of up to 90 million services on a real testbed with 600 heterogeneous instances deployed over eight geographical locations. The results show that PROS increases the success rate by 7-33%, reduces the response time by 16-38%, and increases the deadline satisfaction rate by 19-42% compared to two related methods. A comprehensive simulation study with 1000 devices and a workload of up to 670 million services confirms the scalability of the results.
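As a rough illustration of the idea of scoring devices with a sigmoid over weighted parameters, the sketch below may help. It is not the PROS implementation: the feature names, the gradient-style weight update, and all function names are assumptions made for this example, since the abstract does not specify them.

```python
import math


def sla_assurance(features, weights, bias=0.0):
    """Map a weighted sum of device parameters to a score in (0, 1)."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def adapt_weights(features, weights, outcome, rate=0.1):
    """One gradient step on the logistic loss (an assumed update rule).

    outcome is 1.0 if the device met the agreement, otherwise 0.0.
    """
    error = outcome - sla_assurance(features, weights)
    return [w + rate * error * x for w, x in zip(weights, features)]


def pick_device(devices, weights):
    """Return the name of the device with the highest predicted assurance."""
    return max(devices, key=lambda name: sla_assurance(devices[name], weights))


# Hypothetical features: [past success rate, free capacity, negated latency]
devices = {
    "edge-a": [0.9, 0.8, -0.2],
    "cloud-b": [0.6, 0.9, -0.7],
}
weights = [1.0, 1.0, 1.0]
best = pick_device(devices, weights)
```

The sigmoid keeps every score between 0 and 1, so devices with very different raw parameters can be compared on one scale, and the weights can be nudged after each observed success or failure.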

IEEE International Conference on Communications (ICC)

28 May – 01 June 2023 – Rome, Italy

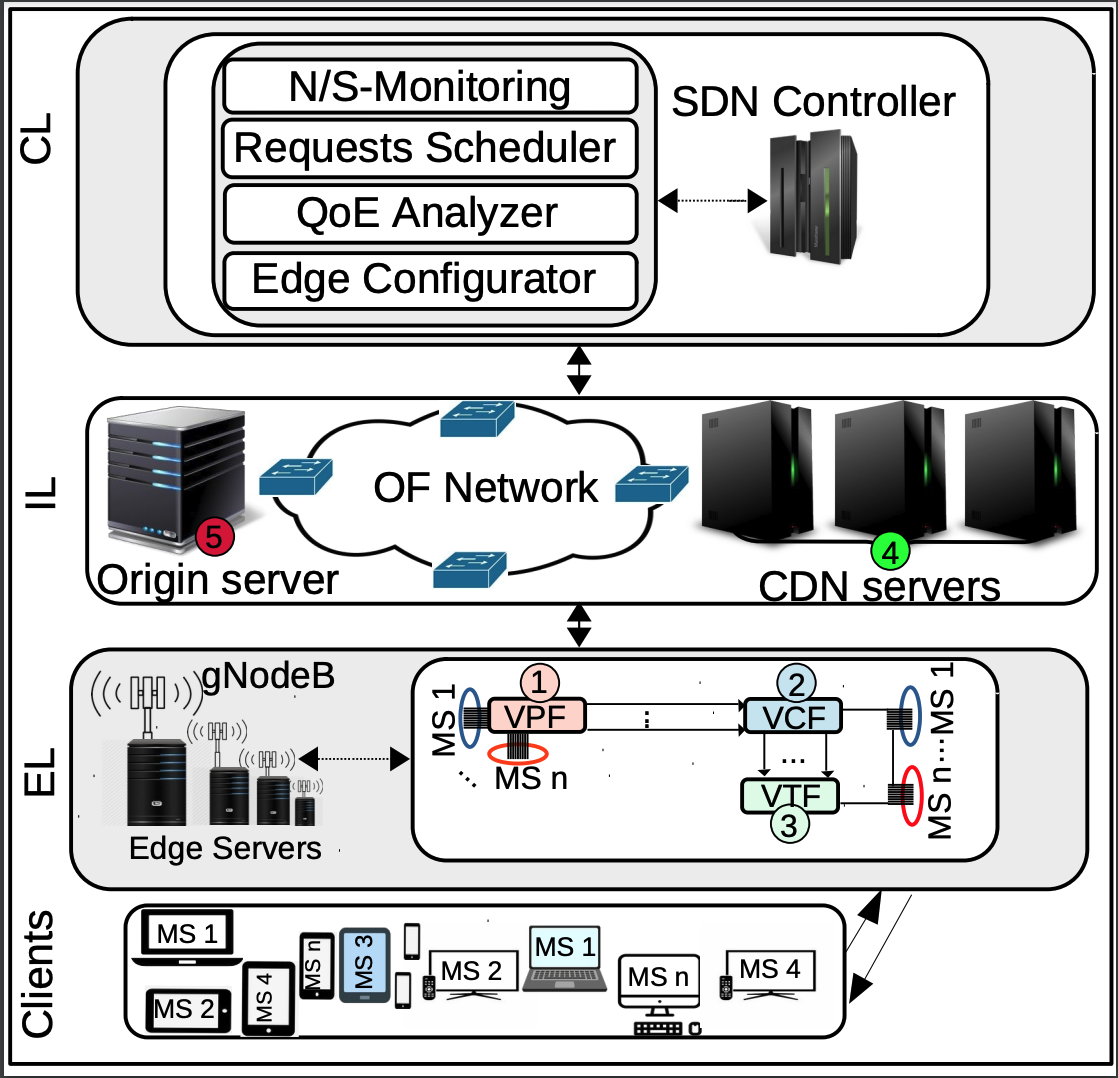

Reza Farahani (Alpen-Adria-Universität Klagenfurt), Abdelhak Bentaleb (Concordia University, Canada), Christian Timmerer (Alpen-Adria-Universität Klagenfurt), Mohammad Shojafar (University of Surrey, UK), Radu Prodan (Alpen-Adria-Universität Klagenfurt), and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt)

Abstract: 5G and 6G networks are expected to support various novel emerging adaptive video streaming services (e.g., live, VoD, immersive media, and online gaming) with versatile Quality of Experience (QoE) requirements such as high bitrate, low latency, and sufficient reliability. It is widely agreed that these requirements can be satisfied by adopting emerging networking paradigms like Software-Defined Networking (SDN), Network Function Virtualization (NFV), and edge computing. Previous studies have leveraged these paradigms to present network-assisted video streaming frameworks, but mostly in isolation without devising chains of Virtualized Network Functions (VNFs) that consider the QoE requirements of various types of Multimedia Services (MS).

To bridge the aforementioned gaps, we first introduce a set of multimedia VNFs at the edge of an SDN-enabled network and form diverse Service Function Chains (SFCs) based on the QoE requirements of different MS. We then propose SARENA, an SFC-enabled ArchitectuRe for adaptive VidEo StreamiNg Applications. Next, we formulate the problem as a central scheduling optimization model executed at the SDN controller. We also present a lightweight heuristic solution consisting of two phases that run on the SDN controller and edge servers to alleviate the time complexity of the optimization model in large-scale scenarios. Finally, we design a large-scale cloud-based testbed, including 250 HTTP Adaptive Streaming (HAS) players requesting two popular MS applications (i.e., live and VoD), conduct various experiments, and compare SARENA's effectiveness with baseline systems. Experimental results illustrate that SARENA outperforms baseline schemes in terms of users’ QoE by at least 39.6%, latency by 29.3%, and network utilization by 30% for both MS.

Index Terms: HAS; DASH; NFV; SFC; SDN; Edge Computing.

We meet Felix Schniz for an interview in Lakeside Park, in the CD laboratory ATHENA, building B12B, to learn something about him and his work and why he chose his career. For those who don’t yet know Felix: he is always neatly dressed, has a smile on his lips and is eager for a mutual exchange of ideas and opinions. So he was quick to accept the invitation to be the first person on the new “People Behind Informatics” journey. He is passionate about his work and is happy to share his views with us.

Hello Felix, thanks for taking the time to talk to us. Please tell me something about yourself, where you come from, and how your professional career has evolved.

I was born in Bietigheim-Bissingen near Stuttgart. I studied in Mannheim, with the focus of my Bachelor’s degree on English and American Studies. For my Master’s, I specialized in culture in the process of modernity. In addition to literature and film, we also dealt with digitization processes, and that’s how I came to the video game area. That was my “unusual entry” into technical sciences. After my Master’s degree, it was clear to me: I wanted to write a doctoral thesis on video games. The academic path is simply mine, and the topic offers many exciting perspectives, as it is still largely unexplored. During my search for the right environment for such a research project, I met René Schallegger at a conference in Oxford. We stayed in contact. When a vacancy for a university assistant was advertised at the Department of English in 2016, I applied for this position, started my doctorate at the same time and have been here ever since.

Such a coincidence, and very lucky that you found exactly what you were looking for. How was your start at the University of Klagenfurt?

I started immediately and also took on the role of the SPL (programme director) of the Master’s degree in “Game Studies and Engineering“, which combines both – humanities and technical aspects. This is also what is special about this programme: the students learn technical approaches to video games and what kind of a role a technical medium plays in society.

What do you particularly like about your work?

I am taken seriously and can combine my passion for technology and humanities. I am very happy to question: What is the reason for that, what is behind it, and what else needs to be considered? I can live that to the full in my work.

And how did your doctorate continue?

In my doctorate, I asked the research question of what a video game experience actually is. It’s not that easy to name and has to be illuminated from many sides. Philosophically – psychologically – sociologically – media science… The path goes from one’s own, personal to the technical implementation. I wrote theoretical basics, worked with content analyses and scientifically processed my own experiences. This gave me a new, exciting field of questions for myself and research on video games – because how can we speak scientifically about the content of the medium when we experience it in such a personal way?

What consensus emerged for you?

Video games help us to get a bigger, better picture of people in the digital age. We have to ask ourselves what kind of influence video games can and should have in the future, and we need to raise awareness of the responsibility that video game programmers have. Programmers should also ask themselves what they want to offer people. The virtual worlds that video games open up can offer us a lot, but we have to learn how to deal with them.

In short, I have to ask myself: What do I want to achieve with technology? What role should it play in my life?

Over the past few years, one has been able to follow what role virtual worlds can play in the lives of people. The well-known video game “Fortnite”, for example, was suddenly not just a popular game, but also a much-needed social meeting point, and a retreat for young people, whose social and private spaces were taken away by the pandemic.

Video games can be of great importance for each of us. They can offer us things we need emotionally, socially, or intellectually, or allow us to explore ourselves. This does not mean that the virtual should replace the real world – but it can be a great addition to it. In order to continue to pursue these thoughts in targeted extracts, I also wrote a lot about coping with grief in addition to my doctoral thesis. I am currently working on a book about the spiritual experience of interactive media in general. It will be published later this year.

Thank you very much for inviting us into your interesting area of work. We wish you a lot of joy and success in your favourite research area.

Journal Website: Journal of Network and Computer Applications


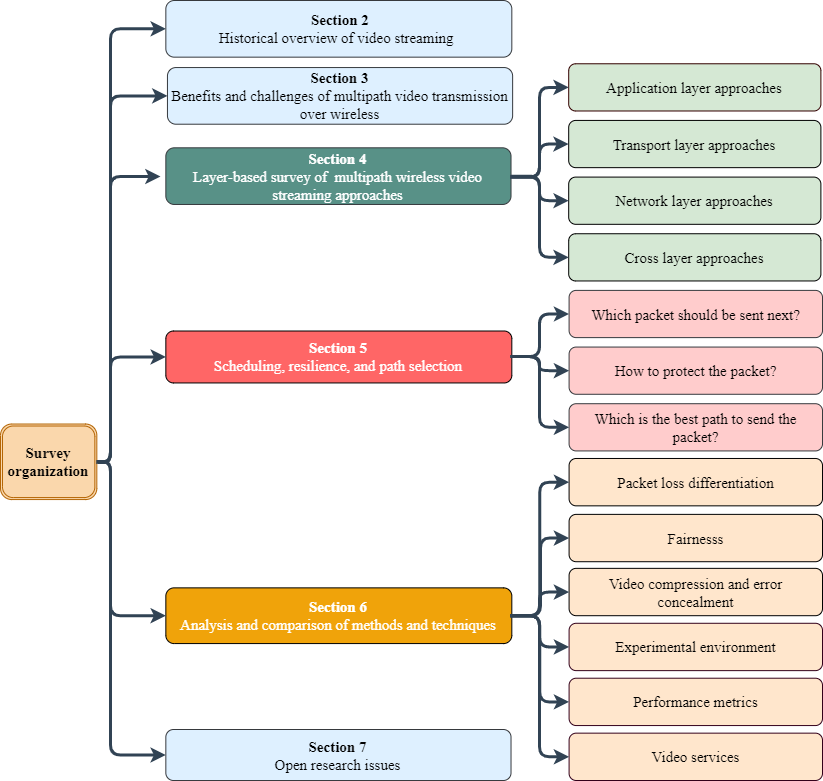

Samira Afzal (Alpen-Adria-Universität Klagenfurt), Vanessa Testoni (unico IDtech), Christian Esteve Rothenberg (University of Campinas), Prakash Kolan (Samsung Research America), and Imed Bouazizi (Qualcomm)

Abstract: Demand for wireless video streaming services increases with users expecting to access high-quality video streaming experiences. Ensuring Quality of Experience (QoE) is quite challenging due to varying bandwidth and time constraints. Since most of today’s mobile devices are equipped with multiple network interfaces, one promising approach is to benefit from multipath communications. Multipathing leads to higher aggregate bandwidth, and distributing video traffic over multiple network paths improves stability, seamless connectivity, and QoE. However, most current transport protocols do not match the requirements of video streaming applications or are not designed to address relevant issues, such as network heterogeneity, head-of-line blocking, and delay constraints. In this comprehensive survey, we first review video streaming standards and technology developments. We then discuss the benefits and challenges of multipath video transmission over wireless. We provide a holistic literature review of multipath wireless video streaming, shedding light on the different alternatives from an end-to-end layered stack perspective, reviewing key multipath wireless scheduling functions, unveiling trade-offs of each approach, and presenting a suitable taxonomy to classify the state-of-the-art. Finally, we discuss open issues and avenues for future work.

Journal: Sensors

Authors: Akif Quddus Khan, Nikolay Nikolov, Mihhail Matskin, Radu Prodan, Dumitru Roman, Bekir Sahin, Christoph Bussler, Ahmet Soylu

Abstract: Big data pipelines are developed to process data characterized by one or more of the three big data features, commonly known as the three Vs (volume, velocity, and variety), through a series of steps (e.g., extract, transform, and move), laying the groundwork for the use of advanced analytics and ML/AI techniques. The computing continuum (i.e., cloud/fog/edge) allows access to a virtually infinite amount of resources, where data pipelines could be executed at scale; however, the implementation of data pipelines on the continuum is a complex task that needs to take computing resources, data transmission channels, triggers, data transfer methods, integration of message queues, etc., into account. The task becomes even more challenging when data storage is considered as part of the data pipelines. Local storage is expensive, hard to maintain, and comes with several challenges (e.g., data availability, data security, and backup). The use of cloud storage, i.e., storage-as-a-service (StaaS), instead of local storage has the potential of providing more flexibility in terms of scalability, fault tolerance, and availability. In this article, we propose a generic approach to integrate StaaS with data pipelines, i.e., computation on an on-premise server or on a specific cloud, but integration with StaaS, and develop a ranking method for available storage options based on five key parameters: cost, proximity, network performance, server-side encryption, and user weights/preferences. The evaluation carried out demonstrates the effectiveness of the proposed approach in terms of data transfer performance, utility of the individual parameters, and feasibility of dynamic selection of a storage option based on four primary user scenarios.

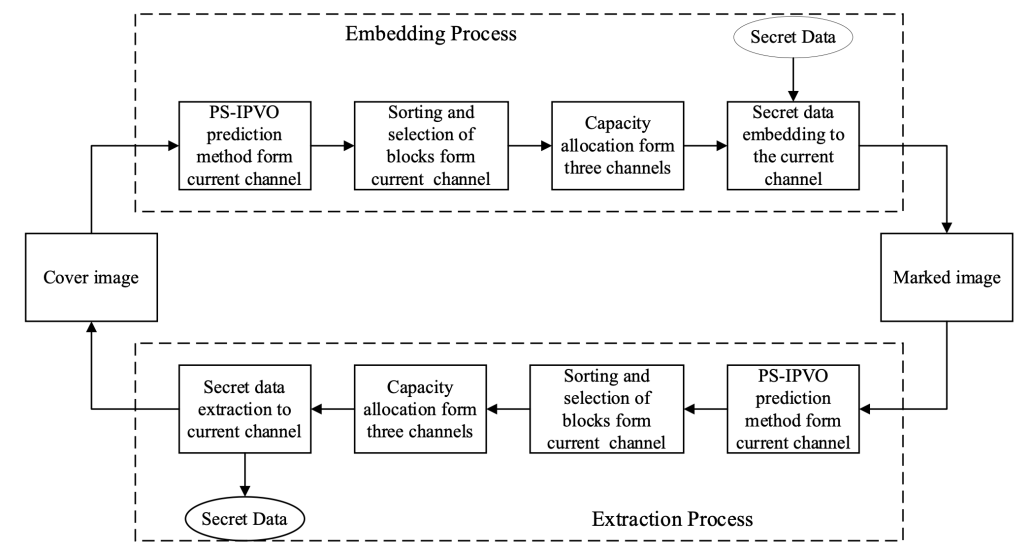

Authors: Ningxiong Mao (Southwest Jiaotong University), Hongjie He (Southwest Jiaotong University), Fan Chen (Southwest Jiaotong University), Lingfeng Qu (Southwest Jiaotong University), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: Color image Reversible Data Hiding (RDH) is getting more and more important since the number of its applications is steadily growing. This paper proposes an efficient color image RDH scheme based on pixel value ordering (PVO), in which the channel correlation is fully utilized to improve the embedding performance. In the proposed method, the channel correlation is used in the overall process of data embedding, including the prediction stage, block selection, and capacity allocation. In the prediction stage, since the pixel values in the co-located blocks in different channels are monotonically consistent, the large pixel values are collected preferentially by pre-sorting the intra-block pixels. This can effectively improve the embedding capacity of RDH based on PVO. In the block selection stage, the description accuracy of the block complexity value is improved by exploiting the texture similarity between the channels. The smoother blocks are then preferentially used to reduce invalid shifts. To achieve low complexity and high accuracy in capacity allocation, the proportion of the expanded prediction error to the total expanded prediction error in each channel is calculated during the capacity allocation process. The experimental results show that the proposed scheme achieves significant superiority in fidelity over a series of state-of-the-art schemes. For example, the PSNR of the Lena image reaches 62.43 dB, which is a 0.16 dB gain compared to the best results in the literature with a 20,000-bit embedding capacity.
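For readers unfamiliar with PVO, the following sketch shows the basic single-channel idea on one block, using only the largest pixel. It is not the color scheme proposed in the paper (no channel correlation, block selection, or capacity allocation), and it assumes that no pixel is at the maximum value 255, which practical schemes handle separately. Function names are illustrative.

```python
def _two_largest(block):
    """Indices of the largest and second-largest pixels (ties broken by index)."""
    order = sorted(range(len(block)), key=lambda i: (block[i], i))
    return order[-1], order[-2]


def pvo_embed_max(block, bit):
    """Embed at most one bit into the largest pixel of a block.

    Returns (marked_block, embedded), where embedded tells whether the bit
    was consumed. Assumes every pixel is below 255 (no overflow handling).
    """
    i_max, i_second = _two_largest(block)
    error = block[i_max] - block[i_second]
    marked = list(block)
    if error == 1:
        marked[i_max] += bit       # expand: error becomes 1 (bit 0) or 2 (bit 1)
        return marked, True
    if error > 1:
        marked[i_max] += 1         # shift so the block stays distinguishable
    return marked, False           # error == 0 blocks are left untouched


def pvo_extract_max(marked):
    """Recover the original block and the hidden bit (None if no bit)."""
    i_max, i_second = _two_largest(marked)
    error = marked[i_max] - marked[i_second]
    restored = list(marked)
    if error == 1:
        return restored, 0
    if error == 2:
        restored[i_max] -= 1
        return restored, 1
    if error > 2:
        restored[i_max] -= 1       # undo the shift
    return restored, None


original = [120, 122, 121, 119]
marked, embedded = pvo_embed_max(original, 1)
restored, bit = pvo_extract_max(marked)
```

Because only the largest pixel grows, the ordering inside the block is preserved, so the decoder can find the same two pixels and undo the change exactly.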

Keywords—Reversible data hiding, color image, pixel value ordering, channel correlation

IEEE ISM’2022 (https://www.ieee-ism.org/)

Authors: Shivi Vats, Jounsup Park, Klara Nahrstedt, Michael Zink, Ramesh Sitaraman, and Hermann Hellwagner

Abstract: In a 5G testbed, we use 360° video streaming to test, measure, and demonstrate the 5G infrastructure, including the capabilities and challenges of edge computing support. Specifically, we use the SEAWARE (Semantic-Aware View Prediction) software system, originally described in [1], at the edge of the 5G network to support a 360° video player (handling tiled videos) by view prediction. Originally, SEAWARE performs semantic analysis of a 360° video on the media server, by extracting, e.g., important objects and events. This video semantic information is encoded in specific data structures and shared with the client in a DASH streaming framework. Making use of these data structures, the client/player can perform view prediction without in-depth, computationally expensive semantic video analysis. In this paper, the SEAWARE system was ported and adapted to run (partially) on the edge where it can be used to predict views and prefetch predicted segments/tiles in high quality in order to have them available close to the client when requested. The paper gives an overview of the 5G testbed, the overall architecture, and the implementation of SEAWARE at the edge server. Since an important goal of this work is to achieve low motion-to-glass latencies, we developed and describe “tile postloading”, a technique that allows non-predicted tiles to be fetched in high quality into a segment already available in the player buffer. The performance of 360° tiled video playback on the 5G infrastructure is evaluated and presented. Current limitations of the 5G network in use and some challenges of DASH-based streaming and of edge-assisted viewport prediction under “real-world” constraints are pointed out; further, the performance benefits of tile postloading are disclosed.

IEEE Transactions on Image Processing (TIP)


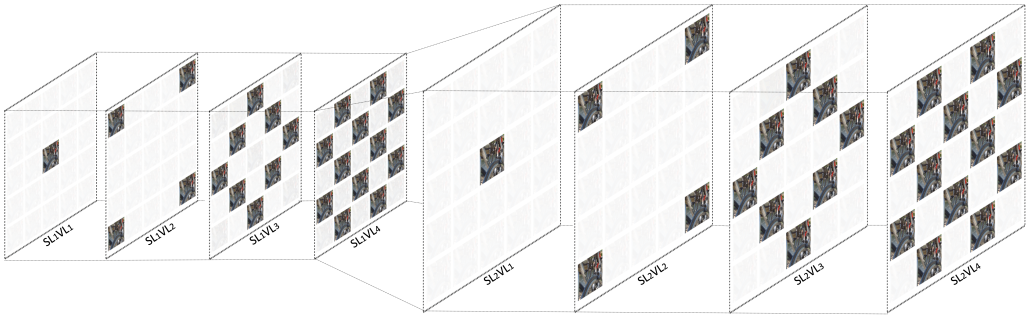

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), Christine Guillemot (INRIA, France), Mohammad Ghanbari (University of Essex, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

Abstract: Light field imaging, which captures both spatial and angular information, improves user immersion by enabling post-capture actions, such as refocusing and changing view perspective. However, light fields represent very large volumes of data with a lot of redundancy that coding methods try to remove. State-of-the-art coding methods indeed usually focus on improving compression efficiency and overlook other important features in light field compression such as scalability. In this paper, we propose a novel light field image compression method that enables (i) viewport scalability, (ii) quality scalability, (iii) spatial scalability, (iv) random access, and (v) uniform quality distribution among viewports, while keeping compression efficiency high. To this end, light fields in each spatial resolution are divided into sequential viewport layers, and viewports in each layer are encoded using the previously encoded viewports. In each viewport layer, the available viewports are used to synthesize intermediate viewports using a video interpolation deep learning network. The synthesized views are used as virtual reference images to enhance the quality of intermediate views. An image super-resolution method is applied to improve the quality of the lower spatial resolution layer. The super-resolved images are also used as virtual reference images to improve the quality of the higher spatial resolution layer.

The proposed structure also improves the flexibility of light field streaming, provides random access to the viewports, and increases error resiliency. The experimental results demonstrate that the proposed method achieves a high compression efficiency and it can adapt to the display type, transmission channel, network condition, processing power, and user needs.

Keywords—Light field, compression, scalability, random access, deep learning.