The 5th Annual Lifelog Search Challenge (LSC 2022), co-organized by Klaus Schöffmann, will be this year’s grand challenge at the ACM International Conference on Multimedia Retrieval (ICMR 2022) in Newark, NJ, USA. More information here: https://www.icmr2022.org/program/challenges/

Medical Multimedia Information Systems

Klaus Schöffmann will give an invited talk about Relevant Content Detection in Cataract Surgery Videos at the IEEE International Conference on Image Processing Theory, Tools and Applications (IPTA) on April 19, 2022, in Salzburg, Austria. More information here: https://ipta-conference.com/ipta22/index.php/invited-speakers

Klaus Schöffmann will give a tutorial about Medical Video Processing at the IEEE International Conference on Image Processing Theory, Tools and Applications (IPTA) on April 19, 2022, in Salzburg, Austria. More information here: https://ipta-conference.com/ipta22/index.php/invited-speakers

We are happy that our 2nd International Workshop on Interactive Multimedia Retrieval (IMuR) has been accepted for the ACM Multimedia Conference 2022 (ACMMM) in Lisbon, Portugal. More information can be found here: https://sites.google.com/view/imur2022

On April 6th, 2022, Natalia Mathá (former Sokolova) successfully defended her thesis on “Relevance Detection and Relevance-Based Video Compression in Cataract Surgery Videos” under the supervision of Assoc.-Prof. Klaus Schöffmann and Assoc.-Prof. Christian Timmerer. The defense was chaired by Univ.-Prof. Hermann Hellwagner and the examiners were Assoc.-Prof. Konstantin Schekotihin and Assoc.-Prof. Mathias Lux. Congratulations to Dr. Mathá for this great achievement!

Congratulations to Natalia Sokolova, whose journal paper “Automatic detection of pupil reactions in cataract surgery videos” has been accepted by PLOS ONE. The paper was authored by Natalia Sokolova, Klaus Schoeffmann, Mario Taschwer, Stephanie Sarny, Doris Putzgruber-Adamitsch, and Yosuf El-Shabrawi.

With his master's thesis on “Animating Characters using Deep Learning based Pose Estimation”, Fabian Schober won the “Dynatrace Outstanding IT-Thesis Award” (DO*IT*TA). The award brings attention to extraordinary theses, motivates creativity, and provides insight into modern technologies.

In his thesis, Fabian Schober focuses on animating 2D (video game) characters using the PoseNet pose estimation model. He delivers a proof of concept showing how new machine learning technologies can assist in video game development. Read more in the university press release (German only).

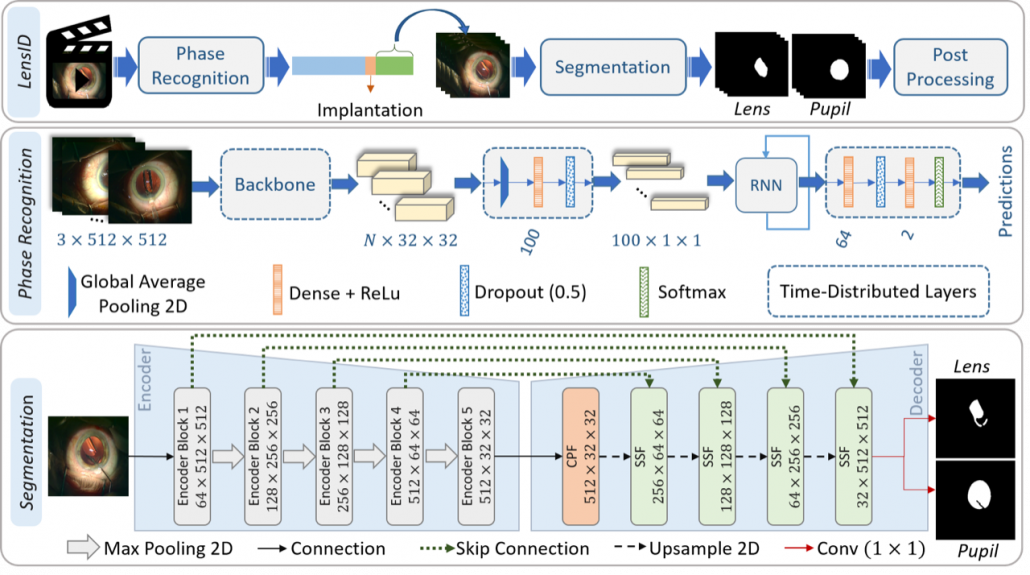

Our paper on LensID, a CNN-RNN-based framework for irregularity detection in cataract surgery videos, has been accepted at the prestigious MICCAI 2021 conference (International Conference on Medical Image Computing & Computer Assisted Intervention). Negin Ghamsarian will present the details of her work on this topic at the end of September.

Authors: Negin Ghamsarian, Mario Taschwer, Doris Putzgruber-Adamitsch, Stephanie Sarny, and Klaus Schoeffmann

Link: https://www.miccai2021.org/en/

Authors: Nakisa Shams (ETS, Montreal, Canada), Hadi Amirpour (Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Bitmovin), and Mohammad Ghanbari (School of Computer Science and Electronic Engineering, University of Essex, Colchester, UK)

Abstract: By utilizing the spectrum holes in licensed frequency bands, cognitive radio networks are able to manage the radio spectrum efficiently. A significant improvement in spectrum use can be achieved by giving secondary users access to these spectrum holes. Predicting spectrum holes can save a significant amount of the energy that is otherwise consumed in detecting them, because the secondary users can then select only the channels that are predicted to be idle. However, collisions can occur either between a primary user and secondary users or among the secondary users themselves. This paper introduces a centralized channel allocation algorithm for a scenario with multiple secondary users to control both primary and secondary collisions. The proposed allocation algorithm, which uses a channel status predictor, provides good performance with fairness among the secondary users while keeping their interference with the primary user minimal. The simulation results show that the probability of a wrong prediction of an idle channel state in a multi-channel system is less than 0.9%. In addition, channel state prediction saves up to 73% of the sensing energy, and the utilization of the spectrum can be improved by more than 77%.

Keywords: Cognitive radio, Biological neural networks, Prediction, Idle channel.

International Congress on Information and Communication Technology

25-26 February 2021, London, UK

Today, Klaus Schöffmann will present his keynote talk on “Deep Video Understanding and the User” at the ACM Multimedia 2020 Grand Challenge (GC) on “Deep Video Understanding”. The talk will highlight user aspects of automatic video content search based on deep neural networks and show several examples where users have serious difficulty finding the correct scene when video search systems rely too much on the “automatic search” scenario and ignore the user behind it. Registered users of ACMMM2020 can watch the talk online; the corresponding GC is scheduled for October 14 from 21:00-22:00 (15:00-16:00 NY Time).

Link: https://2020.acmmm.org/