Presentation of Radu Prodan on “Massive Graphs on the Computing Continuum” in the seminar on “AI meets complex knowledge structures: Neuro-Symbolic AI and Graph Technologies” at the Oslo Metropolitan University.

Babak Taraghi, Hermann Hellwagner and Christian Timmerer (Alpen-Adria-Universität Klagenfurt)

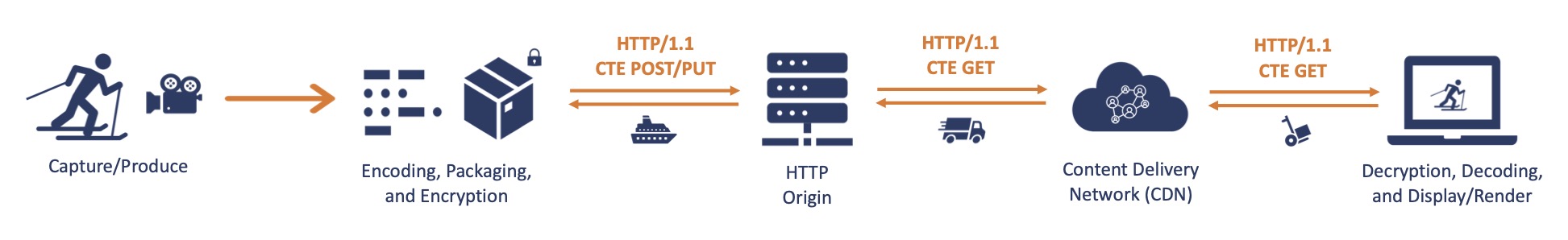

Low-latency live streaming by HTTP Chunked Transfer Encoding

Abstract: Live media streaming is a challenging task by itself, and the complexity rises considerably for use cases that require low latency. In a typical media streaming session, the main goal is to provide the highest possible Quality of Experience (QoE), which has proved to be measurable using quality models and various metrics. In a low-latency media streaming session, the requirement is to provide the lowest possible delay between the moment a frame of video is captured and the moment that the captured frame is rendered on the client screen, also known as end-to-end (E2E) latency, while maintaining the QoE. As its primary contribution, this paper proposes a sophisticated cloud-based and open-source testbed that facilitates evaluating a low-latency live streaming session. The Live Low-Latency Cloud-based Adaptive Video Streaming Evaluation (LLL-CAdViSE) framework can assess live streaming systems running on two major HTTP Adaptive Streaming (HAS) formats, Dynamic Adaptive Streaming over HTTP (MPEG-DASH) and HTTP Live Streaming (HLS). We use Chunked Transfer Encoding (CTE) to deliver Common Media Application Format (CMAF) chunks to the media players. Our testbed generates the test content (audiovisual streams). Therefore, no test sequence is required, and the encoding parameters (e.g., encoder, bitrate, resolution, latency) are defined separately for each experiment. We have integrated the ITU-T P.1203 quality model into our testbed. As a secondary contribution, and to demonstrate the flexibility and power of LLL-CAdViSE, we have conducted a set of experiments with different network traces, media players, ABR algorithms, and various requirements (e.g., E2E latency (typical/reduced/low/ultra-low), diverse bitrate ladders, and catch-up logic), and we present the essential findings and the experimental results.

Keywords: Live Streaming; Low-latency; HTTP Adaptive Streaming; Quality of Experience; Objective Evaluation; Open-source Testbed.
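To make the delivery mechanism named in the abstract more concrete, here is a minimal sketch of how HTTP/1.1 Chunked Transfer Encoding frames a body: each chunk is sent as its size in hexadecimal, a CRLF, the data, and another CRLF, and a zero-size chunk ends the body. The function names and the placeholder byte strings standing in for CMAF chunks are assumptions for illustration only; this is not code from LLL-CAdViSE, and the decoder ignores trailer fields.

```python
from typing import Iterable, Iterator, List

# A zero-length chunk followed by an empty line terminates the body.
LAST_CHUNK = b"0\r\n\r\n"


def encode_chunk(payload: bytes) -> bytes:
    """Frame one payload as an HTTP/1.1 chunk: hex size, CRLF, data, CRLF."""
    return f"{len(payload):X}".encode("ascii") + b"\r\n" + payload + b"\r\n"


def encode_stream(payloads: Iterable[bytes]) -> Iterator[bytes]:
    """Yield framed chunks as soon as each payload is available."""
    for payload in payloads:
        if payload:  # an empty payload would be read as the terminator
            yield encode_chunk(payload)
    yield LAST_CHUNK


def decode_stream(data: bytes) -> List[bytes]:
    """Split a complete chunked body back into its payloads (no trailers)."""
    payloads = []
    pos = 0
    while True:
        eol = data.index(b"\r\n", pos)
        # Anything after ';' on the size line is a chunk extension; skip it.
        size = int(data[pos:eol].split(b";")[0], 16)
        pos = eol + 2
        if size == 0:
            break
        payloads.append(data[pos:pos + size])
        pos += size + 2  # skip the data and its trailing CRLF
    return payloads


# Placeholder payloads standing in for CMAF chunks (hypothetical content).
body = b"".join(encode_stream([b"chunk-1", b"chunk-22"]))
print(decode_stream(body))
```

Because the sender does not need to know the total body length in advance, it can start transmitting a segment before the encoder has finished producing it, which is what makes this framing attractive for low-latency delivery.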

14th ACM Multimedia Systems Conference (MMSys)

7 – 10 June 2023 | Vancouver, BC, Canada

Daniele Lorenzi (Alpen-Adria-Universität Klagenfurt)

Abstract:

Video streaming services account for the majority of today’s traffic on the Internet, and according to recent studies, this share is expected to continue growing. This implies that many people around the globe use video streaming services on a daily basis to consume video content. Given this broad utilization, research in video streaming has recently been moving towards energy-aware approaches, which aim to minimize the energy consumption of the devices involved. On the other hand, the perception of quality delivered to the user plays an important role, and the advent of HTTP Adaptive Streaming (HAS) changed the way quality is perceived. The focus moved from the Quality of Service (QoS) towards the Quality of Experience (QoE) of the user taking part in the streaming session. Therefore, video streaming services need to develop Adaptive BitRate (ABR) techniques to deal with different network environments on the client side, or appropriate end-to-end strategies, to provide high QoE to the users. The scope of this doctoral study is within the end-to-end environment with a focus on the end-user domain, referred to as the player environment, including video content consumption and interactivity. This thesis aims to investigate and develop different techniques to increase the QoE delivered to the users and reduce the energy consumption of the end devices in the HAS context. We present four main research questions to target the related challenges in the domain of content consumption for HAS systems.

On Friday and Saturday (March 10 and March 11, 2023), Sebastian Uitz presented his game “A Webbing Journey” with his partner Michael Steinkellner and Noel Treese at the Button Festival at the Messe Graz. Their booth consisted of two PCs, a Steam Deck and a Nintendo Switch, all running the game. This was the first time the two handheld devices were used at an event, and people loved playing on them. The Nintendo Switch was a fan favourite for all the kids. The older players gravitated towards the Steam Deck because it’s still a new console, and most of them had never had the chance to play on it before. As at other events, a lot of feedback in the form of new ideas for quests and possibilities to extend the game was gathered, which will be implemented in the following weeks and months, ready for the next event in May.

5th Workshop on Parallel AI and Systems for the Edge (PAISE 2023) held in conjunction with 37th IEEE International Parallel & Distributed Processing Symposium (IPDPS 2023) St. Petersburg, Florida, USA

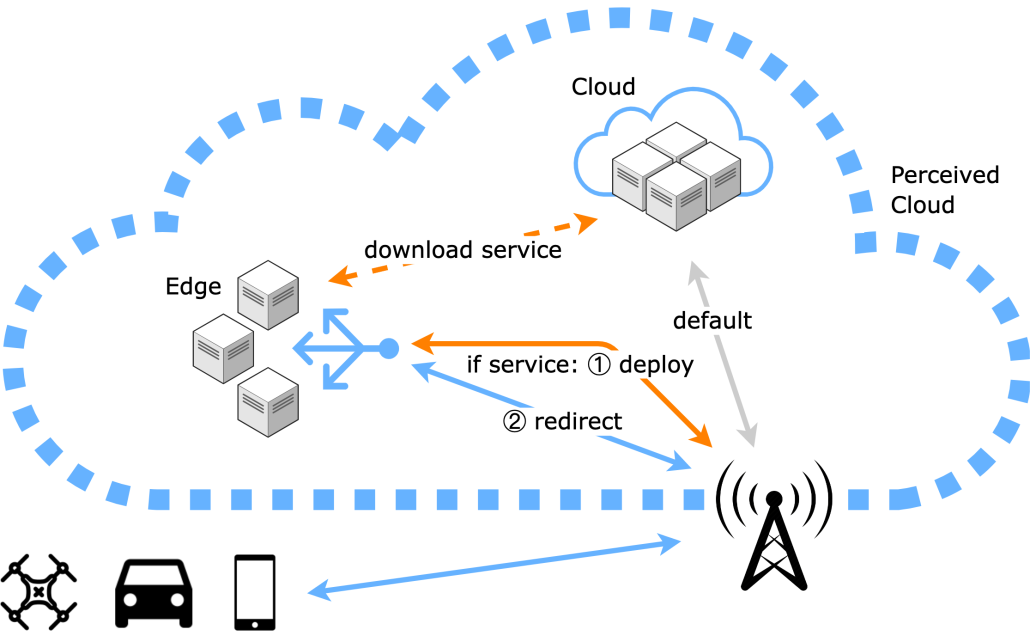

Authors: Josef Hammer and Hermann Hellwagner, Alpen-Adria-Universität Klagenfurt

Abstract: Multi-access Edge Computing (MEC) is a central piece of 5G telecommunication systems and is essential to satisfy the challenging low-latency demands of future applications. MEC provides a cloud computing platform at the edge of the radio access network. Our previous publications argue that edge computing should be transparent to clients, leveraging Software-Defined Networking (SDN). While we introduced a solution to implement such a transparent approach, one question remained: How to handle user requests to a service that is not yet running in a nearby edge cluster? One advantage of the transparent edge is that one could process the initial request in the cloud. However, this paper argues that on-demand deployment might be fast enough for many services, even for the first request. We present an SDN controller that automatically deploys an application container in a nearby edge cluster if no instance is running yet. In the meantime, the user’s request is forwarded to another (nearby) edge cluster or kept waiting to be forwarded immediately to the newly instantiated instance. Our performance evaluations on a real edge/fog testbed show that the waiting time for the initial request – e.g., for an nginx-based service – can be as low as 0.5 seconds – satisfactory for many applications.
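The decision the abstract describes for an initial request can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' SDN controller: the `EdgeCluster` class, the `handle_initial_request` function, the `hold_request` flag, and the cluster and service names are all hypothetical, and actual container deployment and flow-rule installation are reduced to bookkeeping in Python sets.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple


@dataclass
class EdgeCluster:
    """Hypothetical view of one edge cluster as seen by the controller."""
    name: str
    running: Set[str] = field(default_factory=set)    # services already up
    deploying: Set[str] = field(default_factory=set)  # deployments in flight


def handle_initial_request(
    service: str,
    nearest: EdgeCluster,
    neighbours: List[EdgeCluster],
    hold_request: bool = False,
) -> Tuple[str, str]:
    """Return (action, cluster name) for a request to `service`.

    - ("forward", nearest): an instance already runs in the nearest cluster.
    - ("forward", other): deployment was triggered nearby, and the request
      is served meanwhile by another cluster that already runs the service.
    - ("hold", nearest): deployment was triggered and the request waits
      until the new instance is ready.
    """
    if service in nearest.running:
        return ("forward", nearest.name)

    # No local instance yet: trigger on-demand deployment (bookkeeping only).
    nearest.deploying.add(service)

    if not hold_request:
        for cluster in neighbours:
            if service in cluster.running:
                return ("forward", cluster.name)

    return ("hold", nearest.name)


edge_a = EdgeCluster("edge-a")
edge_b = EdgeCluster("edge-b", running={"web"})
print(handle_initial_request("web", edge_a, [edge_b]))
```

Whether holding the request is acceptable depends on the deployment time of the service; the abstract reports that this waiting time can be as low as 0.5 seconds for an nginx-based service on the authors' testbed.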

The seminar talks take place every two weeks and are co-organized with the research group of Networks and Distributed Computing at the University of Liverpool, as part of the Durham-Liverpool synergy. The contact person of this synergy in Liverpool is Leszek Gasieniec.

The seminar talks will be streamed online on Zoom. Whenever the speaker is physically present in Durham, the presentation will also take place in the Vis-Lab on the 1st floor of the MCS building (in addition to Zoom streaming). Please refer to the schedule below for room changes for selected talks.

NESTiD Seminar Coordinator: George Mertzios

Title: Extreme and Sustainable Graph Processing for Urgent Societal Challenges in Europe: The Graph-Massivizer project

Abstract: Graph-Massivizer is a Horizon Europe project that researches and develops a high-performance, scalable, and sustainable platform for information processing and reasoning based on the massive graph representation of extreme data. It delivers a toolkit of five open-source software tools and FAIR graph datasets covering the sustainable lifecycle of processing extreme data as massive graphs. The tools focus on holistic usability (from extreme data ingestion and massive graph creation), automated intelligence (through analytics and reasoning), performance modelling, and environmental sustainability tradeoffs, supported by credible data-driven evidence across the computing continuum. The automated operation based on the emerging serverless computing paradigm supports experienced and novice stakeholders from a broad group of large and small organisations to capitalise on extreme data through massive graph programming and processing. Graph-Massivizer validates its innovation on four complementary use cases considering their extreme data properties and coverage of the three sustainability pillars (economy, society, and environment): sustainable green finance, global environment protection foresight, green AI for the sustainable automotive industry, and data centre digital twin for exascale computing. Graph-Massivizer promises 70% more efficient analytics than AliGraph and 30% better energy awareness for ETL storage operations than Amazon Redshift. Furthermore, it aims to demonstrate a possible two-fold improvement in data centre energy efficiency and over 25% lower GHG emissions for basic graph operations. Graph-Massivizer gathers an interdisciplinary group of twelve partners from eight countries, covering four academic universities, two applied research centres, one HPC centre, two SMEs and two large enterprises. It leverages the world-leading roles of European researchers in graph processing and serverless computing and uses leadership-class European infrastructure in the computing continuum.

Jesús Aguilar Armijo (Alpen-Adria-Universität Klagenfurt), Christian Timmerer (Alpen-Adria-Universität Klagenfurt) and Hermann Hellwagner (Alpen-Adria-Universität Klagenfurt)

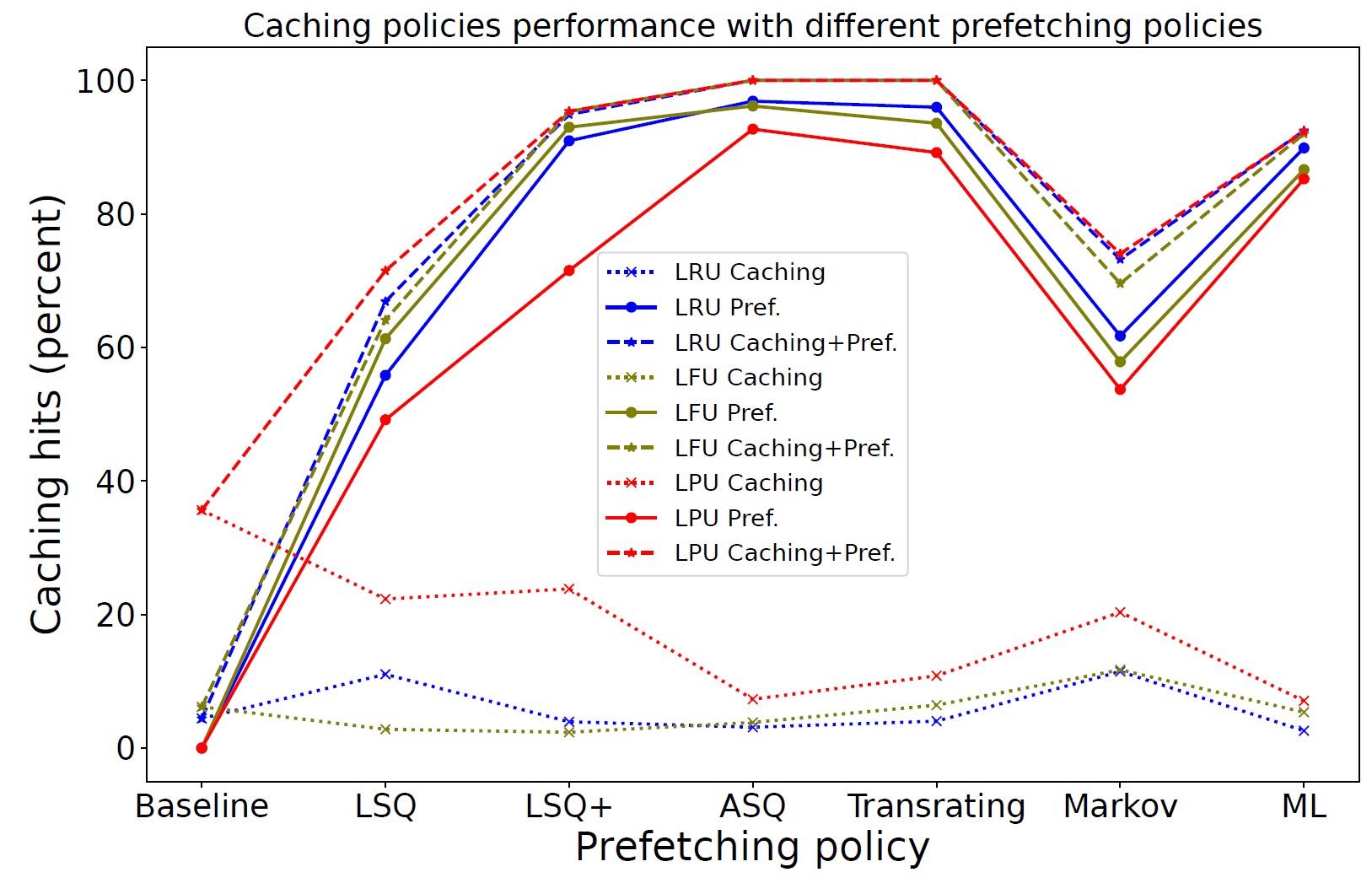

Abstract: Multi-access Edge Computing (MEC) is a new paradigm that brings storage and computing close to the clients. MEC enables the deployment of complex network-assisted mechanisms for video streaming that improve clients’ Quality of Experience (QoE). One of these mechanisms is segment prefetching, which transmits future video segments in advance to a location closer to the client so that content can be served with lower latency. In this work, for HAS-based (HTTP Adaptive Streaming) video streaming and specifically considering a cellular (e.g., 5G) network edge, we present our approach Segment Prefetching and Caching at the Edge for Adaptive Video Streaming (SPACE). We propose and analyze different segment prefetching policies that differ in resource utilization, player and radio metrics needed, and deployment complexity. This variety of policies can dynamically adapt to the network’s current conditions and the service provider’s needs. We present segment prefetching policies based on diverse approaches and techniques: past segment requests, segment transrating (i.e., reducing segment bitrate/quality), Markov prediction model, machine learning to predict future segment requests, and super-resolution. We study their performance and feasibility using metrics such as QoE characteristics, computing times, prefetching hits, and link bitrate consumption. We analyze and discuss which segment prefetching policy is better under which circumstances, as well as the influence of the client-side Adaptive Bit Rate (ABR) algorithm and the set of available representations (“bitrate ladder”) in segment prefetching. Moreover, we examine the impact on segment prefetching of different caching policies for (pre-)fetched segments, including Least Recently Used (LRU), Least Frequently Used (LFU), and our proposed popularity-based caching policy Least Popular Used (LPU).

Keywords: Adaptive video streaming, content delivery, HAS, edge computing, cellular network edge, MEC, segment prefetching, segment caching.
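For readers unfamiliar with the two baseline caching policies mentioned in the abstract, the following is a minimal sketch of LRU and LFU eviction for cached segments. The class names, the string segment keys, and the choice to count an insertion as one use in the LFU variant are assumptions made for illustration; this is not the SPACE implementation, and the authors' LPU policy is not shown because the abstract does not define it.

```python
from collections import Counter, OrderedDict
from typing import Optional


class LRUSegmentCache:
    """Evicts the segment that was used least recently."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key: str, segment: bytes) -> None:
        self._items[key] = segment
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the oldest entry


class LFUSegmentCache:
    """Evicts the segment with the fewest recorded uses."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._items: dict = {}
        self._uses: Counter = Counter()

    def get(self, key: str) -> Optional[bytes]:
        if key not in self._items:
            return None
        self._uses[key] += 1
        return self._items[key]

    def put(self, key: str, segment: bytes) -> None:
        if key not in self._items and len(self._items) >= self.capacity:
            # Ties are broken in favour of evicting the oldest insertion.
            victim = min(self._items, key=lambda k: self._uses[k])
            del self._items[victim]
            del self._uses[victim]
        self._items[key] = segment
        self._uses[key] += 1  # assumption: an insertion counts as one use
```

A prefetching policy would call `put` for segments fetched ahead of time and `get` when a client request arrives; a `None` result corresponds to a cache miss that has to be served from the origin.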

Alpen-Adria Universität Klagenfurt, Institute of Information Technology; Chinese Academy of Sciences, Institute of Automation; Johannes-Kepler-Universität Linz, Intelligent Transport Systems- Sustainable Transport Logistics 4.0; Logoplan – Logistik, Verkehrs und Umweltschutz Consulting GmbH; Intact GmbH; Chinese Academy of Sciences, Institute of Computing Technology

Title: Boosting the Impact of Extreme and Sustainable Graph Processing for Urgent Societal Challenges in Europe

The First Workshop on Serverless, Extreme-Scale, and Sustainable Graph Processing Systems (GraphSys), Co-located with ICPE 2023, April 15-19, Coimbra, Portugal (https://sites.google.com/view/graphsys23/home)

Authors: Nuria de Lama Sanchez (International Data Corporation, Madrid, Spain), Peter Haase (metaphacts GmbH, Walldorf, Germany), Dumitru Roman (SINTEF AS), and Radu Prodan (University of Klagenfurt, Klagenfurt, Austria)

Abstract: We explore the potential of the Graph-Massivizer project, funded by the Horizon Europe research and innovation program of the European Union, to boost the impact of extreme and sustainable graph processing for mitigating existing urgent societal challenges. Current graph processing platforms do not support diverse workloads, models, languages, and algebraic frameworks. Existing specialized platforms are difficult for non-experts to use and suffer from limited portability and interoperability, leading to redundant efforts and inefficient resource and energy consumption due to vendor and even platform lock-in. While synthetic data emerged as an invaluable resource overshadowing actual data for developing robust artificial intelligence analytics, graph generation remains a challenge due to extreme dimensionality and complexity. On the European scale, this practice is unsustainable and, thus, threatens the possibility of creating a climate-neutral and sustainable economy based on graph data. Making graph processing sustainable is essential but needs credible evidence. The grand vision of the Graph-Massivizer project is a technological solution, coupled with field experiments and experience-sharing, for high-performance and sustainable graph processing of extreme data with a proper response for any need and organizational size by 2030.

Title: Large-scale graph processing and simulation with serverless workflows in federated FaaS

The First Workshop on Serverless, Extreme-Scale, and Sustainable Graph Processing Systems (GraphSys), Co-located with ICPE 2023, April 15-19, Coimbra, Portugal (https://sites.google.com/view/graphsys23/home)

Authors: Sashko Ristov (Universität Innsbruck, Austria), Reza Farahani (Alpen-Adria-Universität Klagenfurt, Austria), Radu Prodan (Alpen-Adria-Universität Klagenfurt, Austria)

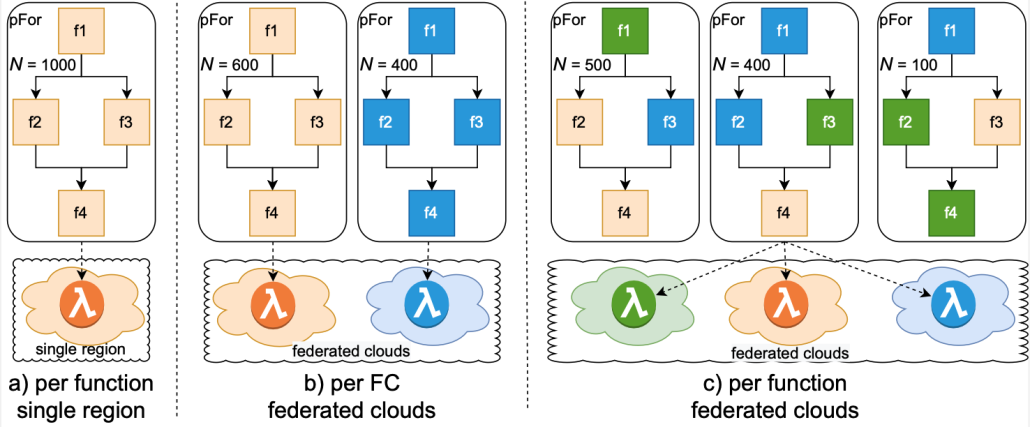

Abstract: Serverless computing offers a cheap and easy way to code lightweight functions that can be invoked by events to perform simple tasks. For more complicated processing, multiple serverless functions can be orchestrated as a directed acyclic graph to form a serverless workflow or function choreography (FC). Although all top cloud providers offer FC systems and many open-source FC systems exist, they focus on describing the data flow and control flow between the serverless functions of the FC and rarely consider the data being processed, which is often in the form of a graph. In this paper, we review the support for graph processing in existing serverless workflow management systems, detect gaps, and recommend future directions for large-scale graph processing with serverless computing.
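The idea of orchestrating functions as a directed acyclic graph can be illustrated with a small local sketch. It uses the `graphlib.TopologicalSorter` class from the Python standard library (Python 3.9+) to run each function only after its predecessors have finished. The `run_workflow` helper, the function names, and the tiny edge list are hypothetical and run in a single process; real FC systems invoke remote functions and are described in their own workflow languages.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List


def run_workflow(
    functions: Dict[str, Callable[[List[Any]], Any]],
    dependencies: Dict[str, List[str]],
    initial_input: Any,
) -> Dict[str, Any]:
    """Run functions in dependency order and return every function's output.

    `dependencies` maps a function name to the ordered list of functions
    whose outputs it consumes. Functions without predecessors receive
    `initial_input` as their only input.
    """
    results: Dict[str, Any] = {}
    for name in TopologicalSorter(dependencies).static_order():
        predecessors = dependencies.get(name, [])
        inputs = [results[p] for p in predecessors] or [initial_input]
        results[name] = functions[name](inputs)
    return results


# Hypothetical workflow over a small graph given as an edge list.
functions = {
    "ingest": lambda inputs: inputs[0],
    "count_edges": lambda inputs: len(inputs[0]),
    "count_nodes": lambda inputs: len({v for edge in inputs[0] for v in edge}),
    "report": lambda inputs: {"edges": inputs[0], "nodes": inputs[1]},
}
dependencies = {
    "count_edges": ["ingest"],
    "count_nodes": ["ingest"],
    "report": ["count_edges", "count_nodes"],
}

outputs = run_workflow(functions, dependencies, [(1, 2), (2, 3), (1, 3)])
print(outputs["report"])  # {'edges': 3, 'nodes': 3}
```

Note that the workflow description here only captures which function feeds which; the graph being processed is passed around as an opaque value, which mirrors the gap the abstract points out.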