Josef Hammer presented the paper “Distributed On-Demand Deployment for Transparent Access to 5G Edge Computing Services” at the 5th Workshop on Parallel AI and Systems for the Edge (PAISE 2023). The workshop was held in conjunction with the 37th IEEE International Parallel & Distributed Processing Symposium (IPDPS 2023) in St. Petersburg, Florida, USA.

Authors: Josef Hammer and Hermann Hellwagner, Alpen-Adria-Universität Klagenfurt

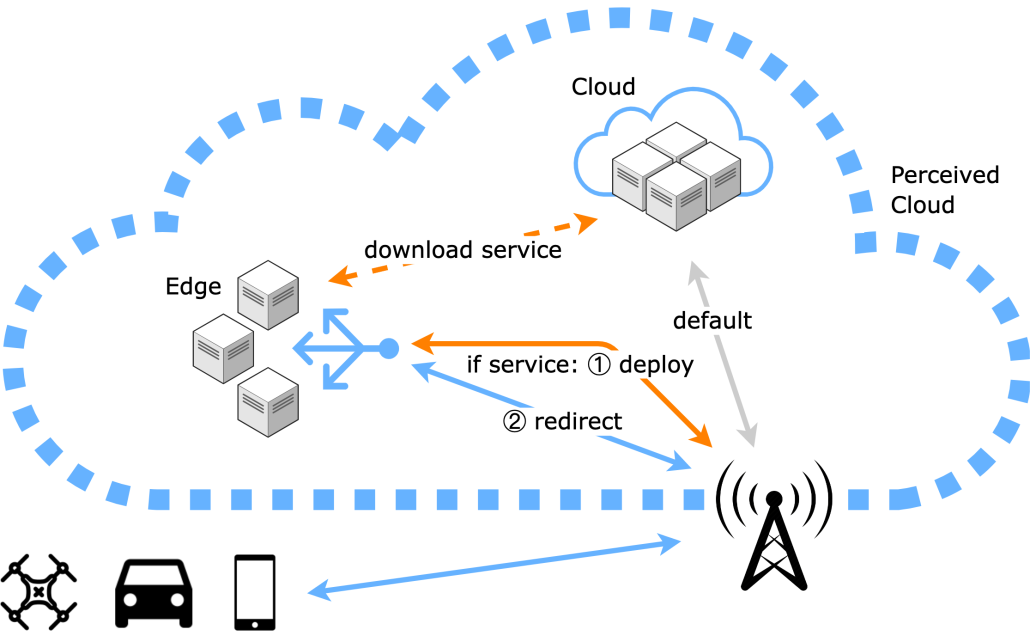

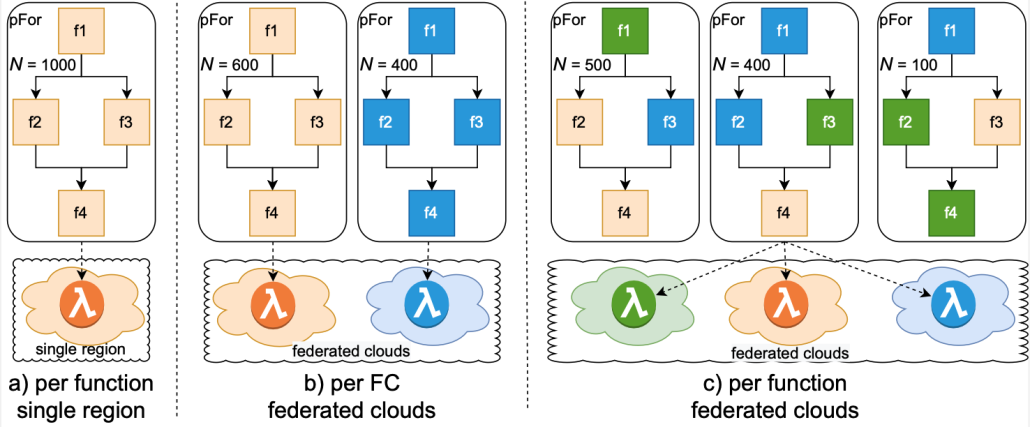

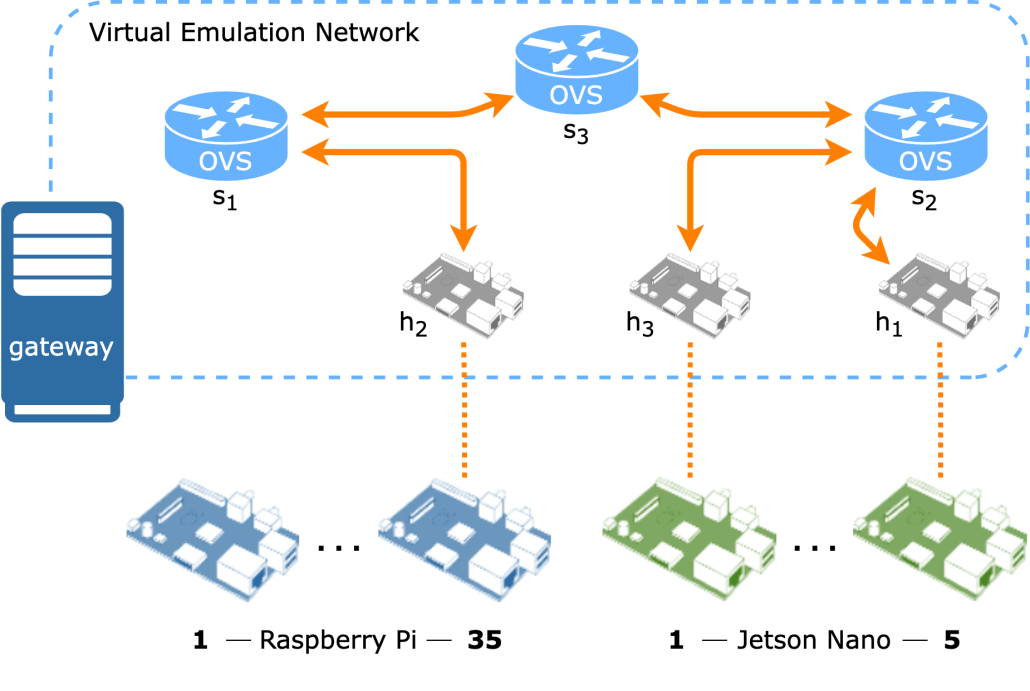

Abstract: Multi-access Edge Computing (MEC) is a central piece of 5G telecommunication systems and is essential to satisfy the challenging low-latency demands of future applications. MEC provides a cloud computing platform at the edge of the radio access network. Our previous publications argue that edge computing should be transparent to clients, leveraging Software-Defined Networking (SDN). While we introduced a solution to implement such a transparent approach, one question remained: how should user requests be handled for a service that is not yet running in a nearby edge cluster? One advantage of the transparent edge is that the initial request could be processed in the cloud. However, this paper argues that on-demand deployment might be fast enough for many services, even for the first request. We present an SDN controller that automatically deploys an application container in a nearby edge cluster if no instance is running yet. In the meantime, the user’s request is either forwarded to another (nearby) edge cluster or held until it can be forwarded immediately to the newly started instance. Our performance evaluations on a real edge/fog testbed show that the waiting time for the initial request (e.g., for an nginx-based service) can be as low as 0.5 seconds, which is satisfactory for many applications.
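The abstract describes the controller's behaviour only at a high level. The Python sketch below illustrates that decision logic under our own assumptions and is not the authors' implementation: `EdgeCluster`, `route_request`, the integer `distance` metric, and the `wait_for_local` flag are hypothetical names, and container start-up is simulated with `asyncio.sleep` instead of a real orchestrator or SDN flow rules. It shows the two options named in the abstract: forwarding the request to another cluster that already runs the service, or holding it until the on-demand deployment in the nearest cluster completes.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class EdgeCluster:
    """Hypothetical model of an edge cluster (illustrative only)."""
    name: str
    distance: int                       # assumed "nearness" metric, lower = closer
    deploy_delay: float = 0.5           # simulated container start-up time (seconds)
    running: set = field(default_factory=set)
    pending: dict = field(default_factory=dict)

    async def _start(self, service: str) -> None:
        # Stand-in for real orchestration; just waits, then marks the service running.
        await asyncio.sleep(self.deploy_delay)
        self.running.add(service)

    def ensure_deployed(self, service: str) -> asyncio.Task:
        """Start a deployment once; concurrent requests share the same task."""
        if service not in self.pending:
            self.pending[service] = asyncio.create_task(self._start(service))
        return self.pending[service]


async def route_request(service: str, clusters: list, wait_for_local: bool) -> str:
    """Return the name of the cluster that should serve this request."""
    nearest = min(clusters, key=lambda c: c.distance)
    if service in nearest.running:
        return nearest.name
    # No instance nearby yet: trigger an on-demand deployment in the nearest cluster.
    deployment = nearest.ensure_deployed(service)
    if not wait_for_local:
        others = sorted(
            (c for c in clusters if c is not nearest and service in c.running),
            key=lambda c: c.distance,
        )
        if others:
            # Forward to the closest other cluster while the deployment proceeds.
            return others[0].name
    # Otherwise hold the request until the new instance is up, then forward it.
    await deployment
    return nearest.name


async def demo():
    near = EdgeCluster("edge-near", distance=1, deploy_delay=0.05)
    far = EdgeCluster("edge-far", distance=2, running={"nginx"})
    first = await route_request("nginx", [near, far], wait_for_local=False)
    second = await route_request("nginx", [near, far], wait_for_local=True)
    third = await route_request("nginx", [near, far], wait_for_local=False)
    return first, second, third


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In this toy run, the first request is forwarded to `edge-far` while `nginx` is being deployed in `edge-near`; the second request waits for that deployment, and later requests are served locally. Sharing one deployment task per service is a design choice of this sketch, so that a burst of initial requests does not start several containers.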

For more information about the research, visit the website: https://edge.itec.aau.at/.