Paper accepted: Content-aware Reference Frame Synthesis for Enhanced Inter Prediction

Authors: Mohammad Ghasempour (AAU, Austria), Yiying Wei (AAU, Austria), Hadi Amirpour (AAU, Austria), and Christian Timmerer (AAU, Austria)

Venue: European Signal Processing Conference (EUSIPCO)

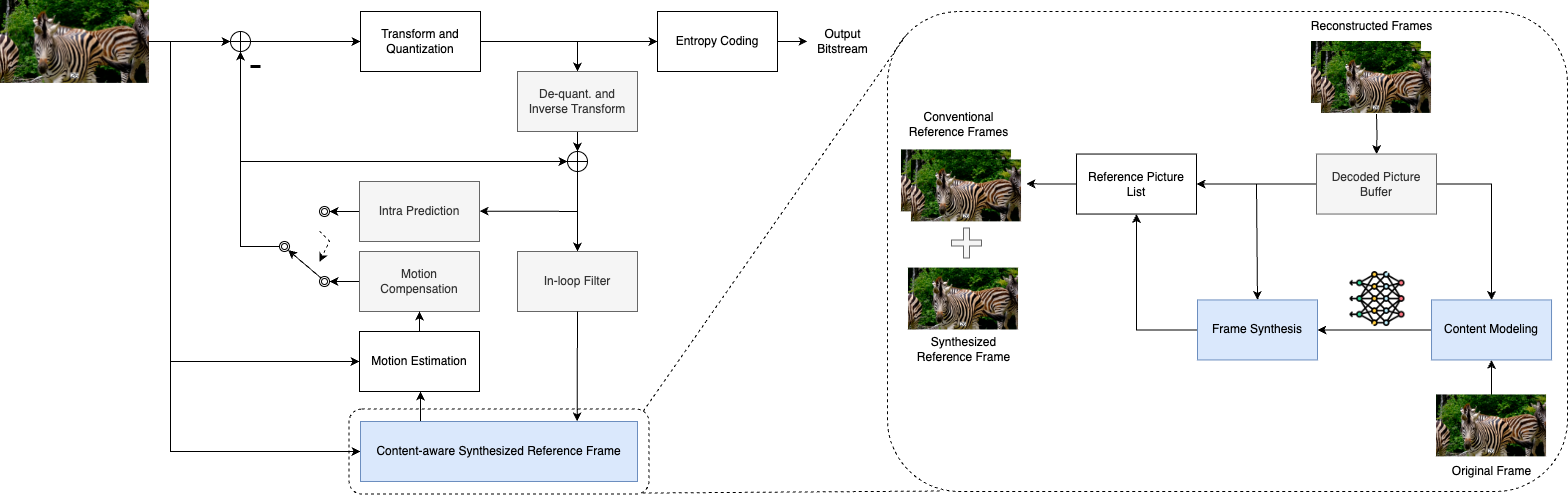

Abstract: Video coding relies heavily on reducing spatial and temporal redundancy to enable efficient transmission. To tackle temporal redundancy, each video frame is predicted from previously encoded frames, known as reference frames. The quality of this prediction depends strongly on the quality of the reference frames. Recent advances in machine learning have motivated the exploration of frame synthesis to generate high-quality reference frames. However, the efficacy of such models depends on training with content similar to that encountered during usage, which is challenging due to the diverse nature of video data. This paper introduces a content-aware reference frame synthesis framework to enhance inter-prediction efficiency. Unlike conventional approaches that rely on pre-trained models, the proposed framework optimizes a deep learning model for each content by fine-tuning only the last layer of the model, requiring the transmission of only a few kilobytes of additional information to the decoder. Experimental results show that the proposed framework yields bitrate savings of 12.76%, outperforming its pre-trained counterpart, which achieves only 5.13% bitrate savings.
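
The idea of adapting only the last layer per content can be illustrated with a toy sketch. This is not the authors' model or training procedure: the `frozen_features` function, the quadratic target, the learning rate, and the float32 serialization are all assumptions made for illustration. It only shows the general pattern: the earlier layers stay fixed, the last-layer weights are fitted to one piece of content, and only those weights are packed for the decoder.

```python
import random
import struct


def frozen_features(x):
    """Hypothetical stand-in for the frozen, pre-trained layers."""
    return [x, x * x, 1.0]


def predict(weights, x):
    """Last layer: a linear combination of the frozen features."""
    return sum(w * f for w, f in zip(weights, frozen_features(x)))


def mse(weights, samples):
    """Mean squared error of the model on (input, target) pairs."""
    return sum((predict(weights, x) - y) ** 2 for x, y in samples) / len(samples)


def fine_tune_last_layer(weights, samples, lr=0.2, steps=500):
    """Plain gradient descent that updates the last-layer weights only."""
    w = list(weights)
    n = len(samples)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in samples:
            feats = frozen_features(x)
            err = sum(wi * fi for wi, fi in zip(w, feats)) - y
            for i, fi in enumerate(feats):
                grad[i] += 2.0 * err * fi / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def serialize_last_layer(weights):
    """Pack the tuned weights as little-endian float32 (assumed format)."""
    return struct.pack(f"<{len(weights)}f", *weights)


# Synthetic "content": targets follow a curve the generic weights do not match.
rng = random.Random(0)
inputs = [rng.uniform(-1.0, 1.0) for _ in range(64)]
samples = [(x, 0.5 * x + 0.8 * x * x - 0.2) for x in inputs]

pretrained = [1.0, 0.0, 0.0]  # generic last-layer weights (assumed)
tuned = fine_tune_last_layer(pretrained, samples)
payload = serialize_last_layer(tuned)

print("error before:", mse(pretrained, samples))
print("error after: ", mse(tuned, samples))
print("payload size in bytes:", len(payload))
```

In this toy setting the payload is a handful of bytes because the last layer has three weights. In a real network the last layer is much larger, which is consistent with the few kilobytes of side information mentioned in the abstract.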