SVD: Spatial Video Dataset

ACM Multimedia 2025

October 27 – October 31, 2025

Dublin, Ireland


MH Izadimehr, Milad Ghanbari, Guodong Chen, Wei Zhou, Xiaoshuai Hao, Mallesham Dasari, Christian Timmerer, Hadi Amirpour

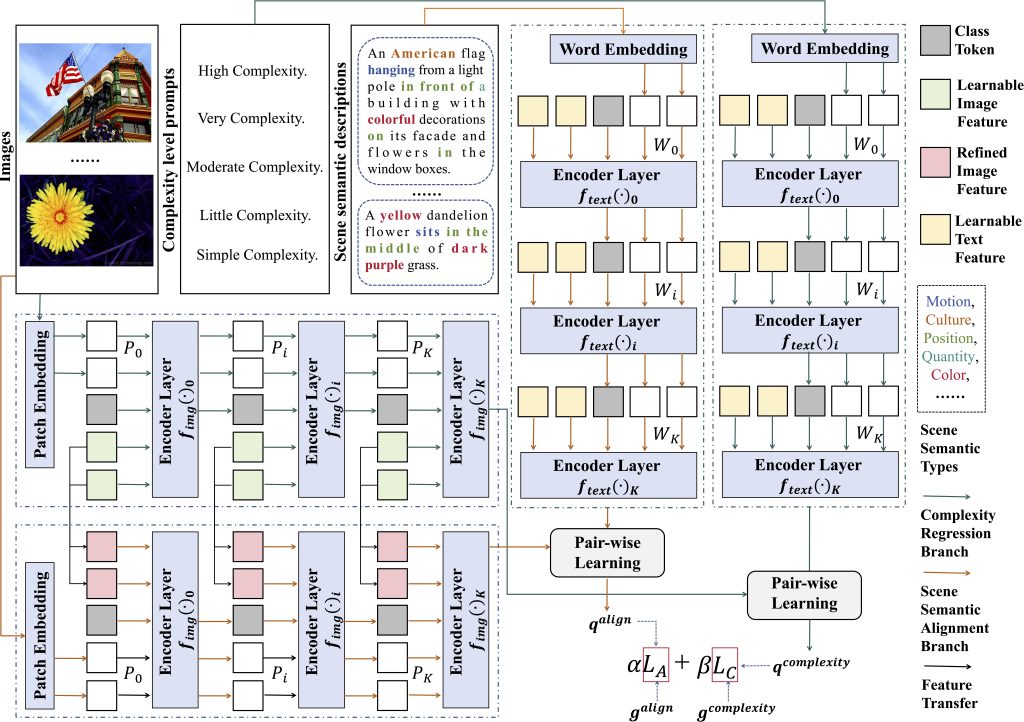

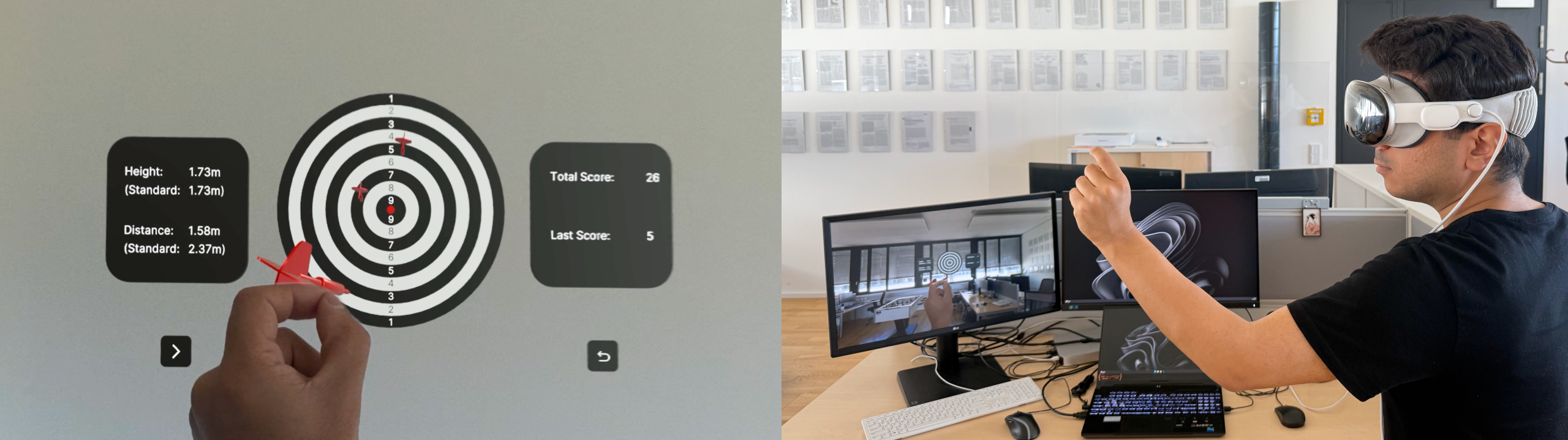

Abstract: Stereoscopic video has long been the subject of research because it delivers immersive three-dimensional content for a wide range of applications, from virtual and augmented reality to advanced human–computer interaction. The dual-view format inherently provides binocular disparity cues that enhance depth perception and realism, making it indispensable in fields such as telepresence, 3D mapping, and robotic vision. Until recently, however, end-to-end pipelines for capturing, encoding, and viewing high-quality 3D video were neither widely accessible nor optimized for consumer-grade devices. Today, smartphones such as the iPhone Pro and head-mounted displays (HMDs) such as the Apple Vision Pro (AVP) offer built-in support for stereoscopic video capture and hardware-accelerated encoding. The resulting videos play back seamlessly on devices like the AVP and Meta Quest 3 with minimal user intervention. Apple refers to this streamlined workflow as spatial video. Making the full stereoscopic video pipeline available to everyday users has enabled new applications. Despite these advances, there is still a notable lack of publicly available datasets that cover the complete spatial video pipeline on consumer platforms, which hinders reproducibility and the comparative evaluation of emerging algorithms.

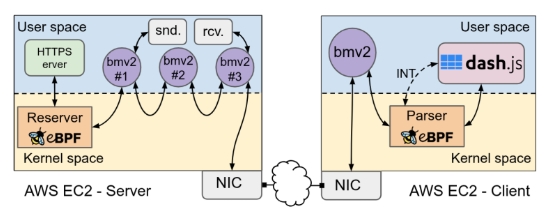

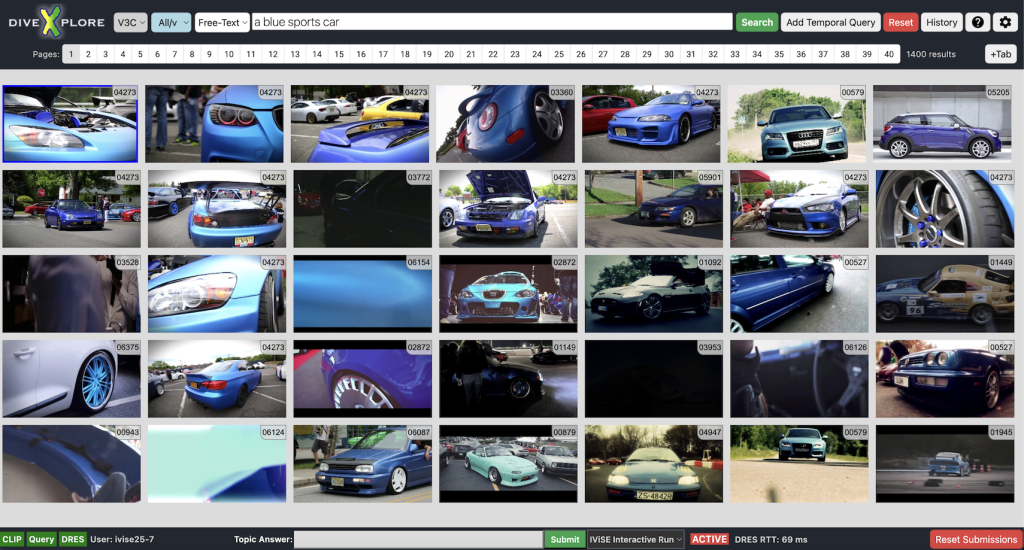

In this paper, we introduce SVD, a spatial video dataset comprising 300 five-second video sequences: 150 captured with an iPhone Pro and 150 with an AVP. Additionally, 10 longer videos, each at least 2 minutes long, were recorded. SVD is publicly released under an open-source license to facilitate research in codec performance evaluation, subjective and objective Quality of Experience (QoE) assessment, depth-based computer vision, and stereoscopic video streaming. It also supports other emerging 3D applications such as neural rendering and volumetric capture. The dataset is available at https://cd-athena.github.io/SVD/.