Paper accepted @ ICASSP 2026

2026 IEEE International Conference on Acoustics, Speech, and Signal Processing

4–8 May 2026

Barcelona, Spain

Paper title: Dual-guided Generative Frame Interpolation

Yiying Wei (AAU, Austria), Hadi Amirpour (AAU, Austria), and Christian Timmerer (AAU, Austria)

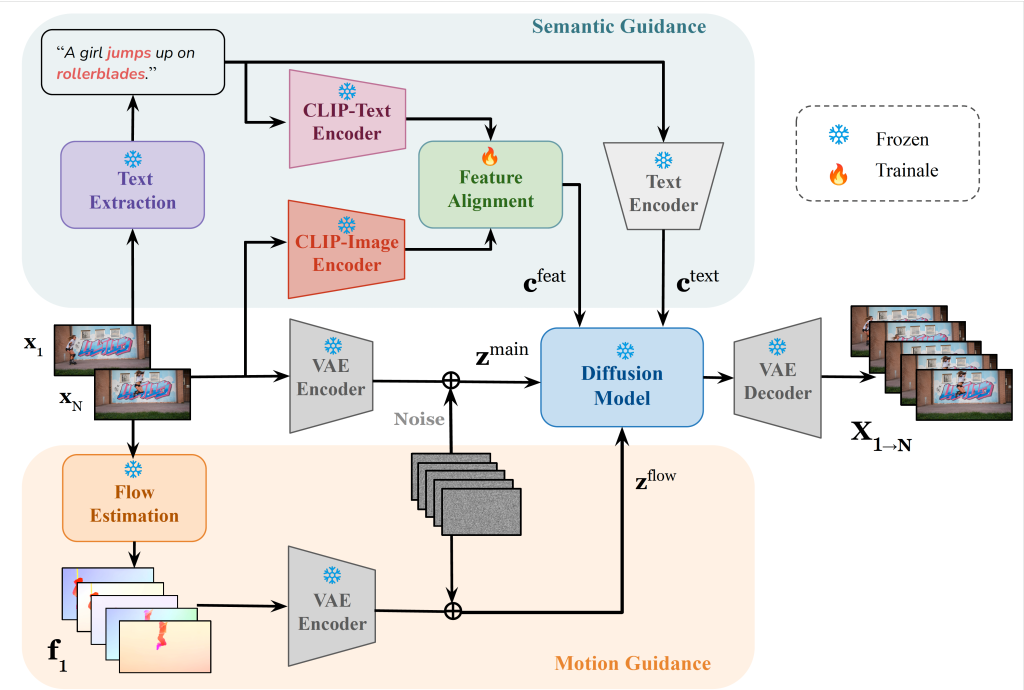

Abstract: Video frame interpolation (VFI) aims to generate intermediate frames between given keyframes to enhance temporal resolution and visual smoothness. While conventional optical flow–based methods and recent generative approaches achieve promising results, they often struggle with large displacements, failing to maintain temporal coherence and semantic consistency. In this work, we propose dual-guided generative frame interpolation (DGFI), a framework that integrates semantic guidance from vision-language models and flow guidance into a pre-trained diffusion-based image-to-video (I2V) generator. Specifically, DGFI extracts textual descriptions and injects multimodal embeddings to capture high-level semantics, while estimated motion guidance provides smooth transitions. Experiments on public datasets demonstrate the effectiveness of our dual-guided method over state-of-the-art approaches.