IEEE TCSVT: DeepStream: Video Streaming Enhancements using Compressed Deep Neural Networks

Authors: Hadi Amirpour (Alpen-Adria-Universität Klagenfurt, Austria), Mohammad Ghanbari (University of Essex, UK), and Christian Timmerer (Alpen-Adria-Universität Klagenfurt, Austria)

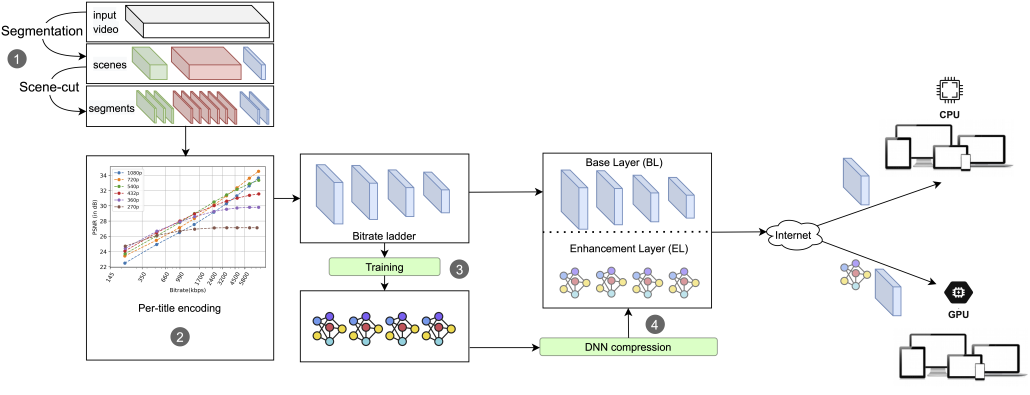

Abstract: In HTTP Adaptive Streaming (HAS), each video is divided into smaller segments, and each segment is encoded at multiple pre-defined bitrates to construct a bitrate ladder. To optimize bitrate ladders, per-title encoding approaches encode each segment at various bitrates and resolutions to determine the convex hull. From the convex hull, an optimized bitrate ladder is constructed, resulting in an increased Quality of Experience (QoE) for end-users. As deep learning-based video enhancement approaches become ever more efficient, they are increasingly employed at the client side to increase the QoE, specifically when GPU capabilities are available. Therefore, scalable approaches are needed that support end-user devices with either CPU-only or GPU capabilities (denoted as CPU-only and GPU-available end-users, respectively) as a new dimension of a bitrate ladder.
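
As an illustration of the convex-hull step mentioned above, the following is a minimal sketch, not the authors' implementation. It assumes each trial encode is summarized by a resolution, a bitrate, and a quality score (for example VMAF); the `Encode` type, the function names, and the sample numbers are all hypothetical. It keeps the encodes on the upper convex hull of the bitrate-quality plane, which is one common way to read "determine the convex hull" in per-title encoding.

```python
from typing import List, NamedTuple


class Encode(NamedTuple):
    """One trial encode of a segment (hypothetical structure)."""
    resolution: str
    bitrate_kbps: float
    quality: float  # e.g. a VMAF or PSNR score


def _cross(o: Encode, a: Encode, b: Encode) -> float:
    """Cross product of vectors o->a and o->b in the (bitrate, quality) plane."""
    return ((a.bitrate_kbps - o.bitrate_kbps) * (b.quality - o.quality)
            - (a.quality - o.quality) * (b.bitrate_kbps - o.bitrate_kbps))


def convex_hull_ladder(encodes: List[Encode]) -> List[Encode]:
    """Return the encodes on the upper convex hull, ordered by bitrate."""
    # At equal bitrate, visit the higher-quality encode first.
    pts = sorted(encodes, key=lambda e: (e.bitrate_kbps, -e.quality))
    hull: List[Encode] = []
    for p in pts:
        # Drop the last hull point while it lies on or below the line
        # from its predecessor to the new point (monotone-chain upper hull).
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    # Keep only points where spending more bitrate actually raises quality.
    ladder: List[Encode] = []
    for p in hull:
        if not ladder or p.quality > ladder[-1].quality:
            ladder.append(p)
    return ladder


# Made-up measurements for a single segment.
trial_encodes = [
    Encode("540p", 500, 60), Encode("1080p", 500, 50),
    Encode("540p", 1000, 70), Encode("1080p", 1000, 75),
    Encode("540p", 2000, 74), Encode("1080p", 2000, 90),
    Encode("1080p", 4000, 95),
]
ladder = convex_hull_ladder(trial_encodes)
```

With these sample values, the low resolution wins only at the lowest bitrate, and every higher rung uses 1080p. A production ladder would additionally limit the number of rungs and the spacing between them, which this sketch does not attempt.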

To address this need, we propose DeepStream, a scalable content-aware per-title encoding approach to support both CPU-only and GPU-available end-users. (i) To support backward compatibility, DeepStream constructs a bitrate ladder based on any existing per-title encoding approach. Therefore, the video content will be provided for legacy end-user devices with CPU-only capabilities as a base layer (BL). (ii) For high-end end-user devices with GPU capabilities, an enhancement layer (EL) is added on top of the base layer comprising lightweight video super-resolution deep neural networks (DNNs) for each bitrate-resolution pair of the bitrate ladder. A content-aware video super-resolution approach leads to higher video quality, but at the cost of bitrate overhead, because the trained DNNs must be streamed to the client alongside the video. To reduce this overhead, the DNNs are compressed with DeepCABAC, a context-adaptive binary arithmetic coding scheme for DNN compression. Furthermore, the similarities among (i) segments within a scene and (ii) frames within a segment are exploited to reduce the training costs of the DNNs.
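
To make the base-layer and enhancement-layer trade-off concrete, here is a minimal sketch of how a client might account for the extra bits of the enhancement layer. It is an assumption for illustration only: the abstract does not describe the client-side selection logic, and the `Representation` type, the `dnn_kbits` field, the per-segment amortization of the DNN size, and the 4-second segment duration are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Representation:
    """One rung of the ladder plus its optional enhancement layer (hypothetical)."""
    resolution: str
    video_kbps: float  # base-layer (BL) video bitrate
    dnn_kbits: float   # size of the compressed super-resolution DNN sent per segment


def el_overhead_kbps(rep: Representation, segment_s: float) -> float:
    """Enhancement-layer overhead, assuming one DNN download per segment."""
    return rep.dnn_kbits / segment_s


def pick_representation(ladder: Sequence[Representation],
                        bandwidth_kbps: float,
                        has_gpu: bool,
                        segment_s: float = 4.0) -> Optional[Representation]:
    """Pick the highest-bitrate rung whose total cost fits the bandwidth.

    CPU-only clients pay for the base layer only; GPU-available clients
    also pay for the enhancement layer (the compressed DNN).
    """
    best: Optional[Representation] = None
    for rep in ladder:
        cost = rep.video_kbps
        if has_gpu:
            cost += el_overhead_kbps(rep, segment_s)
        if cost <= bandwidth_kbps and (best is None or rep.video_kbps > best.video_kbps):
            best = rep
    return best


# Made-up ladder: DNN sizes are illustrative, not measured values.
example_ladder = [
    Representation("540p", 500, 80),
    Representation("1080p", 1000, 160),
    Representation("1080p", 2000, 160),
]
cpu_choice = pick_representation(example_ladder, 2020, has_gpu=False)
gpu_choice = pick_representation(example_ladder, 2020, has_gpu=True)
```

In this toy setup the CPU-only client takes the 2000 kbps rung, while the GPU-available client falls back to the 1000 kbps rung because the DNN overhead pushes the top rung over budget. The point of compressing the DNNs is to keep that overhead small enough that the quality gain from super-resolution outweighs it.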

Experimental results show bitrate savings of 34% and 36% at the same PSNR and VMAF, respectively, for GPU-available end-users, while CPU-only end-users receive the desired video content as usual.

Keywords—HTTP adaptive streaming, per-title encoding, video streaming, video super-resolution.