5-19 July, 2024, Niagara Falls, Canada

The first workshop on Surpassing Latency Limits in Adaptive Live Video Streaming (LIVES 2024) aims to bring together researchers and developers to address the data-intensive processing requirements and QoE challenges of live video streaming applications by leveraging heuristic and learning-based approaches.

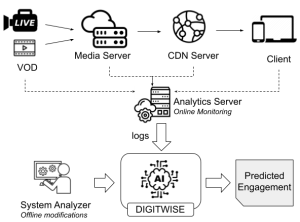

Delivering video content from a video server to viewers over the Internet is a time-consuming step in the streaming workflow and must be handled carefully to offer an uninterrupted streaming experience. The end-to-end latency, i.e., from camera capture to the user device, is particularly problematic for live streaming. Some streaming-based applications, such as virtual events, esports, online learning, gaming, webinars, and all-hands meetings, require low latency for their operation. Video streaming is ubiquitous across many applications, devices, and fields. Delivering high Quality of Experience (QoE) to viewers is crucial, yet the large amount of data that must be processed to satisfy such QoE is beyond what manual, human-driven processing can handle. Satisfying the requirements of low-latency video streaming applications requires the entire streaming workflow to be optimized and streamlined together, including media provisioning (capturing, encoding, packaging, and ingesting to the origin server), media delivery (from the origin to the CDN and from the CDN to the end users), and media playback (the end-user video player).
