Title: FaaScinating Resilience for Serverless Function Choreographies in Federated Clouds

Authors: Sasko Ristov, Dragi Kimovski, Thomas Fahringer

Abstract: Cloud applications often benefit from deployment on the serverless Function-as-a-Service (FaaS) model, which can instantly spawn numerous functions and charges users for the period during which the functions run. The greatest benefit is achieved when functions are orchestrated in workflows, or function choreographies (FCs). However, many provider limitations specific to FaaS, such as maximum concurrency or duration, often increase the failure rate, which can severely hamper the execution of entire FCs. Current support for resilience is often limited to function retries or try-catch, which are applicable within the same cloud region only. To overcome these limitations, we introduce rAFCL, a middleware platform that maintains the reliability of complex FCs in federated clouds. To support resilient FC execution under rAFCL, our model creates an alternative strategy for each function based on the required availability specified by the user. Alternative strategies are not restricted to the same cloud region, but may contain alternative functions across five providers, invoked concurrently in a single alternative plan or executed one after another in multiple alternative plans. With this approach, rAFCL offers flexibility in terms of the cost-performance trade-off. We evaluated rAFCL by running three real-life applications across three cloud providers. Experimental results demonstrated that rAFCL outperforms the resilience of AWS Step Functions, increasing the success rate of the entire FC by 53.45%, while invoking only 3.94% more functions with zero wasted function invocations. rAFCL significantly improves the availability of entire FCs to almost 1 and survives even after massive failures of alternative functions.
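The relationship between alternative functions, alternative plans, and the user's required availability can be illustrated with a minimal sketch. It is not the rAFCL model itself: it assumes that function failures are independent, and the helper names (`plan_availability`, `strategy_availability`, `build_plan`) and the greedy selection rule are hypothetical choices made only for this example.

```python
from math import prod


def plan_availability(availabilities):
    """Probability that at least one concurrently invoked function succeeds.

    Assumes independent failures (an assumption of this sketch).
    """
    return 1.0 - prod(1.0 - a for a in availabilities)


def strategy_availability(plans):
    """Plans are tried one after another; the strategy fails only if all plans fail."""
    return 1.0 - prod(1.0 - plan_availability(p) for p in plans)


def build_plan(candidates, required):
    """Greedily add the most available candidates until `required` is reached.

    Returns the chosen availabilities, or None if the target cannot be met.
    This greedy rule is a hypothetical stand-in, not the rAFCL algorithm.
    """
    chosen = []
    for a in sorted(candidates, reverse=True):
        chosen.append(a)
        if plan_availability(chosen) >= required:
            return chosen
    return None
```

Under these assumptions, two alternative functions that each succeed with probability 0.9 give a combined availability of about 0.99 whether they are invoked concurrently in one plan or placed in two consecutive plans; the difference lies in cost and latency, since the concurrent plan always pays for both invocations while the sequential strategy invokes the second function only after the first fails.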

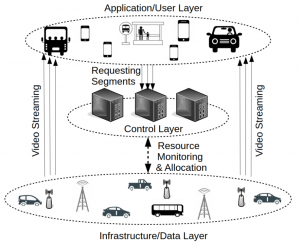

We design QoCoVi in two SDN-based operational modes: (i) centralized and (ii) distributed. In centralized mode, we can obtain a suitable solution by introducing a mixed-integer linear programming (MILP) optimization model that can be executed on the SDN controller. However, to cope with the computational overhead of the centralized approach in real IoV scenarios, we propose a fully distributed version of QoCoVi based on the proximal Jacobi alternating direction method of multipliers (ProxJ-ADMM) technique. The effectiveness of the proposed approach is confirmed through emulation with Mininet-WiFi in different scenarios.
