Authors: Reza Farahani (AAU, Austria) and Vignesh V Menon (Fraunhofer HHI, Berlin, Germany)

Venue: The 12th European Workshop on Visual Information Processing (EUVIP 2024)

8-11 September 2024, Geneva, Switzerland

The 15th ACM Multimedia Systems Conference (MMSys 2024) was held from 15-18 April 2024 in Bari, Italy. MMSys 2024 provided a forum for leading researchers from academia and industry to present and share their latest findings in multimedia systems.

Christian Timmerer, Mathias Lux, Samira Afzal, Christian Bauer, Daniele Lorenzi, Emanuele Artioli, Mohammad Ghasempour, Shivi Vats, and Armin Lachini participated in the conference and presented contributions from ATHENA, GAIA, and SPIRIT.

On the Organizing Committee, Christian Timmerer served as TPC Chair and Farzad Tashtarian as Proceedings Chair.

On Friday, 12 April 2024, seven representatives of the Pioneers of Game Development Austria (https://pgda.at/) visited the University of Klagenfurt for the event Press Start: Your Journey into Game Development, organised by the master’s programme Game Studies and Engineering (GSE). The PGDA members, video game developers from all over Austria, gave insightful talks, offered feedback on student game projects, and provided personal support during a mentoring café. Attracting about 50 GSE students, academic staff, senate members, and many prospective students, the event can be considered the most successful in recent GSE history.

When Tom Tuček talks about the world, he always has to specify: does he mean the real world or virtual worlds? The doctoral student at the Institute of Information Technology is currently working on digital humans, that is, virtual characters we encounter in video games, for example. Tom Tuček wants to find out how contact with digital humans equipped with new artificial intelligence affects players.

Read the whole interview here: https://www.aau.at/blog/das-spiel-mit-dem-digitalen-menschen/

Title: DeepVCA: Deep Video Complexity Analyzer

Authors: Hadi Amirpour (AAU, Klagenfurt, Austria), Klaus Schoeffmann (AAU, Klagenfurt, Austria), Mohammad Ghanbari (University of Essex, UK), Christian Timmerer (AAU, Klagenfurt, Austria)

Abstract: Video streaming and its applications are growing rapidly, making video optimization a primary target for content providers looking to enhance their services. Enhancing the quality of videos requires the adjustment of different encoding parameters such as bitrate, resolution, and frame rate. To avoid brute-force approaches for predicting optimal encoding parameters, video complexity features are typically extracted and utilized. To predict optimal encoding parameters effectively, content providers traditionally use unsupervised feature extraction methods, such as ITU-T’s Spatial Information (SI) and Temporal Information (TI), to represent the spatial and temporal complexity of video sequences. Recently, the Video Complexity Analyzer (VCA) was introduced to extract DCT-based features that represent the complexity of a video sequence (or parts thereof). These unsupervised features, however, cannot accurately predict video encoding parameters. To address this issue, this paper introduces a novel supervised feature extraction method named DeepVCA, which extracts the spatial and temporal complexity of video sequences using deep neural networks. In this approach, the number of bits required to encode each frame in intra mode and inter mode is used as the label for spatial and temporal complexity, respectively. Initially, we benchmark various deep neural network structures to predict spatial complexity. We then leverage the similarity of the features used to predict the spatial complexity of the current frame and its previous frame to rapidly predict temporal complexity. This approach is particularly useful because temporal complexity may depend not only on the differences between two consecutive frames but also on their spatial complexity. Our proposed approach demonstrates significant improvement over unsupervised methods, especially for temporal complexity.
As an example application, we verify the effectiveness of these features in predicting the encoding bitrate and encoding time of video sequences, which are crucial tasks in video streaming. The source code and dataset are available at https://github.com/cd-athena/DeepVCA.
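For context on the unsupervised SI/TI baselines that DeepVCA is compared against, the Python sketch below computes them following the classic ITU-T P.910 definitions: SI is the maximum over frames of the spatial standard deviation of the Sobel-filtered luma plane, and TI is the maximum over consecutive frame pairs of the spatial standard deviation of the pixel-wise difference. It is a minimal, dependency-free illustration under stated assumptions (frames are equally sized nested lists of luma values, at least 3x3, Sobel is applied to interior pixels only, population standard deviation is used); it is not the VCA or DeepVCA code.

```python
import math
from statistics import pstdev
from typing import List, Sequence

Frame = Sequence[Sequence[float]]  # rows of luma (Y) values, all the same width

# 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients
SOBEL_X = ((-1, 0, 1), (-2, 0, 2), (-1, 0, 1))
SOBEL_Y = ((-1, -2, -1), (0, 0, 0), (1, 2, 1))


def sobel_magnitudes(frame: Frame) -> List[float]:
    """Gradient magnitude at every interior pixel (border pixels are skipped)."""
    h, w = len(frame), len(frame[0])
    mags = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    p = frame[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * p
                    gy += SOBEL_Y[dy][dx] * p
            mags.append(math.hypot(gx, gy))
    return mags


def spatial_information(frames: Sequence[Frame]) -> float:
    """SI: max over time of the spatial std. dev. of the Sobel-filtered frame."""
    return max(pstdev(sobel_magnitudes(f)) for f in frames)


def temporal_information(frames: Sequence[Frame]) -> float:
    """TI: max over time of the spatial std. dev. of consecutive-frame differences."""
    if len(frames) < 2:
        raise ValueError("TI needs at least two frames")
    stds = []
    for prev, cur in zip(frames, frames[1:]):
        diff = [c - p for row_c, row_p in zip(cur, prev) for c, p in zip(row_c, row_p)]
        stds.append(pstdev(diff))
    return max(stds)


if __name__ == "__main__":
    flat = [[128.0] * 6 for _ in range(6)]
    edge = [[0.0] * 3 + [255.0] * 3 for _ in range(6)]  # vertical step edge
    print(spatial_information([flat, edge]))  # positive because of the edge
    print(temporal_information([flat, edge]))
```

Note that a uniform brightness change between frames yields TI = 0 here, since the difference image is constant; TI reacts to spatially uneven change, which is one reason hand-crafted features can miss how many bits an inter-coded frame actually needs.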

Author: Emanuele Artioli

Abstract: Video streaming stands as the cornerstone of telecommunication networks, constituting over 60% of mobile data traffic as of June 2023. The paramount challenge faced by video streaming service providers is ensuring a high Quality of Experience (QoE) for users. In HTTP Adaptive Streaming (HAS), including DASH and HLS, video content is encoded at multiple quality versions, and an Adaptive Bitrate (ABR) algorithm dynamically selects among them based on network conditions. Concurrently, Artificial Intelligence (AI) is revolutionizing the industry, particularly in content recommendation and personalization. Leveraging user data and advanced algorithms, AI enhances user engagement and satisfaction, and it improves video quality through super-resolution and denoising techniques.

However, challenges persist, such as real-time processing on resource-constrained devices, the need for diverse training datasets, privacy concerns, and model interpretability. Despite these hurdles, Generative Artificial Intelligence is emerging as a transformative force. Generative AI, capable of synthesizing new data based on learned patterns, holds vast potential for video streaming: it can create realistic and immersive content, adapt in real time to individual preferences, and optimize video compression for seamless streaming in low-bandwidth conditions.

This research proposal outlines a comprehensive exploration at the intersection of advanced AI algorithms and digital entertainment, focusing on the potential of generative AI to elevate video quality, user interactivity, and the overall streaming experience. The objective is to integrate generative models into video streaming pipelines, opening new avenues toward dynamic, personalized, and visually captivating streaming experiences for viewers.
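The ABR step described in this abstract can be illustrated with a simple throughput-based rule. The Python sketch below is a generic heuristic, not the method proposed in this research: it estimates throughput as the harmonic mean of the most recent segment downloads and picks the highest rung of a hypothetical bitrate ladder that fits within a safety margin of that estimate. The ladder values, the five-sample window, and the 0.9 safety factor are illustrative assumptions.

```python
from statistics import harmonic_mean
from typing import Sequence

# Hypothetical bitrate ladder in kbps (one entry per encoded quality version)
LADDER_KBPS = [300, 750, 1500, 3000, 6000]


def select_bitrate(throughput_kbps: Sequence[float],
                   ladder_kbps: Sequence[int] = LADDER_KBPS,
                   window: int = 5,
                   safety: float = 0.9) -> int:
    """Pick the highest ladder bitrate not exceeding safety * estimated throughput.

    The estimate is the harmonic mean of the last `window` positive samples,
    which damps the effect of short throughput spikes. Falls back to the
    lowest rung when there is no history or nothing fits.
    """
    ladder = sorted(ladder_kbps)
    recent = [t for t in throughput_kbps[-window:] if t > 0]
    if not recent:
        return ladder[0]
    budget = safety * harmonic_mean(recent)
    candidates = [b for b in ladder if b <= budget]
    return candidates[-1] if candidates else ladder[0]


if __name__ == "__main__":
    print(select_bitrate([4000, 4000, 4000]))  # budget 3600 kbps -> 3000
    print(select_bitrate([8000, 500]))         # the dip dominates the estimate
```

The harmonic mean is chosen here because it is pulled toward low samples, so a single fast download does not trigger an aggressive quality switch; production ABR algorithms typically also consider buffer level.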

Based on the 2023 TPDS editorial data and his excellent performance, Radu Prodan received the 2024 IEEE TPDS Award for Editorial Excellence. His achievement will be recognized by IEEE, and his name will appear on the IEEE award website https://next-test.computer.org/digital-library/journals/td/tpds-award-for-editorial-excellence.

Congratulations!

Authors: Sandro Linder (AAU, Austria), Samira Afzal (AAU, Austria), Christian Bauer (AAU, Austria), Hadi Amirpour (AAU, Austria), Radu Prodan (AAU, Austria), and Christian Timmerer (AAU, Austria)

Venue: The 15th ACM Multimedia Systems Conference (Open-source Software and Datasets)

Abstract: Video streaming constitutes 65% of global internet traffic, prompting an investigation into its energy consumption and CO2 emissions. Video encoding, a computationally intensive part of streaming, has moved to cloud computing for its scalability and flexibility. However, the energy consumption of cloud data centers, especially for video encoding, poses environmental challenges. This paper presents VEED, a FAIR Video Encoding Energy and CO2 Emissions Dataset for Amazon Web Services (AWS) EC2 instances. The dataset also contains the duration, CPU utilization, and cost of each encoding. To prepare this dataset, we introduce a model and conduct a benchmark to estimate the energy and CO2 emissions of different Amazon EC2 instances while encoding 500 video segments of various complexities and resolutions using Advanced Video Coding (AVC) and High-Efficiency Video Coding (HEVC). VEED and its analysis can provide valuable insights for video researchers and engineers to model energy consumption, manage energy resources, and distribute workloads, contributing to the sustainability and cost-effectiveness of cloud-based video encoding. VEED is available on GitHub.
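The quantities VEED records (energy, CO2, duration, CPU utilization, cost) can be related with a common back-of-the-envelope model. The Python snippet below is an assumption for illustration, not the model introduced in the paper: it approximates average power with a linear interpolation between an idle and a full-load wattage based on CPU utilization, converts energy to kWh, multiplies by a grid carbon intensity in gCO2/kWh for emissions, and derives cost from an hourly instance price. All numeric inputs in the example (50 W idle, 150 W at full load, 400 gCO2/kWh, 0.10 USD per hour) are hypothetical.

```python
from dataclasses import dataclass

J_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


@dataclass
class EncodingRun:
    duration_s: float       # wall-clock encoding time in seconds
    cpu_utilization: float  # average utilization in [0, 1]


@dataclass
class InstanceProfile:
    idle_watts: float      # hypothetical power draw at 0 % utilization
    max_watts: float       # hypothetical power draw at 100 % utilization
    price_per_hour: float  # hypothetical on-demand price in USD per hour


def estimate(run: EncodingRun, inst: InstanceProfile, grid_g_per_kwh: float):
    """Return (energy_kwh, co2_grams, cost_usd) for one encoding run."""
    # Linear power model (assumption): interpolate between idle and full load.
    power_w = inst.idle_watts + (inst.max_watts - inst.idle_watts) * run.cpu_utilization
    energy_kwh = power_w * run.duration_s / J_PER_KWH
    co2_g = energy_kwh * grid_g_per_kwh
    cost_usd = inst.price_per_hour * run.duration_s / 3600
    return energy_kwh, co2_g, cost_usd


if __name__ == "__main__":
    run = EncodingRun(duration_s=3600, cpu_utilization=0.5)
    inst = InstanceProfile(idle_watts=50, max_watts=150, price_per_hour=0.10)
    print(estimate(run, inst, grid_g_per_kwh=400))
```

With these inputs the average power is 100 W, so one hour of encoding uses 0.1 kWh, which at 400 gCO2/kWh corresponds to about 40 g of CO2 and costs 0.10 USD.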

Authors: Christian Bauer (AAU, Austria), Samira Afzal (AAU, Austria), Sandro Linder (AAU, Austria), Radu Prodan (AAU, Austria), and Christian Timmerer (AAU, Austria)

Venue: The 15th ACM Multimedia Systems Conference (Open-source Software and Datasets)

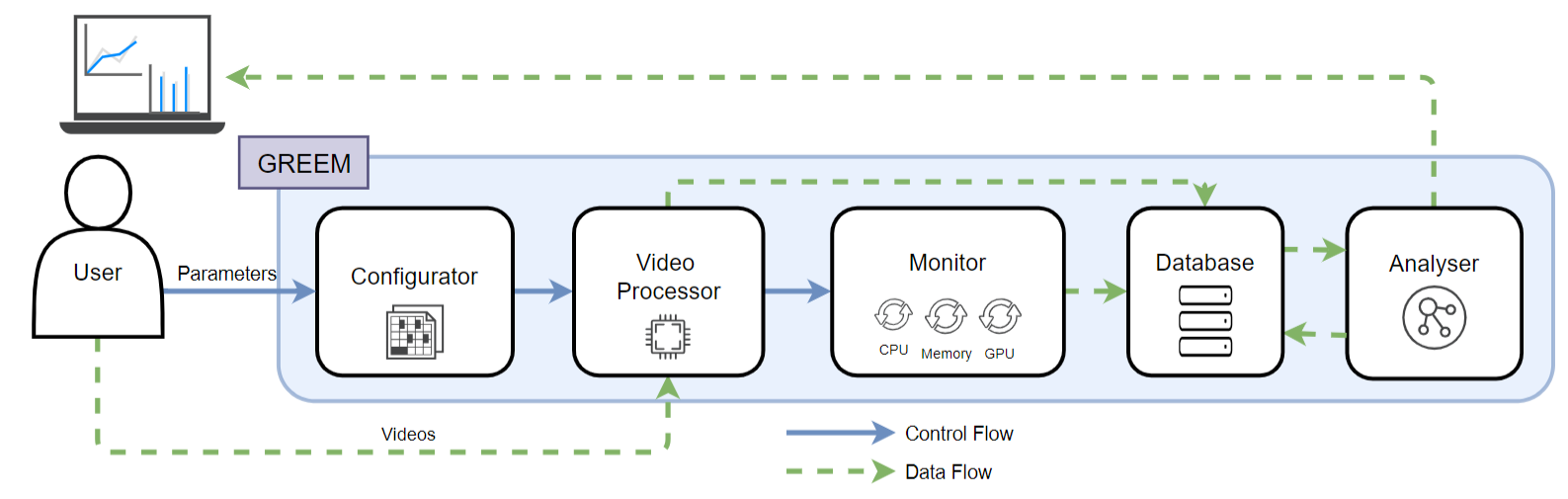

Abstract: Addressing climate change requires a global decrease in greenhouse gas (GHG) emissions. In today’s digital landscape, video streaming significantly influences internet traffic, driven by the widespread use of mobile devices and the rising popularity of streaming platforms. This trend emphasizes the importance of evaluating energy consumption and developing sustainable, eco-friendly video streaming solutions with a low Carbon Dioxide (CO2) footprint. We developed a specialized tool, released as an open-source library called GREEM, to address this pressing concern. This tool measures video encoding and decoding energy consumption and facilitates benchmark tests. It monitors the computational impact on hardware resources and offers various analysis cases. GREEM is helpful for developers, researchers, service providers, and policymakers interested in minimizing the energy consumption of video encoding and streaming.
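Measuring encoding or decoding energy requires sampling some hardware energy counter around the workload. As one possibility, and not necessarily how GREEM works, the Python sketch below reads the Linux powercap (Intel RAPL) counter `energy_uj` before and after a workload and handles counter wraparound using `max_energy_range_uj`. The zone path `intel-rapl:0` (CPU package 0) is an assumption that depends on the machine, and on recent kernels reading `energy_uj` typically requires root privileges.

```python
import time
from pathlib import Path
from typing import Callable, Tuple

RAPL_ZONE = Path("/sys/class/powercap/intel-rapl:0")  # package 0; machine-dependent


def energy_delta_uj(before: int, after: int, max_range_uj: int) -> int:
    """Energy consumed between two counter readings, in microjoules.

    The RAPL counter wraps back to zero after max_range_uj, so a reading
    that went down means one wrap occurred (assuming the measurement
    interval is shorter than a full wrap period).
    """
    if after >= before:
        return after - before
    return (max_range_uj - before) + after


def _read_uj(zone: Path, name: str) -> int:
    return int((zone / name).read_text().strip())


def measure(workload: Callable[[], object], zone: Path = RAPL_ZONE) -> Tuple[float, float]:
    """Run `workload` and return (energy_joules, elapsed_seconds)."""
    max_range = _read_uj(zone, "max_energy_range_uj")
    start_uj = _read_uj(zone, "energy_uj")
    t0 = time.perf_counter()
    workload()
    elapsed = time.perf_counter() - t0
    end_uj = _read_uj(zone, "energy_uj")
    return energy_delta_uj(start_uj, end_uj, max_range) / 1e6, elapsed
```

For example, passing a function that launches an encoder process via `subprocess.run` would attribute the package energy of that interval to the encode. Keep in mind that the RAPL package domain covers the whole CPU socket, so other processes running at the same time are included in the reading.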

Authors: Seyedehhaleh Seyeddizaji, Joze Martin Rozanec, Reza Farahani, Dumitru Roman and Radu Prodan

Venue: The 2nd Workshop on Serverless, Extreme-Scale, and Sustainable Graph Processing Systems Co-located with ICPE 2024

Abstract: While graph sampling is key to scalable processing, little research has thoroughly compared and explained how well it preserves features such as degree, clustering, and distances depending on graph size and structural properties. This research evaluates twelve widely adopted sampling algorithms across synthetic and real datasets to assess their quality under three metrics: degree, clustering coefficient (CC), and hop plots. We find the random jump algorithm to be an appropriate choice for the degree and hop-plot metrics, and random node sampling for the CC metric. In addition, we interpret the algorithms’ sample quality by conducting a correlation analysis with diverse graph properties. We identify eigenvector centrality and path-related features as essential for estimating these algorithms’ degree quality; the number of nodes (or the size of the largest connected component) as informative for CC quality estimation; and degree entropy, edge betweenness, and path-related features as meaningful for the hop-plot metric. Furthermore, with increasing graph size, most sampling algorithms produce better-quality samples under the degree and hop-plot metrics.
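To make two of the compared samplers concrete, the Python sketch below implements random node sampling and random jump sampling on an undirected graph stored as an adjacency dictionary, plus the average local clustering coefficient that could be used to compare a sample against its source graph. The random jump variant shown (a random walk that teleports to a uniformly chosen node with probability 0.15, or whenever it reaches a node without neighbors) is a common formulation and an assumption here; the exact parameters used in the study are not stated in this abstract.

```python
import random
from typing import Dict, Hashable, Optional, Set

Graph = Dict[Hashable, Set[Hashable]]  # undirected: v in g[u] iff u in g[v]


def induced_subgraph(g: Graph, nodes: Set[Hashable]) -> Graph:
    """Keep only the sampled nodes and the edges among them."""
    return {u: g[u] & nodes for u in nodes}


def random_node_sample(g: Graph, n: int, seed: Optional[int] = None) -> Graph:
    """Pick n nodes uniformly at random, then take the induced subgraph."""
    rng = random.Random(seed)
    return induced_subgraph(g, set(rng.sample(list(g), n)))


def random_jump_sample(g: Graph, n: int, jump_prob: float = 0.15,
                       seed: Optional[int] = None) -> Graph:
    """Random walk that teleports to a uniform random node with prob. jump_prob.

    Teleporting (jump_prob > 0) lets the walk escape small or disconnected
    components, so it eventually collects n distinct nodes.
    """
    if not 0 < jump_prob <= 1 or not 1 <= n <= len(g):
        raise ValueError("need 0 < jump_prob <= 1 and 1 <= n <= number of nodes")
    rng = random.Random(seed)
    all_nodes = list(g)
    current = rng.choice(all_nodes)
    visited = {current}
    while len(visited) < n:
        if rng.random() < jump_prob or not g[current]:
            current = rng.choice(all_nodes)
        else:
            # Sorted so that a fixed seed gives a reproducible walk.
            current = rng.choice(sorted(g[current], key=repr))
        visited.add(current)
    return induced_subgraph(g, visited)


def avg_clustering(g: Graph) -> float:
    """Mean local clustering coefficient (nodes with degree < 2 count as 0)."""
    total = 0.0
    for u, nbrs in g.items():
        k = len(nbrs)
        if k < 2:
            continue
        # Each edge among neighbors is seen twice (v->w and w->v).
        links = sum(1 for v in nbrs for w in nbrs if v != w and w in g[v]) / 2
        total += links / (k * (k - 1) / 2)
    return total / len(g) if g else 0.0
```

A quality check in the spirit of the study would compare, for example, `avg_clustering(sample)` with `avg_clustering(g)` across many seeds and sample sizes.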