The diveXplore video retrieval system by Klaus Schoeffmann and Sahar Nasirihaghighi won the award for the best ‘Video Question-Answering-Tool for Novices’ at the 13th Video Browser Showdown (VBS 2024). VBS is an international video search challenge held annually at the International Conference on Multimedia Modeling (MMM), which took place this year (MMM 2024) in Amsterdam, The Netherlands. VBS 2024 was a six-hour challenge made up of many search tasks of different types (known-item search/KIS, ad-hoc video search/AVS, and question-answering/QA) over three datasets, amounting to about 2,500 hours of video content. Some tasks were performed by experts and others by novices recruited from the conference audience.

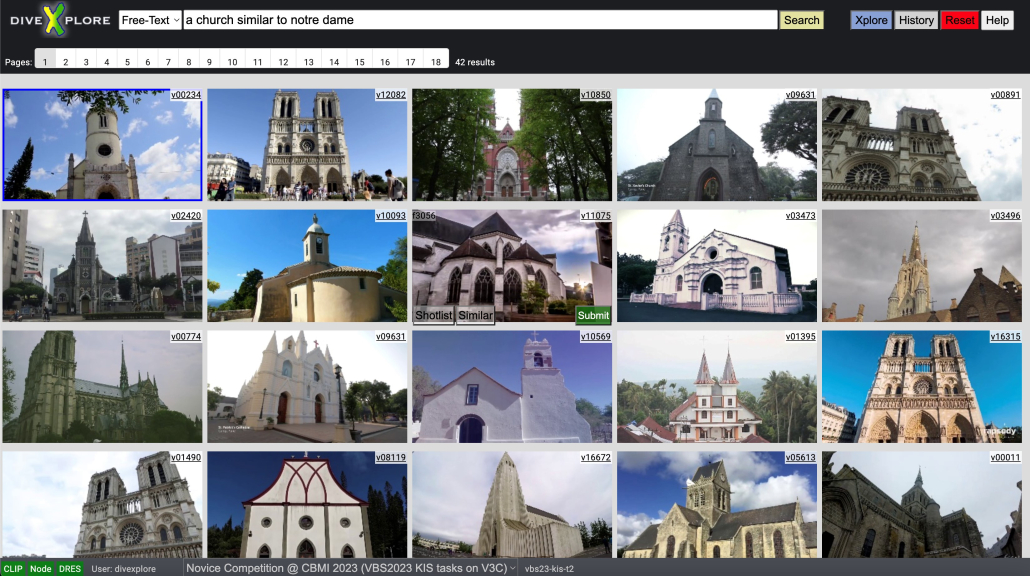

diveXplore teaser:

https://www.youtube.com/watch?v=Nlt7w0pYWYE

diveXplore demo paper:

https://link.springer.com/chapter/10.1007/978-3-031-53302-0_34

VBS info: